The director shared details about the virtual production side of ‘The Mandalorian’ at the Unreal Engine User Group at SIGGRAPH.

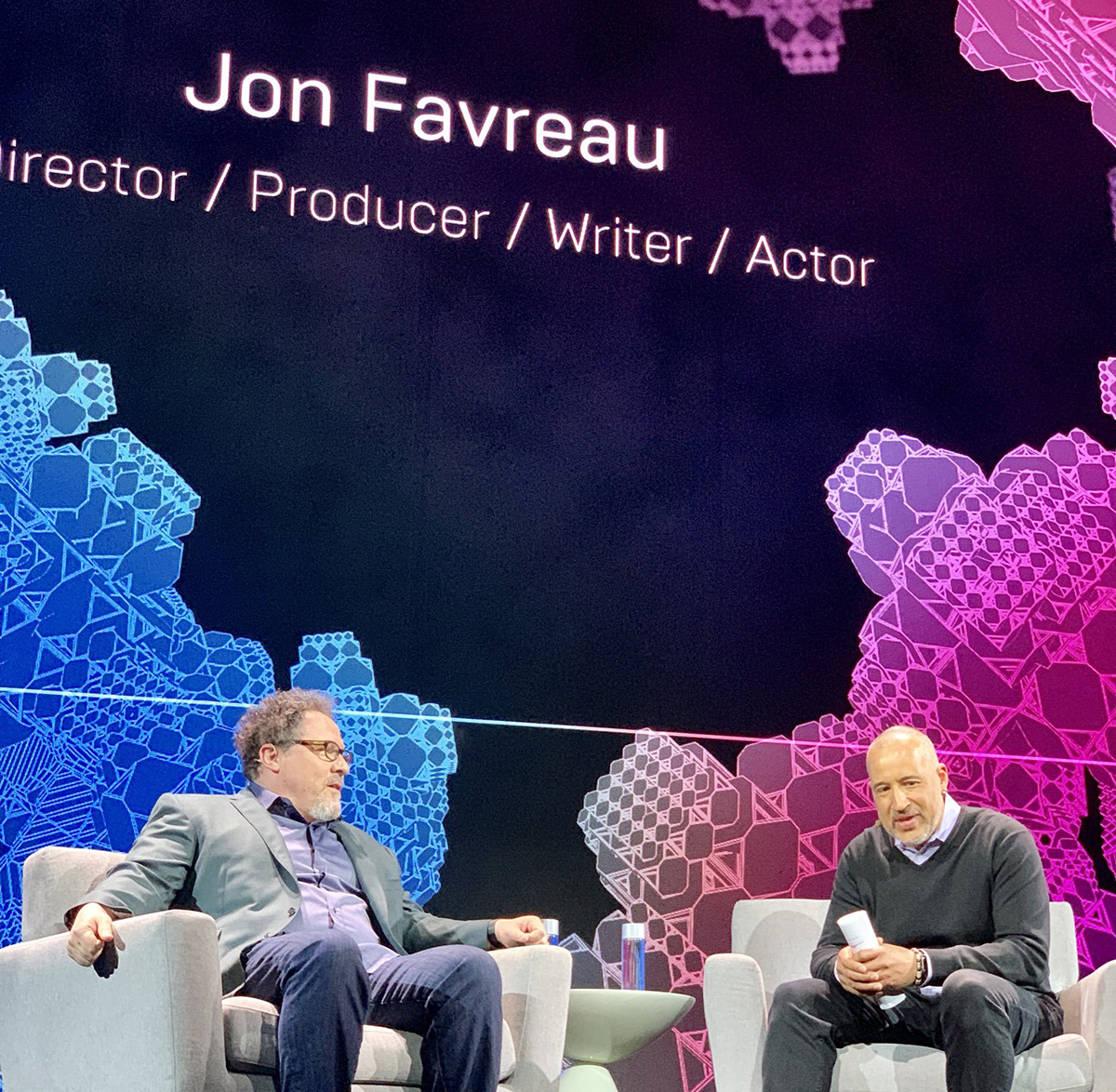

The surprise guest at Epic Games’ Unreal Engine User Group at SIGGRAPH 2019 in LA was Jon Favreau, and he made a major splash at the event. With The Lion King, The Jungle Book, and, soon, The Mandalorian under his belt, Favreau has become closely associated with virtual production.

In a fireside chat with Epic business development manager Miles Perkins, Favreau shared details of his own journey into virtual production, including his VFX and VR experience, and why he embarked with a team of collaborators into real-time and virtual production in a big way on The Lion King and The Mandalorian.

Favreau’s appearance at the event was a big surprise, kept secret right until the end (some audience members who had already set off for the next SIGGRAPH party of the night quickly ran back into the Orpheum Theater in Downtown LA). The director clearly had a captive audience, who were hanging on pretty much his every word.

I loved, in particular, his perspective as a director on how virtual production techniques have aided in his filmmaking, and how they grew out of a few of his frustrations with traditional visual effects photography. The talk also ended up being a fun look back at his own journey into virtual production.

The Jungle Book experience

Favreau said that it was on Jungle Book, “where we were moving greenscreens around, [that] I started to get very frustrated with how long it took in production. Also there was so much CG environment, that no matter how much you planned it, you were still pushing a lot of the decision-making to post-production.”

The director admitted the question was nagging him: could he work on a better greenscreen stage, where the screens didn’t need to be moved around and where they weren’t just relying on a film camera? We all know things would change drastically for The Lion King, but before he got there, Favreau was introduced to VR via his Jungle Book animation supervisor Andy Jones, who was working with Wevr on some VR experiences.

“I was really excited by it, just as a medium, not as a production tool,” he said. “And I saw The Blue, which is the one with the whale, which is really cool, it just was very striking, and I saw the potential of what VR could do. I started actually working with them on a project called Gnomes and Goblins.”

“At that point we were starting to mess around – at the end of Jungle Book – we started messing around with this consumer facing VR equipment and started to develop camera tools, creating basically a multiplayer filmmaking game in VR.”

Jumping into The Lion King

Using The Jungle Book and the Wevr experience as a jumping-off point, Favreau and his team adopted virtual production and VR wholeheartedly for The Lion King. “We built a tool set with Magnopus, and we were working with a lot of different people, different vendors, different platforms. We came up with [a] system by which we did the camera capture for Lion King using filmmaking tools within VR, so that it wasn’t going to be just a VFX person on a box, but it was going to be Caleb Deschanel as my cinematographer.”

“I would have James Chinlund as my production designer,” added Favreau, “and we would build our sets, upload it into VR and you would scour around in VR and have this iterative process where we’d go to the location just like you would in a live action shoot, and discuss camera angles, pick lenses, and Andy would do the animation. So he pre-animated everything and we would shoot it – we’d stand there looking at the lions, and we would shoot it using [these tools].”

Shooting in this virtual production style brought a live action feel to The Lion King, of course, but it also made a big difference to the director’s production process, notably in how notes were handled.

“Usually,” Favreau said, “in post production you’re just giving notes. By the time that note gets from your supervisor to the person in the department, to the person on the box, they’re just going off a checklist, and they’ve worked so hard, and there’s such a big workload. [On The Lion King], we would get the shots back and instead of giving it a layout note, we would pull it back and operate [the equipment] and run the wheels. And then we just said, ‘Here, this is it,’ and then let them conform it and do the render on top of it.”

“When you save those iterations, you can save so much work with the people who are actually the technicians and the artists. I think it’s debilitating to have so much work being done that doesn’t hit the screen. So it maximises the efficiencies, and also I think creates a sharper, more focused, collective of artists that are working on the vision.”

How this is being used for The Mandalorian

Favreau is a busy man. In addition to having just completed The Lion King, he’s also been executive producing The Mandalorian television series, where real-time and virtual production are being used extensively, including in partnership with Epic and ILM. First, they are being used in the planning stages as a kind of previs. “We used the V-cam system where we get to make a movie, essentially in VR, send those dailies to the editor, and we have a cut of the film that serves a purpose that previs would have,” explained Favreau.

Then there are Mandalorian’s LED video walls, which are, in some cases, essentially displaying pre-rendered content and being filmed to provide in-camera composites. Favreau actually thought the LED walls were only going to be used for interactive lighting or as dynamic moving greenscreens (partly to solve his frustrations from Jungle Book). But he then noted Epic and Unreal Engine’s capabilities in delivering real-time rendered frames that could be shown on the LED walls, and be tweaked and moved to deal with parallax shifts and quick on-set decision changes.

Said Favreau: “We got a tremendous percentage of shots that actually worked in-camera, just with the real-time renders in engine, that I didn’t think Epic was going to be capable of. For certain types of shots, depending on the focal length and shooting with anamorphic lensing, there’s a lot of times where it wasn’t just for interactive – we could see in camera, the lighting, the interactive light, the layout, the background, the horizon. We didn’t have to mash things together later. Even if we had to up-res or replace them, we had the basis point and all the interactive light.”

The benefits were many, said Favreau. It meant doing quick turnarounds on a TV schedule and budget, and it also provided a major benefit to the actors and the crew.

“For the actors, it was great because you could walk on the set, and even if it’s just for interactive light, you are walking into an environment where you see what’s around you,” stated Favreau. “Even though [the LED walls] might not hold up to the scrutiny if you’re staring right at it from close up, you’re still getting peripheral vision. You know where the horizon is, you feel the light on you. You’re also not setting up a lot of lights. You’re getting a lot of your interactive light off of those LED walls. To me, this is a huge breakthrough.”

“And it would fool people,” he added. “I had people come by the set from the studio who said, ‘I thought you weren’t building this whole set here,’ and I said, ‘No, all that’s there is the desk.’ Because it had parallax in perspective, it looked, even from sitting right there, if you looked at it casually, you thought you were looking at a live action set.”

On the future of real-time and virtual production

Favreau is definitely a convert to, and a proponent of, real-time rendering engines and virtual production on set and in pre- and post-production. In fact, he suggested this could all have a large democratization effect.

“I think it creates this dynamic environment for creative types, and not just people that have the resources of a studio, [just] people with a gaming system. It feels like everybody coming to set is bringing their best self to the set. So, your cinematographer isn’t lighting to something that is greenscreen that’s going to happen later. They’re in there, telling you little intricacies, and I think, part of that, at least from what I’ve observed, is those happy accidents. Those things [where] because someone liked something a certain way, or those kinds of little things. That’s the magic of filmmaking.”

“I think it also forces you to make creative decisions early and not kick the can down the road,” he said. “Because [typically] you go to a set, you put up a greenscreen, you figure it out later. But here you have all of these brilliant people [and] we have a hundred years of experience making cinema, why abandon that just because we’re disrupting the set? Let’s inherit the skillset of these great artists and build tools out.”

This week at befores & afters is #realtimewrapup week, with reports on real-time tech and virtual production, direct from SIGGRAPH 2019.