Behind the virtual production from CBS VFX for the HBO Max docuseries ‘One Perfect Shot’.

In episode one of One Perfect Shot, the docuseries based on the popular Twitter account, director Patty Jenkins walks around a virtual re-creation of the WWII No-Man’s Land environment from her film, Wonder Woman.

This was the result of the director being filmed against greenscreen and tracked into a synthetic landscape, complete with synthetic actors, crafted by CBS VFX. During the shoot, Jenkins was able to walk around the digital set in and behind explosions, props and objects, since the environment was live-composited on the day of filming.

To find out more about how CBS VFX made this possible for the Ava DuVernay, ARRAY Filmworks, Warner Bros. Unscripted Television and HBO Max docuseries, befores & afters spoke to Craig Weiss, executive creative director / visual effects supervisor for CBS VFX, and Jim Berndt, who leads the virtual production group there.

They discussed the company’s proprietary Parallax virtual production system, which has been combined with Epic Games’ Unreal Engine and stYpe camera tracking tools to allow for the virtual set process, placing filmmakers Jenkins, Aaron Sorkin, Kasi Lemmons, Jon M. Chu, Malcolm D. Lee and Michael Mann into scenes from Wonder Woman, The Trial of the Chicago 7, Harriet, Crazy Rich Asians, Girls Trip and Heat.

b&a: What were you asked to do for One Perfect Shot?

Craig Weiss: When they came to us they wanted to do something a little bit more unique for a documentary. They wanted to take advantage of some of the immersive technology that exists today that really hadn’t been used in documentaries. It was pitched as something like the Weather Channel, where the presenters really walk around things.

Our core business has always been visual effects, but we’ve been doing a lot in the virtual production space for quite a while, not necessarily LED walls, but greenscreen, including real-time greenscreen composite with Unreal Engine backgrounds. So when they came to us with the idea, we actually went out and spent a little time over a break and came back and pitched what we thought we would like to do.

Originally they were referencing frames from The Dark Knight and other movies. We took one of the frames from The Dark Knight and went ahead and built out the corner there in Chicago where Heath Ledger’s character walks into the bank. It really got their attention. They saw the potential of this.

b&a: How much fidelity, how much photorealism, did you aim for? I really like where you settled at.

Craig Weiss: The goal was never, from the beginning, to ‘fool’ the viewer into thinking they were back on the movie set. That wasn’t the goal, to say, ‘Hey, this is you in the movie frame.’ We always wanted to treat it as a stylization, to get a level of photorealism that kept you there. But at the same time, we couldn’t create feature-level quality for every shot or every leaf that was in there. So I think we found the right balance.

b&a: Jim, coming to you, what did you need to solve using existing tools for getting each director inside that environment?

Jim Berndt: It’s always challenging because we have certain constraints, which are usually the desired frame rates and locking our environments to what they’re doing with different cameras on set. Typically, you have a free-form Steadicam and also a crane camera that you want to allow the creators to move anywhere in that environment. So when we first started designing these environments, we had to pay a lot of attention to what we called the sweet spot, because these are very large environments. They can go on for blocks and miles sometimes.

We have a great team of artists that put a lot of effort in trying to make a particular level of resolution for what we’re going to display on set and then find the right spot to register when the director walks into the location.

Craig Weiss: One thing to mention here is that when you’re doing visual effects, you get really detailed on matching the lighting of the environment you’re putting your live-action actor into. In this show, they were aware of that, of course, but they also always wanted the directors to look good. Because if somebody’s walking around in a darker environment and you really want to match them realistically, they won’t come through. That was a trade-off and a compromise to get the lighting to match the environment they were in.

b&a: How did shooting take place on the day?

Craig Weiss: First, what we found was that the ability for the director and DP to just ‘camera hunt’, look for things and see that ‘real’ set behind just changes everything. Even though we know it’s not a final product, they gain a lot of confidence, and they seem to be more engaged when we’re doing that than when just shooting on greenscreen and adding everything later.

Jim Berndt: For this specific show, what we settled on doing was a technical rehearsal day, where we test the environments, make sure that everything loads correctly and it performs at the desired frame rate. And then a rehearsal day with the creatives, just to plan out the shoot so that they would predetermine locations for the cameras and for the talent as they would be on set. And then the actual shoot day, where everything just came together.

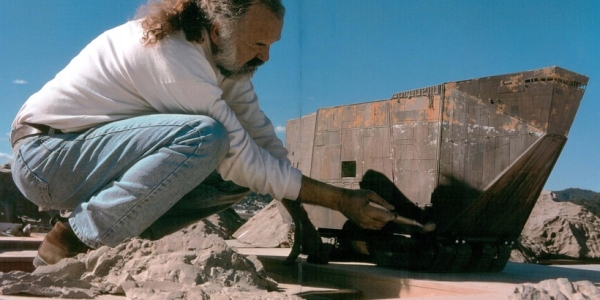

Here what we did was combine camera tracking, but also object tracking. For example, the director signs their name at the end, or they could walk around objects, behind explosions, or in front of other CG attributes. Or they could pick something up that was a prop that they wanted to talk about for a specific film.

That then added a little bit of complexity, but with the custom software that we use and the tracking company that we use, I think we were pretty successful at pulling it off in a way that everybody was pleased with.
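As a rough illustration of how a tracked person can end up in front of some CG elements and behind others, here is a minimal per-pixel sketch in Python. It is not CBS VFX’s Parallax system, nor any Unreal Engine or stYpe API. Every name in it (`is_greenscreen`, `composite_pixel`, `composite_row`, the `threshold` value, and the idea of a single `talent_depth` taken from tracking) is a hypothetical assumption made for this example.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (r, g, b), each channel in 0.0..1.0


def is_greenscreen(pixel: Pixel, threshold: float = 0.3) -> bool:
    """Crude hard key (assumption): a pixel counts as greenscreen when
    green exceeds both other channels by more than `threshold`."""
    r, g, b = pixel
    return g - max(r, b) > threshold


def composite_pixel(live: Pixel, cg: Pixel,
                    cg_depth: float, talent_depth: float) -> Pixel:
    """Pick the CG or live-action pixel using the key and a depth test.

    `talent_depth` is the tracked distance from camera to the person
    (hypothetical; here one value for the whole person). `cg_depth` is
    the rendered depth of the CG scene at this pixel, in the same units.
    """
    if is_greenscreen(live):
        return cg            # nothing but greenscreen here: show the CG set
    if cg_depth < talent_depth:
        return cg            # CG element is closer to camera: it occludes the person
    return live              # person is closer: they cover the CG


def composite_row(live_row: List[Pixel], cg_row: List[Pixel],
                  cg_depth_row: List[float], talent_depth: float) -> List[Pixel]:
    """Apply the per-pixel rule across one row of an image."""
    return [composite_pixel(l, c, d, talent_depth)
            for l, c, d in zip(live_row, cg_row, cg_depth_row)]
```

With a person tracked at 5 units from camera, a CG explosion rendered at 2 units would cover them, while a CG sky at 100 units would sit behind them. A production keyer would produce soft mattes and handle spill and edges, which this sketch leaves out.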

b&a: And those environments, are you building those up in a very traditional way in Max or Maya? And then are they run as Unreal Engine assets?

Craig Weiss: It was a hybrid. Unreal has some really great modular assets and Megascans that work really well for certain things: plants, trees, fields. They do some really wonderful grass and trees. So for Harriet, that seemed like a natural fit.

For Heat, on the other hand, we had to match two blocks of downtown LA. Every car and every building had to look exactly right. So that was a traditional pipeline, where we modeled in Maya and then used a lot of Substance textures and brought that in. Then something like Crazy Rich Asians was a hybrid approach. The cathedral we modeled, but we used some Unreal Engine assets for flowers. Girls Trip was something that had to be matched as well, so that was more traditional.

b&a: I was curious, for some of the more recent films, say Wonder Woman, whether you wanted to, or whether it was possible to, use a LIDAR scan of the set, or even a point cloud render of the digital environment. Was that something that was necessary, or that you even looked at doing?

Craig Weiss: We did. When we first started this, we asked, ‘What’s available?’ We talked about getting scanned sets from the VFX companies. But we knew we had to create one asset that could work on set with the director and also work in post as an asset for rendering with ray-tracing. We didn’t want to have multiple assets. So we knew that anything that would’ve been available would’ve served as a template only. If we had had a LIDAR scan or a layout, it would’ve saved a lot of time. But by the time they got hold of these things, we were already on our way.

b&a: What kind of tracking approach was there on set?

Jim Berndt: We have been working with a company based out of Croatia called stYpe that provides tracking, mostly for large-scale sports events. So they’re very well seasoned when it comes to live events, where things cannot go wrong. At the same time, they’re creating their own solutions and offerings for tracking for Unreal and for Unity and whatever engine you need in the backend.

Craig Weiss: One thing I might add is that a real benefit for the show creators was that once we had this environment, we could do cutaways. We literally brought in the showrunner and would have a virtual camera, and we’d be there on our stage and we could go and block out ideas for shots this way. They’d say, ‘Oh boy, I’d love to go from here to here.’ We could just literally do that in a session and record that, and then deliver that off to them as supplemental footage that they could use.

b&a: Was there anything else that you particularly wanted to mention that I didn’t ask about with the production?

Craig Weiss: Well, we did explore LED walls in the beginning, and we quickly ruled that out, just because of time. Also, a lot of what we did here was head to toe, with the director walking around in between, in front of, behind, objects, which would’ve made it impossible.

Some people might ask the question, why didn’t you do stuff on an LED wall? But an LED wall is when you’re head to toe and you’re dressing the ground. It’s a practical ground. And so, we looked at that for about a minute and then said, ‘Oh, it could never happen.’ Especially when you literally had two days in between shooting episodes and you would have to clear everything and re-dress it.