How virtual production toolset ThruView was used on the new HBO show.

Have you watched a scene set inside a moving train carriage in the past few years? It’s highly likely the scene was filmed on a static carriage set with bluescreen or greenscreen outside the windows, sometimes with interactive lighting running on the set.

That approach means, of course, that the sequence can be filmed at a controlled location at any time, with the moving backgrounds (perhaps live-action or a digital environment) composited into the windows later in post-production.

But what if you could film train carriage scenes in a controlled setting with the necessary outside environment, and interactive lighting, achieved right there, i.e. in-camera?

Sam Nicholson’s Stargate Studios has implemented exactly that with its ThruView system. It’s a setup that taps into the current virtual production trend, relying on interactive footage, 4K screens, and connected lighting running on the set with the aid of real-time rendering. It also opens up the possibility of eliminating greenscreen and compositing in post.

Nicholson has been demo’ing ThruView at a few events recently, and it’s on full view in the new HBO show, Run.

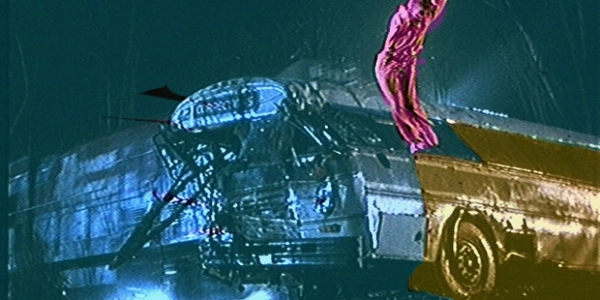

Here, the system was used for moving train scenes in which live-action actors performed in a dining car interior while 4K screens displayed outside imagery through the train windows. Lighting, reflections and the imagery itself were controlled dynamically. In the end, ThruView was used for 3,000 shots and 40 train windows during production.

Virtual production beginnings

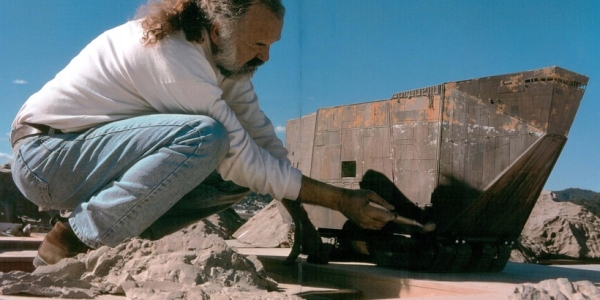

Despite the recent proliferation of virtual production projects, Stargate Studios itself has a long history in the field. Shows like Pan-Am and Beauty and the Beast saw the studio implement a ‘virtual backlot’ approach for shooting on greenscreen, with live compositing of the environment. It came into play for a test done for the Star Wars: Underworld television show, too (years before The Mandalorian took advantage of LED walls and real-time rendered sets).

Still, notes Nicholson, several years ago there remained a general issue with virtual set approaches: they didn’t result in ‘final pixels’. “You had this problem, which was that you spent all sorts of money and time getting a good previs. If you shot it all on greenscreen, you were able to do some things that let people see what they were doing, and it was great, but then you had to spend that money all over again in post. So, financially, greenscreen virtual production, unless you can achieve finished pixels, did not make financial sense.”

What has changed, says Nicholson, is that technological leaps and bounds were made in areas such as GPU-based rendering (ThruView relies on several NVIDIA RTX 6000s) and video input/output (the system employs Blackmagic Design 8K Decklinks). Real-time game engines also changed the game (Run utilized Epic Games’ Unreal Engine), ultimately enabling multiple 8K streams to be displayed on the monitors used for the show, at up to 60 frames per second if necessary.

How to make it work for ‘in-camera’

ThruView was relied upon for more than just streaming the external imagery, too. With Unreal Engine incorporated into the mix for off-axis projection, the imagery would display correctly as the parallax changed. The system was also able to incorporate pixel-tracked DMX lighting, meaning the lighting on set was slaved to the projected image.
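For readers unfamiliar with off-axis projection, here is a minimal sketch of one widely used formulation, in which the view frustum is recomputed from the tracked camera position relative to a fixed screen. It is not ThruView’s or Unreal Engine’s actual code; the function name, the corner-based screen description and the example dimensions are all assumptions made for illustration.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)

def off_axis_frustum(lower_left, lower_right, upper_left, eye, near):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    The screen is described by three of its corners in world space and
    `eye` is the tracked camera position. All names are hypothetical.
    """
    right_axis = _normalize(_sub(lower_right, lower_left))
    up_axis = _normalize(_sub(upper_left, lower_left))
    normal = _normalize(_cross(right_axis, up_axis))  # screen toward viewer

    to_ll = _sub(lower_left, eye)
    to_lr = _sub(lower_right, eye)
    to_ul = _sub(upper_left, eye)

    distance = -_dot(to_ll, normal)  # eye-to-screen distance along the normal
    if distance <= 0:
        raise ValueError("eye must be in front of the screen")

    scale = near / distance
    return (_dot(right_axis, to_ll) * scale,
            _dot(right_axis, to_lr) * scale,
            _dot(up_axis, to_ll) * scale,
            _dot(up_axis, to_ul) * scale)

# Assumed example: a 2 m x 1 m screen in the z = 0 plane.
SCREEN = ((-1.0, -0.5, 0.0), (1.0, -0.5, 0.0), (-1.0, 0.5, 0.0))
centered = off_axis_frustum(*SCREEN, eye=(0.0, 0.0, 2.0), near=0.1)
shifted = off_axis_frustum(*SCREEN, eye=(0.5, 0.0, 2.0), near=0.1)
```

With the camera centered, the frustum is symmetric. Once the camera moves sideways, the left and right extents become unequal, which is what keeps the perspective through the ‘window’ consistent as the viewpoint changes.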

“Imagine you’re in a train and it goes into the tunnel – all the lights have to go out,” outlines Nicholson. “And in other scenes, the sun is always moving and the trees are casting shadows. So, the integrated kinetic lights system is a very big part of ThruView, as well as spatial camera tracking, which is markerless and wireless. It lets people shoot with three or four cameras at once.”
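As a rough illustration of what ‘pixel-tracked’ lighting could mean in practice, the sketch below averages the colour of a region of the displayed frame and converts it to 8-bit levels of the kind a DMX fixture channel accepts (0 to 255). This is an assumption-laden toy, not Stargate’s implementation: the frame layout (rows of RGB tuples in the 0.0 to 1.0 range) and both function names are hypothetical, and actually sending the values to a DMX interface is left out.

```python
def average_color(frame, x0, y0, x1, y1):
    """Average the RGB values inside the half-open region [x0, x1) x [y0, y1).

    `frame` is assumed to be a list of rows, each row a list of
    (r, g, b) tuples with components between 0.0 and 1.0.
    """
    total = [0.0, 0.0, 0.0]
    count = 0
    for row in frame[y0:y1]:
        for r, g, b in row[x0:x1]:
            total[0] += r
            total[1] += g
            total[2] += b
            count += 1
    if count == 0:
        raise ValueError("region contains no pixels")
    return tuple(channel / count for channel in total)

def to_dmx_levels(color):
    """Map 0.0-1.0 colour components to clamped 8-bit channel levels."""
    return tuple(max(0, min(255, round(c * 255))) for c in color)

# Assumed example frames: bright daylight, then the train entering a tunnel.
daylight = [[(1.0, 1.0, 1.0)] * 4 for _ in range(2)]
tunnel = [[(0.0, 0.0, 0.0)] * 4 for _ in range(2)]

daylight_levels = to_dmx_levels(average_color(daylight, 0, 0, 4, 2))
tunnel_levels = to_dmx_levels(average_color(tunnel, 0, 0, 4, 2))
```

Here the tunnel frame drives every channel to zero, matching the ‘all the lights have to go out’ case Nicholson describes. A real system would presumably sample many regions per frame and smooth the result over time.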

“The proprietary technologies of ThruView are,” continues Nicholson, “custom markerless and tracker-less camera tracking, which is affordable on multiple cameras, integrated pixel-tracked lighting, as well as very high density pixel throughput for, say, 40K images. So if you’re shooting in 8K, for instance, everything can be finished at 8K, which is amazing.”

Aside from having the effects imagery ‘finished’ in the photography, one of the advantages of ThruView on Run was providing those on set with a more immersive feeling during the shoot. “It’s a huge advantage with a real-time process like ThruView for the actors and the director,” says Nicholson. “They get to see it while the scene is happening. It’s a real creative collaboration and partnership on set, where you’re literally doing a visual mix live. And that’s exciting. It’s a real-time mix.”

Doing more upfront to save down the line

One of the intentions behind using ThruView on Run was that the shots would be ‘finished’, with ‘final pixel’ VFX through the train windows. That would mean not having to spend money in post-production on compositing. But, of course, it meant spending money in pre-production to produce the imagery to display on the screens during the shoot and to set ThruView up for shooting.

There’s also a new aspect to this kind of production: it involves close collaboration across departments, especially with the art department and the director of photography. “It really is such a radically different approach than what you would normally do,” states Nicholson. “We now say, instead of ‘fix it in post’, it’s ‘fix it in prep’. And that means that producers need to release visual effects money in pre-production, because you have to shoot plates and you have to construct models that have to be ready when you walk on set.”

On Run, Nicholson says the show’s makers were willing to commit to the ThruView technique months in advance. “Which gave us time to shoot, time to organize the plates and be ready on set. It’s much more akin to production design and construction at that point, because everybody’s used to the fact that if you don’t build a set, you’re not going to have anything to shoot on.”

Nicholson also notes that there remained a swathe of visual effects work on the show, including for shots outside the train windows (this was carried out by Stargate Studios). That work related to continuity, timing, lighting, and adjustments to the imagery. “This is not a panacea where you can throw out greenscreens and throw out post production,” acknowledges Nicholson. “You need very high-level artists who can go in and thread the needle of matching the look, making small adjustments, adding or moving certain things once everything’s cut together.”

Indeed, Nicholson is at pains to point out that this kind of set-up is not suited to every shooting situation. “If you have one greenscreen shot, forget it, put up a green rag and go. But if you have a hundred shots or 200 or a thousand shots, then there’s a great advantage to doing it in-camera. You get the shallow depth of field, camera shake, motion blur, kinetic lighting. They are all things which we avoid in greenscreen, but now they become your friend.”
