The infamous 1997 film had its own form of bullet-time, before bullet-time.

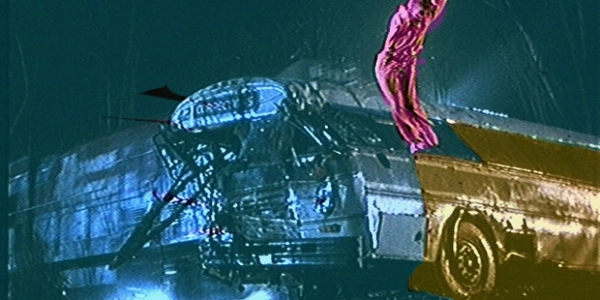

The film might have a notorious place in the history of comic book adaptations, but Joel Schumacher’s Batman & Robin, released 25 years ago this week, is notable for some innovative visual effects work. In particular, the ‘frozen in time’ moments, in which Mr. Freeze (Arnold Schwarzenegger) blasts unsuspecting victims with his ray gun, capitalized on photogrammetry, stereo imaging and still-new CG techniques.

This was the work of Warner Digital, led by senior visual effects supervisor Michael Fink and visual effects supervisor Wendy Rogers. Lead CG artist Joel Merritt brought to Warner Digital a technique he had already developed to help accomplish the ‘frozen moment’ shots – something that now might be called image-based modeling.

Effectively, the technique was required because the shots involved moving cameras, going from live action right into the frozen moment. That required a digi-double of the actor, animal or object, which came from a multi-camera set-up, photogrammetry and a digital model derived from them.

One thing to remember is that this was before the famous bullet-time effect achieved in The Matrix, but after a number of frozen-time rigs had first been developed and used in commercials and films, and after some significant stereography and image-based modeling work by Buf (which also happened to work on Batman & Robin). Paul Debevec’s research on image-based modeling was also out by this time, and ILM had been capitalizing on its own projection mapping techniques for several years.

Interestingly, too, Warner Bros. had asked some of the filmmakers from Mass.Illusion (who eventually worked on The Matrix as Manex Visual Effects) about a ‘Mr. Freeze Effect’ in the mid-’90s. You can find out more about that in befores & afters’ podcast interview with Frank Gallego about the bullet-time rig.

Meanwhile, with Batman & Robin now celebrating its 25th anniversary, befores & afters is re-publishing this interview with Merritt originally published at vfxblog for the 20th celebration.

Prior to Batman & Robin, what had you been working on that led to you coming to Warner Digital?

Joel Merritt: Sometime back in the late 1980s I had an Amiga 1000, which was, of course, rather advanced for its time as far as graphics were concerned. One morning in early 1988 I realised that if you painted what today we would call a Z-buffer, so that each pixel had a depth as well as a colour associated with it, you could have something like a three-dimensional object that you could rotate and so forth. I began to investigate that sort of mapping further – I didn’t even know it was called a Z-buffer back then. I just realised that you could essentially put a pixel at a given depth, rotate a virtual camera, and see the perspective change from that.
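The idea of giving every pixel a depth and then rotating a virtual camera can be sketched in a few lines. This is a minimal illustration under our own assumptions, not Merritt’s Amiga code: the source image is treated as an orthographic view, the virtual camera orbits a pivot about the vertical axis, and `reproject_depth_map` and its parameters are hypothetical names.

```python
import math


def reproject_depth_map(colors, depths, yaw_degrees, pivot_depth, distance=10.0):
    """Re-render a per-pixel depth map (a z-buffer) from a rotated virtual camera.

    Hypothetical sketch: the source image is treated as an orthographic view,
    depths[y][x] is each pixel's depth, and the virtual camera orbits the point
    at pivot_depth about the vertical axis. Returns a new image; cells that no
    source pixel lands on stay None (holes).
    """
    h, w = len(depths), len(depths[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    a = math.radians(yaw_degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[None] * w for _ in range(h)]
    zbuf = [[math.inf] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Lift the pixel to a 3D point, centred on the rotation pivot.
            px, py, pz = x - cx, y - cy, depths[y][x] - pivot_depth
            # Rotate the point about the vertical (y) axis.
            rx = px * cos_a + pz * sin_a
            rz = -px * sin_a + pz * cos_a
            d = rz + distance  # distance in front of the virtual camera
            if d <= 0:
                continue  # behind the camera, not visible
            # Perspective projection; a focal length equal to `distance`
            # keeps points on the pivot plane at their original scale.
            u = int(round(cx + distance * rx / d))
            v = int(round(cy + distance * py / d))
            # Z-buffer test: keep only the nearest point landing on each pixel.
            if 0 <= u < w and 0 <= v < h and d < zbuf[v][u]:
                zbuf[v][u] = d
                out[v][u] = colors[y][x]
    return out
```

For a one-row image `[["a", "b", "c"]]` lying flat on the pivot plane, a yaw of 180 degrees mirrors it to `[["c", "b", "a"]]`, while a yaw of 90 degrees collapses the row onto the centre column, where the depth test keeps only the nearest pixel. Forward-mapping like this leaves holes when the view changes a lot, which is one reason to move on to points and triangles.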

I had done something where I took an aerial photograph and combined it with elevation data, and then you could render it from a given view, starting with the furthest line away and drawing line after line as you moved towards the camera. Essentially a painter’s algorithm. Then you could fill in the details, what the elevation would look like from a virtual perspective. I don’t remember exactly how I came up with the idea of using multiple textures, but it was sometime in the late ’80s or early ’90s. I was at home working on the Amiga, building essentially these multiple views, which you could do then.
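Rendering an aerial photograph draped over elevation data, far rows first, can be sketched as a painter’s algorithm. This is a simplified illustration under our own assumptions: the camera looks along increasing map rows, each map column maps straight to a screen column (no horizontal perspective), and `render_heightfield` with its parameters is a hypothetical helper.

```python
def render_heightfield(heights, colors, cam_row, cam_height, screen_h, horizon, scale):
    """Render a height map from a viewpoint using a painter's algorithm.

    Hypothetical sketch: the camera is positioned at map row `cam_row`, looks
    along increasing row numbers, and each map column maps straight to a
    screen column (no horizontal perspective, to keep it short).
    """
    map_w = len(heights[0])
    screen = [[None] * map_w for _ in range(screen_h)]
    # Start with the furthest row and work towards the camera; nearer rows
    # simply paint over whatever was drawn behind them.
    for row in range(len(heights) - 1, cam_row, -1):
        dist = row - cam_row
        for x in range(map_w):
            # Perspective: height differences shrink with distance.
            top = int(horizon + (cam_height - heights[row][x]) * scale / dist)
            for sy in range(max(top, 0), screen_h):
                screen[sy][x] = colors[row][x]
    return screen
```

With a tall far row and a small near hill, the far row is drawn first and the hill then paints over the bottom of it, so no explicit depth test is needed: drawing order alone resolves visibility.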

You have multiple cameras and multiple images on the screen, and you’ve figured out where the cameras are and what the field of view is. When you click on a given pixel, what you’re really doing is shooting a ray out of that camera, through that pixel, into the environment. When you shoot two or more rays, they may not perfectly intersect, but you can set it up as a least-squares problem, where you basically ask, ‘What point best fits where those rays are pointing, where they come closest to intersecting?’
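The least-squares step can be written down directly. A standard formulation, used here as an illustration rather than as the exact solver Merritt wrote, finds the point that minimises the summed squared perpendicular distance to every ray, which reduces to a 3x3 linear system. The function names are hypothetical, and rays are treated as infinite lines.

```python
import math


def closest_point_to_rays(origins, directions):
    """Least-squares point closest to a set of 3D rays (treated as infinite lines).

    Minimises the sum of squared perpendicular distances to every ray by
    solving the 3x3 normal equations sum(I - d d^T) p = sum((I - d d^T) o).
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for o, d in zip(origins, directions):
        n = math.sqrt(sum(c * c for c in d))
        d = [c / n for c in d]  # unit direction
        for i in range(3):
            for j in range(3):
                # (I - d d^T) projects onto the plane perpendicular to the ray.
                m = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += m
                b[i] += m * o[j]
    return _solve3(A, b)


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(A, b):
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no unique best-fit point")
    # Cramer's rule: replace column k of A with b.
    result = []
    for k in range(3):
        Ak = [[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        result.append(_det3(Ak) / det)
    return result
```

Two rays that really cross, for example along x through (0, 2, 3) and along y through (1, 0, 3), give their intersection (1, 2, 3). Two skew rays, along x at z = 0 and along y at z = 2, give the midpoint between them, (0, 0, 1), which is the ‘closest to intersecting’ answer.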

What you can do then with these pixel rays is figure out a three-dimensional point in space, and if you connect enough of these points (not a huge number by today’s standards, maybe a hundred) you can start building an outline of where the various objects are. Each one of the photographs is then essentially a texture that can be glued on. The next thing you do is go into a mode where you connect three of these three-dimensional points at a time to make triangles.
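Gluing a photograph onto a triangle amounts to projecting each corner back into the camera that took the photo and using the result as a texture coordinate. The sketch below assumes a simple pinhole camera described by a position, three axis vectors and a horizontal field of view; the helper names and the camera tuple layout are hypothetical. It also rejects three points that lie on one line, since they cannot form a triangle.

```python
import math


def is_valid_triangle(a, b, c, eps=1e-9):
    """Three points form a triangle only if they are not all on one line."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = [ab[1] * ac[2] - ab[2] * ac[1],
             ab[2] * ac[0] - ab[0] * ac[2],
             ab[0] * ac[1] - ab[1] * ac[0]]
    # The cross product's length is twice the triangle's area; zero means collinear.
    return math.sqrt(sum(v * v for v in cross)) > eps


def texture_coords(point, cam_pos, cam_axes, fov_degrees, width, height):
    """Project a 3D point into a photograph to find where to sample its texture.

    Hypothetical pinhole model: cam_axes holds the camera's right, up and
    forward unit vectors in world space; fov_degrees is the horizontal field
    of view. Returns normalised (u, v) with v growing downwards, or None if the
    point is behind the camera.
    """
    rel = [point[i] - cam_pos[i] for i in range(3)]
    x, y, z = (sum(axis[i] * rel[i] for i in range(3)) for axis in cam_axes)
    if z <= 0:
        return None
    f = (width / 2) / math.tan(math.radians(fov_degrees) / 2)  # focal length in pixels
    px = width / 2 + f * x / z
    py = height / 2 - f * y / z  # image rows run top to bottom
    return px / width, py / height


def triangle_uvs(tri, camera):
    """Texture coordinates for one triangle's corners in one photograph."""
    if not is_valid_triangle(*tri):
        raise ValueError("degenerate triangle: points are collinear")
    return [texture_coords(p, *camera) for p in tri]
```

With one set of texture coordinates per photograph, each triangle can be textured from any of the cameras that saw it, which is what makes blending between photographs possible later.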

You build the triangles up and then you have a crude, low-resolution version of the surface of the object. I had done some facial tests, originally in 1990 on my Amiga. Then, when I was working at Discreet Logic, I did some additional work where I built a low-resolution version, put the two textures on and rotated it.

Around that time, there was a growing use of photogrammetry and stereo imagery to capture geometry from photographs – Paul Debevec, for example, had done his thesis in this area – and there was some other frozen moment work being done in commercials and other projects. Was any of that under consideration when you came up with the technique?

Joel Merritt: I certainly had some understanding of photogrammetry and stereo imagery techniques, but I didn’t have a deep understanding of either at the time. When I started this I had very little formal computer graphics training. I started graduate school at the University of Illinois at Chicago in fall 1988. Intense learning of computer graphics followed, but at the time photogrammetry and stereo imagery were not yet widely integrated into computer graphics. I was doing research on volumetric data from MRI images.

The general terms for this sort of thing in CG are now image-based modelling and image-based rendering. As I recall, the steps I took to develop the technique were:

1988: mapping images to specific depths using a z-buffer.

Circa 1989: My memory is fuzzy on this, given that it was almost 30 years ago. Take several photographs of a person. The person rotates as the photographs are taken. When you are done you have front, right, left, and back views, as well as 3/4 views. Now the idea is to build 6 z-buffers, representing orthogonal views of the person. You could think of these as defining an object made up of little cubes about the size of a pixel, kind of like Minecraft. Instead of a volumetric data set where the object was made of little cubes (voxels) filling in the entire object, this object only had little opaque cubes on the surface. I called them saxels, for surface area elements. Then the image pixels were mapped to the saxels and blended based on the virtual camera position.

Late 1990: I got rid of the saxels and used points and triangles instead. You could find the points by triangulation, and three points make a triangle, as long as they’re not all on the same line. This made building the model much easier. Images were still blended based on the camera position.

1994: same technique with full color on an SGI machine at Discreet Logic.
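The ‘saxel’ step from circa 1989, six orthogonal z-buffers defining an object made only of surface cubes, can be illustrated with a small voxel grid. In Merritt’s process the z-buffers came from photographs. Here, purely so the example is self-contained and testable, we generate them from a known voxel solid and then rebuild the surface cells from them. The function names and the `(axis, sign)` keying are our own assumptions, and the step that maps and blends image pixels onto the saxels is not shown.

```python
def depth_maps_from_solid(solid, n):
    """Six orthogonal z-buffers of a voxel solid inside an n*n*n grid.

    solid is a set of (x, y, z) cells. Keys are (axis, sign): sign +1 looks
    along +axis from the low side, -1 along -axis from the high side. Each
    map[i][j] holds the distance to the first filled cell, or None.
    """
    maps = {}
    for axis in range(3):
        others = [a for a in range(3) if a != axis]
        for sign in (1, -1):
            dmap = [[None] * n for _ in range(n)]
            for i in range(n):
                for j in range(n):
                    for k in range(n):
                        cell = [0, 0, 0]
                        cell[axis] = k if sign > 0 else n - 1 - k
                        cell[others[0]], cell[others[1]] = i, j
                        if tuple(cell) in solid:
                            dmap[i][j] = k
                            break
            maps[(axis, sign)] = dmap
    return maps


def saxels_from_depth_maps(maps, n):
    """Surface cells ('saxels') recovered from the six z-buffers."""
    saxels = set()
    for (axis, sign), dmap in maps.items():
        others = [a for a in range(3) if a != axis]
        for i in range(n):
            for j in range(n):
                k = dmap[i][j]
                if k is None:
                    continue  # this ray missed the object
                cell = [0, 0, 0]
                cell[axis] = k if sign > 0 else n - 1 - k
                cell[others[0]], cell[others[1]] = i, j
                saxels.add(tuple(cell))
    return saxels
```

For a solid 3x3x3 block, the six views recover the 26 outer cubes and leave the hidden centre cube out, which is the ‘opaque cubes only on the surface’ idea. A limitation of this representation is that any part of the surface none of the six views can see, such as a deep concavity, is simply missing.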

Another thing to be aware of is that on the Amiga in 1988 you could render a view from a rotated virtual camera, but it wasn’t real-time rotation like it is now. It took over a minute to render a single frame.

And how did you then come on board for Batman & Robin?

Joel Merritt: What happened was, Wendy Rogers was the visual effects supervisor for Warner Digital’s component of Batman & Robin. Warner’s contribution was the Mr. Freeze effects on people, not to be confused with the Mr. Freeze effects on Gotham City.

I was working on another movie called My Fellow Americans, and I was doing some texture mapping tricks there. It was supposed to be set at the White House, so obviously we had to do some matte paintings and so forth to make it look like the White House, and there were some tricks I was doing with texture mapping and perspective. I think that may have caught Wendy Rogers’ eye, and I started explaining the software and the technique I already had. I may have brought in a demo of the technique with the face and shown it to her, and shortly thereafter I was on the team doing this stuff – putting the software together, working with the people who were making the tracking software at Warner, and actually going on stage with a total station to record various 3D points so we could derive where the camera was.

What was the specific solution for Batman & Robin you and the team came up with?

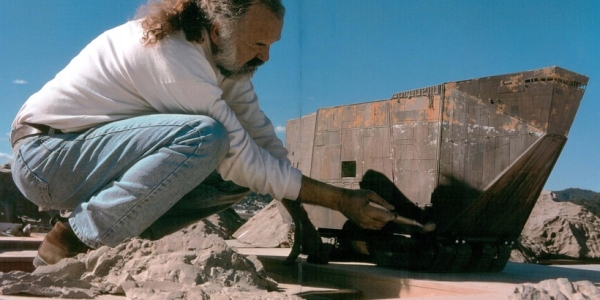

Joel Merritt: The software basically generated the actual elements of the frozen people as seen from the virtual camera. The scene was shot with three cameras filming the person being frozen. These were 35mm film cameras synchronised together so that they were in phase: not just in sync, but actually in phase, so all the shutters were open at the same time.

That way, in editorial they could go through and say, ‘Yeah, we want to freeze it right here on this frame.’ The editor, I presume in consultation with the director, would pick the frame, that was our frame, and the three frames at that time point, one from each camera, were the ones we would build the model from.

As a result, we would have a low-resolution OBJ file, a model of the frozen person. Then we had this virtual camera, and we got three texture maps, basically generated from the virtual camera, and there was a blending solution that would allow you to blend between camera A, camera B, et cetera.
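The interview does not say how the blending between camera A, camera B and camera C was weighted, so the following is only an assumed scheme in the spirit of view-dependent texture mapping: each camera’s contribution falls off with the angle between it and the virtual camera, as seen from the subject, and a virtual camera that lines up exactly with a real one uses that camera’s texture alone. The function names and the `sharpness` parameter are hypothetical, and a production solution would also need per-pixel visibility, which this ignores.

```python
import math


def angle_between(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    # Clamp to guard against rounding pushing the cosine just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (nu * nv))))


def blend_weights(virtual_dir, camera_dirs, sharpness=2.0):
    """Per-camera weights from the angle between the virtual view and each camera.

    Directions point from the subject towards each camera. Hypothetical
    inverse-angle weighting: a camera the virtual view lines up with exactly
    gets all the weight, and weights fall off as the angle grows.
    """
    angles = [angle_between(virtual_dir, d) for d in camera_dirs]
    for i, a in enumerate(angles):
        if a < 1e-9:
            return [1.0 if k == i else 0.0 for k in range(len(angles))]
    raw = [1.0 / a ** sharpness for a in angles]
    total = sum(raw)
    return [r / total for r in raw]


def blend_colors(samples, weights):
    """Weighted sum of the colours sampled from each camera's texture."""
    return tuple(sum(w * s[c] for w, s in zip(weights, samples))
                 for c in range(len(samples[0])))
```

With cameras at (1, 0, 0), (0, 1, 0) and (-1, 0, 0), a virtual camera halfway between the first two (45 degrees from each, 135 degrees from the third) gets weights in the ratio 9:9:1 at the default sharpness, so the two nearest photographs dominate and the far one contributes little.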

That was essentially how it was done, and then it was handed off downstream to the people who were working on the other elements. There was, for example, another lead TD who was working on the ice, which of course was refractive and so forth, so that was real CG. Then there was a person working on the freeze ray, a person working on the mist, and a person who put it all together.