Nexture, with some help from A.I., may well be what you’re looking for.

I was recently interviewing the team from Milk VFX about their work on Good Omens when they mentioned a tool called Nexture from France-based Cronobo VFX. It was being used to help the team achieve detailed texture maps for a couple of their CG characters. Curious, I asked Cronobo for more information about what Nexture is and what it can do.

It turns out that Nexture is a brand new tool aimed at offering up ways for artists to add minute details to CG models, and it’s using artificial neural networks in the process. The tool works by combining the neural network with a custom image synthesis algorithm to transfer details from a reference pattern bank onto CG texture maps. We asked Cronobo founder Rémi Bèges to give us the background on Nexture.

b&a: Can you give me a brief background to Cronobo and Nexture?

Rémi Bèges: Cronobo is solo-founded. I created the company two years ago after graduating with a PhD in electronic engineering and signal processing. Cronobo is based in Toulouse, best known as the home of Airbus. There is a lot of engineering talent here.

I’m not from the VFX field and the reason why all of this happened in the first place is a bit unexpected. I’ve always been programming and experimenting with image generation algorithms. The first software I ever wrote was a C++ library of pseudo-random noises (Perlin, Simplex, that sort of thing). Cronobo started as a pet project that turned out a bit more ambitious than anticipated. A few years back, I got interested in texture transfer techniques, which are very well known in the image processing field. It’s absolutely fascinating stuff. It’s the kind of problem where a human can rather easily guess a solution, but creating an algorithm to solve it is not trivial.

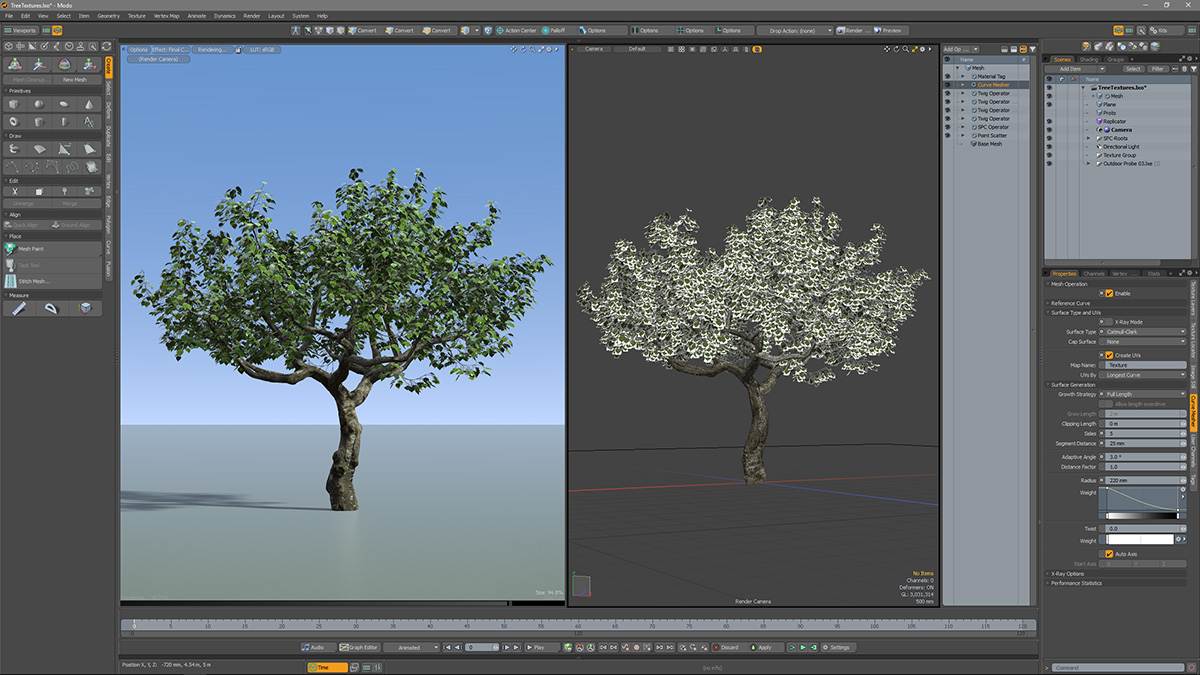

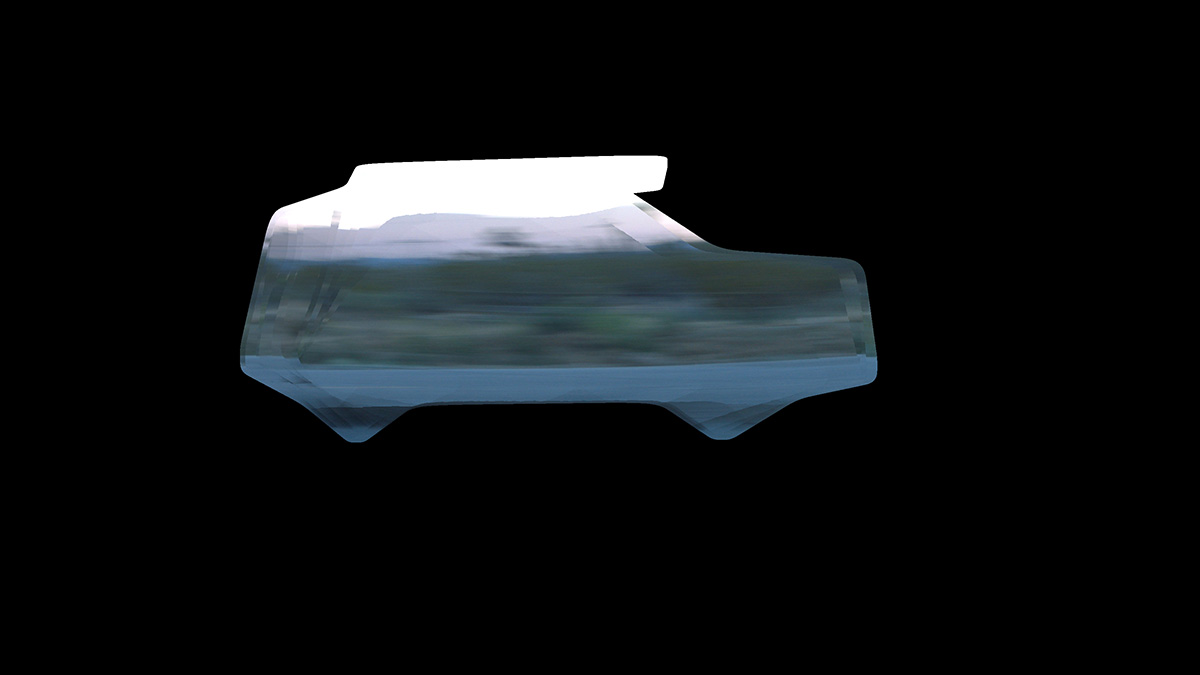

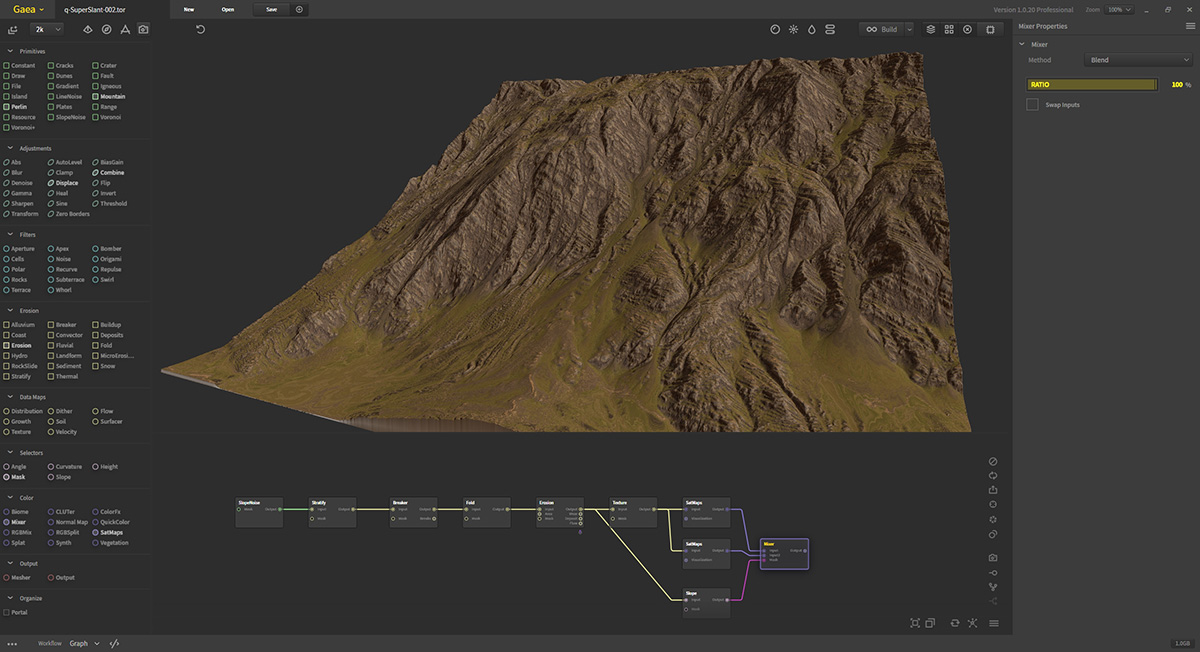

3D renders / 4K displacement courtesy of Solal Amiri Yvernat.

Texture transfer consists of taking the appearance or style of a source image, and applying it, ‘painting it’ in a sort of way, on a target image. It implies that you can separate the ‘contents’ of an image (fundamentally, the shapes, edges, etc.) from its style (its texture, overall appearance).

After trying many things out and experimenting a lot with different texture transfer approaches, I came up with a technique capable of transferring multiple style images onto selected portions of a target image. A relative working in the VFX field mentioned this was extremely interesting and might be worth pushing further. After probing things for a while I decided to quit my job, create an actual company and pursue this as a commercial venture.

The prototype was named Nexture, as in Neural-Texture or Next-Generation-Texture.

Since then, I’ve taken the company to a startup incubator in Toulouse that has a team of collaborators with various skills. They help founders get work done every week on marketing, BizDev and business topics. It frees up a lot of time for me to focus on the tech and reach a first production-ready version.

So far, approximately 40 companies have shown direct interest in Nexture, ranging from VFX studios to video game companies and third-party actors in the visual effects field. A first round of alpha trials was conducted and the results were amazing. The ergonomics were pretty bad, but they all loved the quality produced by the software.

Since Cronobo is not a company composed of VFX veterans, I have to do things a bit differently. Listening to 3D artists is paramount, and I’ve been amazed by how open-minded and helpful artists and studios have been. As such, I always focus initially on core features, and listen to artists for workflow and ergonomics. This is absolutely fundamental if artists are to enjoy the product and get something productive done with it.

b&a: What did you see as the gap in the market for Nexture?

Rémi Bèges: The way I see it, one of the current challenges in visual effects is scalable quality. On one side, it’s a highly competitive field, and VFX studio profits are tight. Margins for error are very thin, and it’s vital that projects come out on time. I come from a different field, so it’s pretty impressive to see the amount of work and the hours that teams are putting in. It’s not as common as you might think.

But on the other hand, this is artistic work, and an excellent baseline quality is required, because the audience is accustomed to it. Also, TVs and movie screens are improving quickly and keep getting larger and denser, with 4K and IMAX. So the quality of visual effects must follow the trend, and as a consequence artists work on ever larger surfaces.

Some time ago, for a pitch presentation, I calculated the equivalent surface of a texture in the real world. It turns out that the texture of a main character on average represents an equivalent surface of 4-10 tennis courts, at millimeter resolution. Sometimes way larger for main characters. That’s huge.
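To put rough numbers on that comparison, here is a back-of-the-envelope sketch. It is not Bèges's own calculation: it assumes a standard doubles court (23.77 m by 10.97 m), one texel per square millimeter, and 4096-pixel square texture tiles. The function names are hypothetical.

```python
import math

# Assumption: standard doubles tennis court, 23.77 m x 10.97 m.
COURT_AREA_M2 = 23.77 * 10.97


def texels_for_courts(n_courts, texel_size_mm=1.0):
    """Texel count needed to cover n_courts at the given texel size."""
    texels_per_m2 = (1000.0 / texel_size_mm) ** 2
    return n_courts * COURT_AREA_M2 * texels_per_m2


def tiles_needed(texels, tile_side=4096):
    """Number of square tiles (assumed 4K maps) needed to hold that many texels."""
    return math.ceil(texels / tile_side ** 2)


for courts in (4, 10):
    texels = texels_for_courts(courts)
    print(f"{courts} courts: {texels:.3g} texels, "
          f"{tiles_needed(texels)} tiles of 4096 x 4096")
```

Under these assumptions, the 4-10 court range works out to roughly 1.0 to 2.6 billion texels, or about 63 to 156 tiles at 4K.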

So, the challenge is to reach quality at scale.

And Nexture fits very nicely in this. It helps you focus on what you want your texture to fundamentally look like. Where Nexture shines the most is for adding non-repetitive details on an existing texture, in a non-destructive fashion. It removes the need to manually stitch between different patterns, which is quite time-consuming and has to be done all over again when specs change last-minute.

Nexture automatically figures out transitions between different visuals, with no quality loss and no visible seams. If tomorrow there’s one part of your detailed texture you want to change, maybe the details are too large and you want to scale them down, or the visual does not work and needs to be replaced, it’s super fast and easy with Nexture. Within 5-10 minutes you’ve got another production-ready texture.

b&a: Can you explain how it works? What kind of AI is behind it? What were some of the toughest challenges in developing it?

Rémi Bèges: Fundamentally, it’s an iterative process. It starts from random noise and changes all values at every step, until a satisfying image is obtained. The key here is how ‘satisfying’ is defined in the software, and it’s a tough beast.

There are multiple criteria. One of them defines how similar the generated map looks to the original texture. Another measures how similar the style of the generated map looks to the style of the ‘Nextures’, the images to transfer. Combining those criteria tells the optimizer how right or wrong it is for every pixel of the image, and therefore how to change the color of each pixel in the proper direction.

AI in itself is a very wide topic, but more precisely Nexture is using Artificial Neural Networks (ANN). The algorithm leverages the capability of ANNs to analyse images and extract high-quality information. It also uses some interesting properties of the neural space (as opposed to the pixel space), where simple mathematical operations become powerful image transformations. The neural nets are mainly used to calculate, in a robust fashion, how perceptually different two images are.
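The loop described above (start from noise, score the image against a content criterion and a style criterion, nudge every pixel in the direction that improves the score) can be illustrated with a toy sketch. This is not Nexture's implementation. It assumes raw color channels as a stand-in for neural network features, a Gram matrix as the style summary (a technique from published neural style transfer work), and plain gradient descent as the optimizer. All names and parameters are hypothetical.

```python
import numpy as np


def gram(features):
    """Channel co-occurrence matrix: a position-free summary of 'style'."""
    return features.T @ features / features.shape[0]


def transfer(content, style, steps=300, lr=0.05, style_weight=1.0, seed=0):
    """Toy texture transfer by gradient descent on content + style criteria.

    Assumption: features are raw color channels, not neural activations.
    """
    rng = np.random.default_rng(seed)
    h, w, c = content.shape
    n = h * w
    x = rng.random((h, w, c))                 # start from random noise
    target_gram = gram(style.reshape(-1, c))
    history = []
    for _ in range(steps):
        f = x.reshape(n, c)
        # Criterion 1: stay close to the original texture (mean squared error).
        content_diff = x - content
        content_loss = np.mean(content_diff ** 2)
        content_grad = 2.0 * content_diff / content_diff.size
        # Criterion 2: match the style statistics of the reference image.
        gram_diff = gram(f) - target_gram
        style_loss = np.sum(gram_diff ** 2)
        style_grad = ((4.0 / n) * (f @ gram_diff)).reshape(h, w, c)
        history.append(content_loss + style_weight * style_loss)
        # Per-pixel update; scaling by n keeps lr independent of image size.
        x = x - lr * n * (content_grad + style_weight * style_grad)
    return np.clip(x, 0.0, 1.0), history


# Usage: a grey ramp as 'content', a reddish noise patch as 'style'.
ramp = np.linspace(0.0, 1.0, 16)
content = np.repeat(np.tile(ramp, (16, 1))[:, :, None], 3, axis=2)
style = np.random.default_rng(1).random((16, 16, 3)) * np.array([1.0, 0.3, 0.1])
result, history = transfer(content, style)
print(f"combined loss: first step {history[0]:.4f}, last step {history[-1]:.4f}")
```

In this sketch the two criteria are simply summed with a weight. Raising `style_weight` pulls the result toward the reference statistics, while lowering it keeps the result closer to the original texture. A perceptual, network-based distance like the one Bèges describes would replace both hand-written losses.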

b&a: Where do you see Nexture working best, say in terms of realistic human skin, or more fantastical creatures, or both?

Rémi Bèges: So far, Nexture was mostly showcased for human skin or creatures, but it’s not specific to skin at all. It can be used anywhere. As long as you have textures that require intensive detailing, it’s suited for the job.

An animation studio was interested in using it for wood textures for a close-up shot, for which they suspected they’d need to re-render later from another POV (and therefore re-detail the texture of the background tree afterwards). I’m really curious to see what people will do with this. Artists will very likely come up with surprising new applications.

b&a: Can you say what studios are using Nexture at the moment and any shows we’ve seen it in?

Rémi Bèges: Good Omens is the first official production use of Nexture. Frankly, the team at Milk VFX has been incredible and the feedback they provided was invaluable. It’s amazing that they managed to use Nexture in production. At the time of the alpha, the software was, I reckon, very, very difficult to use. It did not even have an interface! They really took the time to test the software, investigate and help catch early bugs. The quality of Nexture would not be anywhere near what it is today without their help.

There are also a few folks at Pixar who are investigating integrating it into their pipelines, and many other studios in VFX, video games and VR. Nobody is up for breaking a well-established production pipeline, so it takes time, and I try to have Nexture fit as much as possible into the existing process. We’ll get there eventually.

b&a: What are your plans for Nexture right now?

Rémi Bèges: Right now, the next big milestone is finishing up the new major version of Nexture. There will be a big announcement in a few weeks, but the first big change is the release of a proper interface. A new round of trials will be offered to studios as a beta stage, to collect feedback and improve ergonomics quickly. Finally, the plan is to open up the software to freelance artists, who have been neglected thus far.

Check out more new VFX tools in our #vfxtoolsweek series, including the Eddy NUKE plugin and a new way to grow trees.