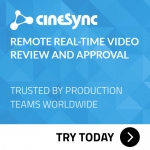

All about where that NUKE plugin for simulating gaseous phenomena came from.

You may have read a few recent VFX articles that mention software called ‘Eddy’, particularly for shots requiring smoke, fire or gaseous fluids (read befores & afters’ coverage of Avengers: Endgame and Game of Thrones season 8 for mentions of Weta Digital’s effects simulation work with Eddy, for example).

The thing is, Eddy is not a typical piece of fluid or effects simulation software.

In fact, it’s a plugin for NUKE.

Dreamed up around four years ago, Eddy, from VortechsFX Ltd, is written entirely for the GPU. That gives it some crazy-fast turnaround times and lets users iterate on sims, often at compositing time, so iterations are possible much closer to when the final shot is delivered. It essentially lets compositors treat the sims like volumetric card elements.

Weta Digital, Framestore, MPC and Digital Domain, among several other studios, are now Eddy users. We asked VortechsFX Ltd co-founder and director Christoph Sprenger all about the history of the tool, from inception to current development (you can also jump right to the end if you want to know right now where the name ‘Eddy’ comes from).

Eddy: a brief history

Christoph Sprenger (co-founder and director, VortechsFX Ltd): We’re essentially four guys – Andreas Söderström, Niall Ryan, Ronnie Menahem and me – who have been doing Eddy for about four years now.

We started Eddy as a pet project where it was like, ‘OK, let’s learn a bit about GPU programming,’ because it’s very different to what I was doing at the time at Weta Digital, where I was working on writing Synapse [Weta Digital’s volumetric effects simulation software]. I’d always done fluids on the CPU across multiple machines, but never really got into GPU programming.

I saw that graphics cards were getting more and more memory, which is important for GPU programming, especially if you want to do fluid simulations, which are notoriously memory-intensive.

So we decided, let’s just give it a go, obviously inspired by ILM’s Plume as well. It started off as a pet project, but we also wanted to try a completely different twist on everything.

The twist? NUKE

The first people I told about this were like, ‘You’re crazy. Why would you put a fluids solver into NUKE?’ The motivation was really quite simple. I started off in the ’90s, and everybody was a bit more of a generalist back in the day; you kind of had to do everything. Over the years, we really got turned into specialists. In modelling, for instance, you have hard-surface and organic modellers; in effects, you have destruction, fire and water experts. Everyone started to specialize in specific areas.

I don’t feel that is necessarily a great idea, because if there are no water effects in the project, the water specialist isn’t really required. At the same time, pipelines became firmly established around these paradigms: effects artists use the effects software, lighting artists use the lighting tools and the renderer they need to know about, and then the compositor comes at the end.

Now, volumetric effects are a bit harder to light. Volumes behave slightly differently because light scatters through them, so it can often take several iterations to get the result and the look you’re after. And if you don’t integrate the effect with the final plate from the start, it often doesn’t sit in well, as transparency can be hard to judge. The VFX supervisor might also say to you, ‘Okay, I don’t like it, I need more detail.’ Then you send it all the way back, it goes through these disciplines again, and the process repeats: ‘The effects artist does the cache, I do the lights, the VFX supervisor approves it or not, and then rinse and repeat.’

And if you think about it, that’s actually quite a costly way of working, because in the worst case you have three to four seats and different software packages involved, and you still don’t necessarily get a fast turnaround at the end where you can interactively tune something, just be an artist, and shape pixels closer to the final thing.

In fact, that’s the credo behind it: how about we move some of this work all the way to the end and simplify some of these workflows so that they’re accessible to compositors? It’s not easy to do, and I think we still have a long way to go to make it easier to use. But that was the principal idea.

Tech considerations

At the same time, another big aspect was deep compositing. Not many companies use deep compositing, because of the sheer amount of data it produces. It is even more expensive for volumetrics, because you need deep RGBA data to handle transparencies correctly; it is almost a frustum buffer in itself. So the data volume alone can be prohibitive for some. Yes, it’s a great idea, but it can be so heavy that a comp’er suddenly has to pull in 800 megabytes per frame, and that doesn’t sit well with every pipeline.
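
To make the transparency point concrete, here is a minimal Python sketch of how deep samples combine. It is not NUKE’s or Eddy’s implementation. The `DeepSample` layout, the function names, and the 2048×1080 frame with 15 samples per pixel used for the size estimate are all illustrative assumptions. Each pixel keeps a list of premultiplied RGBA samples with front and back depths. Merging two deep images pools and depth-sorts those samples, and flattening composites them front to back with the standard ‘over’ operation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeepSample:
    """One deep sample for a single pixel (hypothetical layout)."""
    zfront: float  # near depth of the sample
    zback: float   # far depth (equal to zfront for a hard surface)
    r: float       # colour channels are premultiplied by alpha
    g: float
    b: float
    a: float


def merge_deep(*pixel_sample_lists):
    """Pool the samples several deep images hold for the same pixel."""
    merged = [s for samples in pixel_sample_lists for s in samples]
    return sorted(merged, key=lambda s: s.zfront)


def flatten(samples):
    """Collapse deep samples to flat RGBA with front-to-back 'over'.

    Assumes samples do not overlap in depth; overlapping volumetric
    samples would first need splitting at each other's boundaries.
    """
    r = g = b = a = 0.0
    for s in sorted(samples, key=lambda s: s.zfront):
        t = 1.0 - a  # fraction of light still getting through
        r += t * s.r
        g += t * s.g
        b += t * s.b
        a += t * s.a
    return (r, g, b, a)


def deep_frame_bytes(width, height, samples_per_pixel, channels=6, bytes_per_value=4):
    """Uncompressed deep frame size: RGBA plus front/back depth as float32."""
    return width * height * samples_per_pixel * channels * bytes_per_value


# Smoke as two thin slabs, with a solid red CG object sitting between them.
smoke = [DeepSample(2.0, 2.5, 0.25, 0.25, 0.25, 0.5),
         DeepSample(6.0, 6.5, 0.25, 0.25, 0.25, 0.5)]
cg_object = [DeepSample(4.0, 4.0, 1.0, 0.0, 0.0, 1.0)]
print(flatten(merge_deep(smoke, cg_object)))  # (0.75, 0.25, 0.25, 1.0)
print(deep_frame_bytes(2048, 1080, 15))       # 796262400
```

The smoke slab behind the opaque object contributes nothing, because the object already blocks all remaining light. With flat, pre-rendered smoke, that holdout would have to be managed by hand. The size estimate shows how quickly uncompressed deep data reaches the 800 MB-per-frame territory mentioned above. Real deep EXR files are compressed, though, so on-disk sizes differ.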

So the idea was: how about we bypass this whole thing, render directly into your deep buffers inside NUKE as part of the rendering, and then composite live, rather than writing the deep data to disk and reading it back?

Initially, one of the considerations was whether to write something that targets high frame rates using algorithmic shortcuts, or to take high-end algorithms and see how fast we could get them on modern GPUs. We thought approximations might not have the longevity, and certain facilities want their results to be as unbiased as possible and consider that an important feature.

Some people don’t care, really. They say, ‘Please, just make it go fast.’ But certain other companies really want to raise the bar, and for them those features become important. So rather than watering things down, we relied on the fact that if you write the right set of algorithms, ideally they get faster as better GPUs come out.

The first incarnations of Eddy

We really didn’t show it to anybody at first. We just kept going. We were flying under the radar for quite some time and we were pretty confident in what we were doing.

What we said to ourselves was, ‘It needs to be done from start to end properly,’ because if you just do the fluid solver, that’s one part, but then if you can suddenly also render those very quickly and you can do this as part of a compositing pipeline it becomes a lot easier to sell the message.

Once we had that, we showed it to Weta Digital and they were really supportive of the idea and that was kind of a real morale boost for us. They did a lot of testing there and they started using it in production.

There are a lot of little things that you don’t necessarily want to bog down effects artists with, whether it’s chimney smoke in the background or other small elements that aren’t your superhero spaceship explosion, which needs to be meticulously crafted and simulated until your CPU burns down. So you just really want to give the visual effects supervisor the flexibility of saying, ‘Can I just quickly get this element?’ without it having to be sent through the pipeline.

I think the artistry is very important, and the best artists I’ve seen are the ones who have made a lot of mistakes in their careers by simulating with the wrong settings over and over again until they get the right intuition. With things like fluids, you don’t learn by reading a manual; you learn by making a lot of mistakes and running the wrong settings until you figure it out and develop an intuition for it.

The best artists I’ve seen are the ones who have that intuition, and you really only get that by making mistakes. In essence, the faster you can make them, the more quickly you might become a better artist. So one of the key motivations behind Eddy was to use GPUs to let you iterate as quickly as possible. It’s nowhere near real-time, but it’s fast enough that, compared to other systems, you can make a lot of iterations very rapidly.

Eddy’s strengths and limitations

The main limitations of Eddy are, well, some limitations around NUKE’s 3D viewport. I’m glad it’s there, but compared to what Houdini, Maya or other 3D packages give you, it could definitely use some more work. So if you’re a 3D artist who wants to use it, you might find it a bit of an ‘Oh wow, this feels awkward at first…’ experience.

For me, from an effects and simulation point of view, memory is a big deal. Graphics cards are pretty massive now: you can get a GPU with 48 gigabytes of RAM, which is pretty insane. Eddy also supports linking multiple GPUs together, sharing their RAM for certain areas like simulation.

But most people can’t afford those cards, so with a standard or lower-end card you might not be able to run your super-large, planet-scale explosion. That said, Eddy is more focused on covering areas like smaller-scale effects.
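
Some back-of-the-envelope arithmetic makes the memory pressure Sprenger describes concrete. The Python sketch below assumes a dense grid storing six float32 fields per voxel (three velocity components, density, temperature and pressure). That layout and the function names are illustrative assumptions. A real solver also needs scratch buffers and may use sparse grids, so actual limits will differ.

```python
GIB = 2 ** 30  # bytes in a gibibyte


def grid_bytes(n, channels=6, bytes_per_value=4):
    """Memory for a dense n x n x n grid storing `channels` float32 fields."""
    return n ** 3 * channels * bytes_per_value


def max_cube_side(budget_bytes, channels=6, bytes_per_value=4):
    """Largest n whose dense n^3 grid fits within `budget_bytes`."""
    n = int((budget_bytes / (channels * bytes_per_value)) ** (1 / 3))
    # Correct for floating-point error in the cube root.
    while grid_bytes(n + 1, channels, bytes_per_value) <= budget_bytes:
        n += 1
    while n > 0 and grid_bytes(n, channels, bytes_per_value) > budget_bytes:
        n -= 1
    return n


print(grid_bytes(512) / GIB)    # 3.0
print(max_cube_side(8 * GIB))   # 710
print(max_cube_side(48 * GIB))  # 1290
```

Memory grows with the cube of resolution, so doubling the grid resolution needs eight times the memory. This is why a 48 GB card, or several cards pooling their memory, matters so much for large sims. For simplicity, the sketch treats 48 GB as 48 GiB.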

Eddy does things like direct volume rendering. For instance, you can render implicit functions directly without voxelizing anything. So you can craft fancy fractals and render them directly without the renderer having to build up a frustum buffer or anything. That means clouds and other things can be resolved in really high detail without first going into, let’s say, a VDB cache. That was a big thing for us: we wanted to do volume rendering the hard way, rather than saying we can only render caches stored in voxelized structures. The idea was that you can express everything as a math function, or add math functions to existing voxel caches, and volumetrically composite everything together.
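
As a rough illustration of rendering ‘the hard way’, the sketch below ray-marches a density defined as a plain Python function. Nothing is ever voxelized: each sample point along the ray evaluates the function directly. The soft-sphere density, the constant ambient light, the emission-absorption shading model and the function names are simplifying assumptions for illustration. A production renderer would also model light scattering, which this sketch ignores.

```python
import math

Vec3 = tuple[float, float, float]


def sphere_density(p: Vec3, center: Vec3 = (0.0, 0.0, 0.0),
                   radius: float = 1.0, peak: float = 2.0) -> float:
    """Hypothetical implicit density: peaks at the centre, zero outside radius."""
    return peak * max(0.0, 1.0 - math.dist(p, center) / radius)


def march(density, origin: Vec3, direction: Vec3, t_max: float,
          steps: int = 256, albedo: float = 0.8, light: float = 1.0):
    """Ray-march a density function evaluated on the fly (no voxel grid).

    Emission-absorption model with constant ambient light; `direction`
    is assumed to be unit length. Returns (radiance, alpha).
    """
    dt = t_max / steps
    transmittance = 1.0
    radiance = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt  # sample at the midpoint of each step
        p = tuple(o + t * d for o, d in zip(origin, direction))
        sigma = density(p)
        if sigma <= 0.0:
            continue
        step_trans = math.exp(-sigma * dt)  # Beer-Lambert attenuation
        radiance += transmittance * (1.0 - step_trans) * albedo * light
        transmittance *= step_trans
    return radiance, 1.0 - transmittance


def combined(p: Vec3) -> float:
    """Volumetric 'composite' of two implicit shapes by adding densities."""
    return sphere_density(p) + sphere_density(p, center=(0.5, 0.0, 0.0), radius=0.5)


radiance, alpha = march(sphere_density, (0.0, 0.0, -2.0), (0.0, 0.0, 1.0), 4.0)
print(round(alpha, 4))  # 0.8647, i.e. 1 - exp(-2) for this sphere
```

Because the density is just a function, adding two functions (as `combined` does) composites two volumes without resampling either onto a grid. The same addition could in principle layer a math function over a lookup into a voxel cache, which is the idea described above.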

Learning to use Eddy

There’s a learning curve to Eddy, and it can be steeper depending on which areas you’re looking at. We didn’t want to water the system down, in case you’re a very senior artist who says, ‘That’s great, but I want to do more.’ So we approached it this way: ‘Okay, it needs to do all these things, and then we’ll find better ways of packaging things up.’

I’ve seen really good use cases where senior TDs with a strong effects background package things up. In NUKE, for instance, you can make gizmos, where you collapse an entire network into a new node, a macro, and only expose the few settings you want. For us, there’s still a lot of work to be done in identifying which areas are hard to use and making them simpler.

We did a big rework going from version 1 to version 2, and Eddy 2 was really about making the engine as standalone as possible. It’s obviously integrated into NUKE, but you can also instantiate it outside NUKE, or script it up and integrate it into custom pipelines. The NUKE plugin itself was written entirely with the public API that we ship. We put a lot of effort into this because we know certain companies are really keen on customizing things and having everything opened up behind the scenes, so they can get in there, extend it and script custom things.

Why ‘Eddy’?

So, yes, we called it Eddy because an eddy is all about the vortex of a fluid, like a swirl of wind. Some people think it’s a reference to the older Softimage compositing tool called Eddie. But our Eddy is about trying to stir things up. It’s a countercurrent, essentially.

Without being overly philosophical about it, we knew that it stirs the pot a bit and runs against the streamline of where everybody else is going; we wanted to do something slightly different. It’s nice to see that it helps in the areas we thought it would be good for. Time will tell whether people use it more and more, but the hope is that this concept of working closer to the final pixel makes more sense for VFX artists.

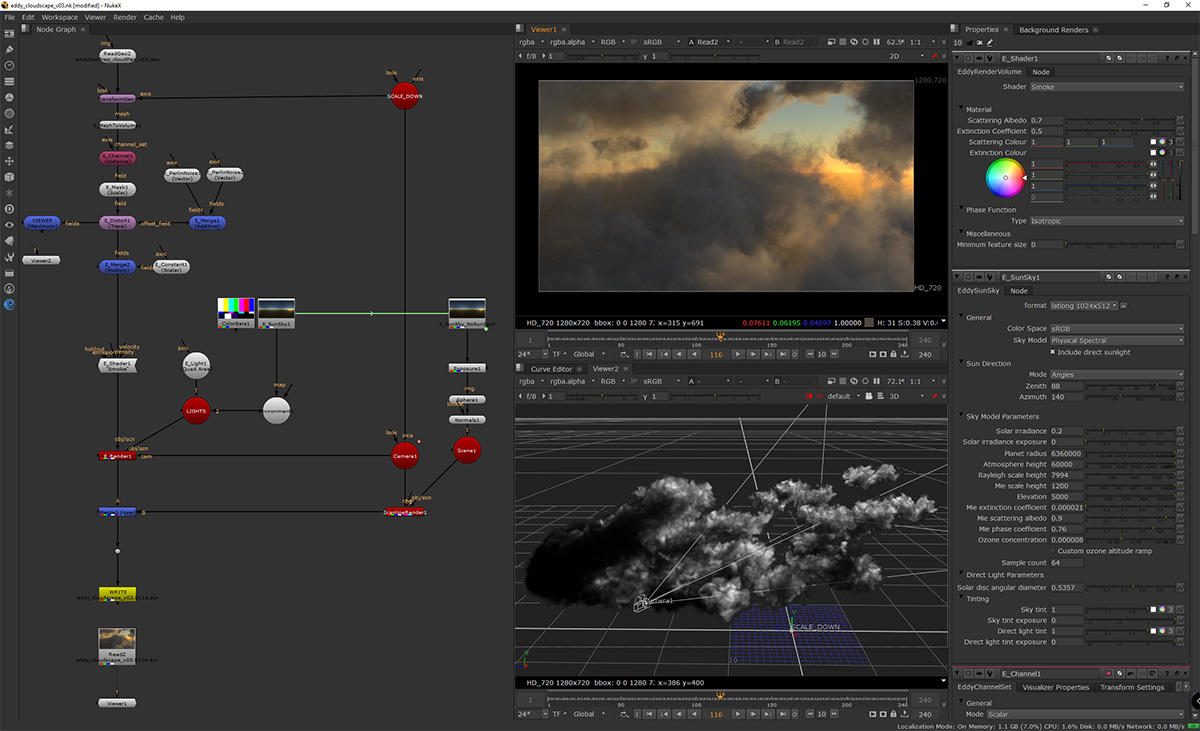

Check out more new VFX tools in our #vfxtoolsweek series. Coming up: making worlds, making characters, making trees.
