Where did those chrome balls used in VFX come from?

Important note: In this VFX Firsts podcast, the film that is suggested to be the first to use chrome balls was not, in fact, the first to use chrome balls. I apologize for this error.

The initial topic of the podcast was perhaps a conflation of two ideas related to chrome balls and HDRIs, and a more accurate description of the recorded podcast would be: ‘What was the first film to use a chrome ball for HDRI lighting?’

Further details have since been added below on two questions: which film was the first to use a chrome ball, and when were chrome balls first used for HDRI lighting? I’ve included a comment from ILM’s John Knoll explaining his early use of a chrome/gray ball set-up, and also extensive notes from Paul Debevec on his own research into reflection mapping and his key role in the advent of using chrome balls for HDRI lighting.

Chrome balls. Mirror balls. Light probes. They go by many names, but they all do pretty much the same thing: aid in capturing the lighting conditions on set and in re-creating those conditions on your CG character or CG environment.

What’s more, if you shoot the reflection in one of those balls at multiple exposures, you can combine the images to form a panoramic HDRI that can be used for reflections or image-based lighting (IBL).
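To make the multiple-exposure idea a little more concrete, here is a minimal sketch of how bracketed samples of a single pixel might be merged into one relative radiance value. It assumes the pixel values have already been linearized to the 0 to 1 range (real cameras need a response curve recovered first), and the names `hat_weight` and `merge_exposures`, along with the 0.05 and 0.95 thresholds, are illustrative choices rather than anything from a specific tool.

```python
def hat_weight(value, low=0.05, high=0.95):
    """Trust mid-range pixels most; give no weight to near-black or clipped ones."""
    if value <= low or value >= high:
        return 0.0
    mid = 0.5 * (low + high)
    half = 0.5 * (high - low)
    return 1.0 - abs(value - mid) / half


def merge_exposures(pixels, exposure_times):
    """Merge one pixel's bracketed samples into a single relative radiance value.

    pixels: linear values in [0, 1], one per exposure (assumed already linearized).
    exposure_times: shutter times in seconds, in the same order as pixels.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for value, seconds in zip(pixels, exposure_times):
        w = hat_weight(value)
        # Dividing by exposure time puts every sample on the same radiance scale.
        weighted_sum += w * (value / seconds)
        weight_total += w
    if weight_total == 0.0:
        # No trustworthy sample. If everything is blown out, fall back to the
        # shortest exposure; otherwise fall back to the longest one.
        clipped = min(pixels) >= 0.95
        best_time = min(exposure_times) if clipped else max(exposure_times)
        return pixels[exposure_times.index(best_time)] / best_time
    return weighted_sum / weight_total


# A bright highlight: clipped in the long exposure, usable in the short one.
highlight = merge_exposures([0.5, 1.0], [0.01, 0.1])
```

Run over every pixel of the ball photographs, this kind of merge is what lets the final image hold both the dim walls of a set and the far brighter light sources in one file.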

It should be noted, of course, that chrome balls can be used for reflection maps and lighting reference without HDR IBL (i.e. High Dynamic Range Image-Based Lighting), and HDR IBL can be done without chrome balls, since the HDR panoramas can also be shot with fisheye or regular lenses and then stitched.

So, where did this use of chrome balls in visual effects come from? And what was the first film to use them? When, also, were they first used for HDRI lighting?

This week on VFX Firsts, I look to answer some of those questions with expert guest Jahirul Amin, founder at CAVE Academy, and a former VFX trainer at DNEG.

There are major spoilers in these show notes (which now include a whole bunch of new info not directly discussed on the podcast), but you can listen first to the podcast directly below. Also, here’s the RSS feed.

1. Paul Debevec’s research: a starting point and much more

A first stop on the journey into the history behind chrome balls, particularly in relation to how they are now used for HDRI lighting, needs to be Paul Debevec’s insights into reflection mapping.

At that link, you’ll find Debevec’s own notes about early reflection mapping which he discovered was developed independently in the early 1980s by Gene Miller working with Ken Perlin, and also by Michael Chou working with Lance Williams. For example, at NYIT, it was Michael Chou who carried out the very first experiments on using images as reflection maps. For obtaining the reflection image, Chou used a ten-inch ‘Gazing Ball’. This was a shiny glass sphere with metallic coating on the inside. Most people will have previously known these as lawn ornaments.

Gene Miller used a three-inch Christmas tree ornament, which was held in place by Christine Chang while he took a 35mm photograph of it. Gene Miller provided Debevec with extensive notes about this.

Debevec’s image based lighting and HDRI research then began to ramp up. See this link relating to IBL research showcased at SIGGRAPH ’98. Debevec’s Fiat Lux from SIGGRAPH ’99 is another key milestone in this history. Of course, Debevec has continued his IBL research in so many ways, and many people will be familiar with the Light Stage. Here’s Debevec’s original SIGGRAPH ’98 HDR/IBL talk:

Since this podcast was recorded, Debevec has now provided these extensive notes to befores & afters about his own research:

What my SIGGRAPH 98 paper tried to contribute was to show that HDR spherical panoramas — shot from a chrome ball as one example of a light probe — can be used as the entirety of the lighting on a CGI object using image-based lighting. It provided a unified way to record both direct and indirect illumination sources, and to simulate every type of reflectance property an object can have: diffuse and specular reflections, self-shadowing, subsurface scattering, interreflections, and the shadows and bounce light and caustics that an object may cast onto the environment. My paper showed a new way that chrome balls can be even more valuable for lighting and compositing, and it provided the first follow-the-steps-and-it-works process I know of that reliably lights a CGI object the same way it would have looked as if it were really in the scene.

Debevec also makes several other crucial observations in this history, and we are incredibly grateful to be able to publish them. See below:

- Chrome balls were introduced for reflection mapping in the research world in the 1980’s, and that’s great you include my reflection mapping history in your article. Director Randal Kleiser remembers seeing the blobby dog from Gene Miller’s reflection mapping experiments at SIGGRAPH, and that helped inspire the metal morphing spaceship’s reflection-mapped appearance in 1986’s Flight of the Navigator. Randal recalls that this, in turn, helped inspire James Cameron to conceive of the metal morphing T-1000 in Terminator-2. They didn’t use chrome balls though, the reflection maps were pieced together from imagery in the background plates.

- I recall from a San Francisco SIGGRAPH chapter presentation that Blue Sky studios used a mirror ball for reflection maps on the cockroaches in Joe’s Apartment which came out in 1996. And I’m pretty sure that a more exhaustive search would come up with more examples of chrome balls being used to record reflection maps in feature films in the late 1980’s and/or early 1990’s, since the technique was published at SIGGRAPH in the early 1980’s and pretty well known after then.

- The early applications of using chrome balls for reflection mapping in films weren’t examples of modern-day HDRI lighting capture or image-based lighting simulation. They took the literal image from the mirror ball and texture-mapped it onto the CGI object based on the surface normal to produce the appearance of a specular reflection. It didn’t record the full range of the lighting, or record the proper colors and intensity of direct light sources, or simulate the full range of reflectance properties an object can have, or simulate how an object photometrically interacts with its environment.

- I think the main contributions of my SIGGRAPH 98 paper are 1) shooting full dynamic range, linear-response, fully spherical images, using HDR images of a mirror sphere as one of the ways to record the full field of view. I called such a device for recording such an HDRI map a “light probe”. And the other main contribution was 2) to show that such a spherical HDRI image can be used as an image-based light source in a global illumination rendering system, and can thus accurately light a CGI object with the light of a real-world scene, including all of its various reflectance properties. Some other key contributions were 3) to map the HDRI map onto a rough 3D model of the environment around the object to better simulate the directions the light is coming from and 4) the Differential Rendering technique to cast accurate shadows (and other lighting effects including indirect light and caustics) onto the scene due to the presence of the inserted object.

- I think there were two separate kick-offs for innovation: the original reflection mapping innovation which started in the late 1970’s / early 1980’s and got the VFX industry using mirrored spheres for reflection mapping, and the HDR Image-Based Lighting contributions from my SIGGRAPH 98 paper and films Rendering with Natural Light and Fiat Lux, which showed you could do a lot more with images from a Chrome Ball in terms of lighting.

- The Campanile Movie actually didn’t use any HDRI lighting or mirror spheres, it was pure image-based rendering, and came out before my 1998 SIGGRAPH paper on image-based lighting. Shooting the photos for it did make me wish that my (film) camera shot in higher dynamic range, and I was starting to wonder how I could realistically composite new objects into the scene.

- The first place where a lot of people saw HDRI lighting in action was when “Rendering with Natural Light”, lit by the light of the UC Berkeley Eucalyptus Grove, was shown at the SIGGRAPH 98 Electronic Theater. My Berkeley friends Carrie Wolberg and Michael Malione told me my film lit up ILM’s internal discussion board, and that got me my first ILM speaking invitation in September 1998. My work was something ILM was able to consider as they developed the lighting and shading techniques used on Pearl Harbor, and their ambient occlusion approximations to full HDR image-based lighting were designed to get a reasonably similar result with less computation. Nowadays, full HDR IBL is used more commonly since it can reproduce all of the proper effects of lighting and shading, especially in terms of the detail in self-shadowing and cast shadows.

- The use of a diffuse sphere in addition to a chrome sphere for lighting capture is also interesting. It’s another useful form of lighting reference and I liked John Knoll’s half-and-half sphere (see below) when I heard about it a few years ago, though I’m not sure how they used the two images in practice. I started including diffuse spheres in my lighting capture experiments at Berkeley in 1999 around when we were making Fiat Lux, with the idea that the appearance of the lighting on a diffuse sphere could be used to recover clipped highlight information on a mirror sphere. I completed this work at USC ICT and I presented this new process “Capturing Light Probes in the Sun” in our SIGGRAPH 2003 course on Image-Based Lighting and in the Image-Based Lighting chapter of the HDRI book. (You can also reconstruct the sun color and intensity on a clipped chrome ball image from the appearance of a neutral square on a color chart, if you know its orientation into the lighting environment.) In 2012 I showed a way to mash up a mirror ball and enough of a diffuse ball to recover multiple clipped light sources in “A Single-Shot Light Probe”. Richard Edlund thought it was cool.

- Something that traditional chrome balls and HDRI lighting capture falls short of is recording the full spectrum of the incident illumination, which can be helpful for getting the right color rendition of the lighting when combined with Spectral Rendering. Back in 2003, we shot a multispectral HDR panoramic light probe image by placing a spectroradiometer on a pan/tilt rig during the work for our EGSR 2003 paper. And later at SIGGRAPH 2016, we showed that by including one or more color charts with a mirror ball, you can derive the necessary spectral information about the incident lighting to properly drive 6-channel LED lights in a virtual production system. (The three-channel RGB LEDs in most LED panels result in terrible color rendition.) This makes it possible to reproduce an actor’s appearance in any real lighting environment without having to perform any color-correction in post.
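As a rough illustration of the differential rendering idea Debevec lists as contribution 4 above, here is a minimal per-pixel sketch. It is one common way of expressing the technique, not Debevec’s own implementation, and it assumes you already have three aligned values per pixel: the photographed plate, a render of the modeled local scene with the CG object, and a render of that same local scene without it. The function name and the 0-or-1 `object_mask` are illustrative.

```python
def differential_composite(background, with_object, without_object, object_mask):
    """Composite one pixel channel using differential rendering.

    background:     the photographed plate value
    with_object:    render of the modeled local scene plus the CG object
    without_object: render of the modeled local scene alone
    object_mask:    1.0 where the CG object covers the pixel, else 0.0
    """
    # Away from the object, add only the change the object causes
    # (shadows darken the plate, bounce light and caustics brighten it).
    changed_plate = background + (with_object - without_object)
    # Where the object itself is visible, use the render directly.
    result = object_mask * with_object + (1.0 - object_mask) * changed_plate
    return max(result, 0.0)


# A plate pixel that falls inside the CG object's shadow gets darker.
shadowed = differential_composite(0.8, 0.3, 0.5, 0.0)
# A plate pixel the object does not affect is left as photographed.
untouched = differential_composite(0.8, 0.5, 0.5, 0.0)
```

The appeal of working with the difference is that errors in the rough model of the local scene largely cancel out, so the plate keeps its photographed detail wherever the object has no influence.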

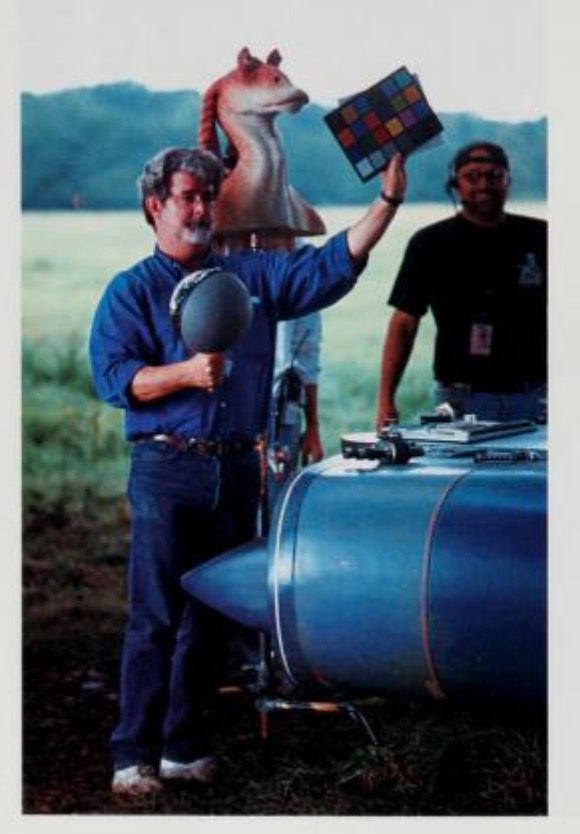

2. John Knoll’s gray/chrome spheres

Although we do not deal with this in the podcast, ILM’s John Knoll utilized a gray/chrome sphere set-up as early as 1996’s Star Trek: First Contact. Below, I have replicated a comment Knoll made on the original post, explaining his set-up, which was used in an even more significant way on The Phantom Menace (1999):

I had the first gray and chrome sphere made for plate shooting on Star Trek: First Contact.

I was inspired by something I had seen Dennis Muren do on Casper. For every setup they shot a matte white sphere as a lighting reference.

I thought that was a really good idea, but felt that adding a chrome sphere would let you extract reflection environments and see more specifically how the set was lit. I also thought that a black shiny sphere would capture the overexposed peaks that would be blown out in the chrome sphere and better help you locate them. I talked this idea over with Joe Letteri who rightly pointed out that the white sphere would overexpose too easily and that a middle gray sphere would give me the same information with better range.

Thinking through how this would work on set (and how I would carry this to set) I decided that a single sphere with a matte gray side and a chrome side would be less unwieldy than three separate spheres, and if the single sphere could split apart into its component hemispheres and nested together, the whole thing could be pretty easily portable.

ILM’s model shop built me the first one using a blown plexiglass hemisphere from Tap Plastics, and a chromed steel wok purchased from a local cooking supply store.

These references proved really valuable to me in post and helped us do somewhat more accurate lighting.

My next show was Star Wars Episode 1, and I made shooting the gray and chrome sphere a standard part of every VFX setup. Dan Goldman wrote a nifty little tool that we used on Episode 1 that unwrapped an image of a chrome sphere into a latlong that we could use directly when rendering shiny objects.

All of the other ILM shows at the time took up the same practice, and the spheres became part of the standard location plate kit.

I suspect that PR images from Episode 1 or maybe word of mouth from ILM artists spread this idea across the industry. As you note, they’re now everywhere.
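Knoll mentions a tool that unwrapped a chrome sphere image into a latlong. The sketch below is not that tool; it is a minimal illustration of the geometry such an unwrap can rely on, under some loud assumptions: the ball image is square and tightly cropped, the camera is far enough away to be treated as orthographic, and sampling is nearest-neighbour. All function names here are hypothetical.

```python
import math


def latlong_to_direction(x, y, width, height):
    """World direction for the centre of latlong pixel (x, y); +z points at the camera."""
    lon = ((x + 0.5) / width) * 2.0 * math.pi - math.pi   # -pi..pi, 0 = toward camera
    lat = math.pi / 2.0 - ((y + 0.5) / height) * math.pi  # +pi/2 at the top row
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def direction_to_ball_uv(direction):
    """Point on the ball image, in [-1, 1] disk coordinates, that reflects `direction`."""
    rx, ry, rz = direction
    # The mirror normal bisects the reflected direction and the direction
    # from the ball back to the camera, which is (0, 0, 1).
    nx, ny, nz = rx, ry, rz + 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length < 1e-9:
        return None  # Directly behind the ball: never seen in the reflection.
    return (nx / length, ny / length)


def unwrap_ball(ball, width, height):
    """Resample a square ball image (a list of pixel rows) into a latlong image."""
    size = len(ball)
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            uv = direction_to_ball_uv(latlong_to_direction(x, y, width, height))
            if uv is None:
                row.append(None)
                continue
            # Nearest-neighbour lookup; image rows run top to bottom, so flip v.
            col = min(size - 1, int((uv[0] * 0.5 + 0.5) * size))
            line = min(size - 1, int((0.5 - uv[1] * 0.5) * size))
            row.append(ball[line][col])
        out.append(row)
    return out
```

One thing the geometry makes clear: directions behind the ball are squeezed into a thin ring at its edge, which is part of why a single ball photograph gives poor detail there.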

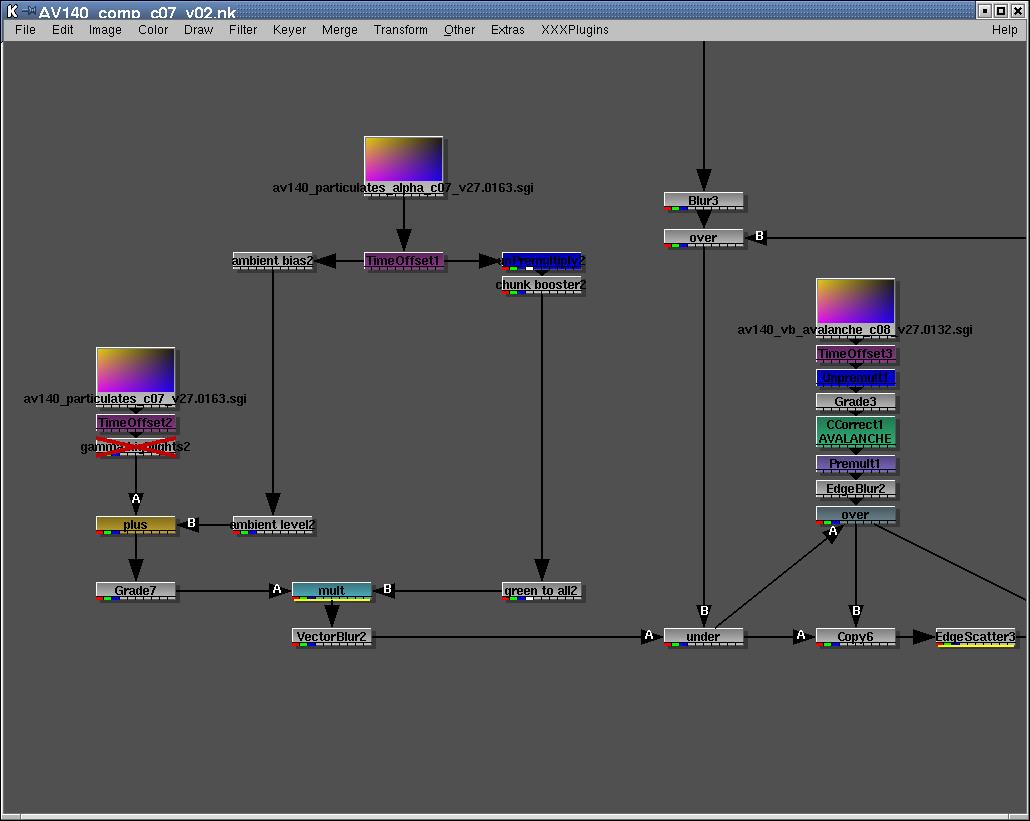

3. X-Men (2000)

The VFX shots done for X-Men (2000) by Digital Domain (VFX supe: David Prescott) appear to be the first time a feature film VFX workflow was directly influenced by Paul Debevec’s HDR Image-Based Lighting work, that is, the 1998 SIGGRAPH paper which used chrome balls as light probes to capture HDRI maps for Image-Based Lighting. Debevec provided befores & afters with these notes on Digital Domain’s work for the movie:

At Digital Domain, Sean Cunningham used the on-set mirror ball to light the Senator Kelly melting death sequence, using a paint program to guess what the shorter exposures would look like. And the late, great Andy Lesniak used image-based lighting techniques to illuminate the Statue of Liberty from some panoramic images at the end, creating light source rigs from the panoramas. VFX Sups Mike Fink working with Frank Vitz would go on to apply improved IBL techniques in X-Men 2 (2003) for the Mystique transformations.

Here’s a Digital Domain breakdown reel for the Senator Kelly death (note lighting reference at .45sec):

David Prescott, DD’s visual effects supervisor on X-Men, explains the approach in detail:

We were sharing ideas with Paul Debevec about Image Based Lighting and how we could capture the data at Digital Domain. There were a few different people who were capturing gray balls and chrome ball data as reference but at that time there was a lot of varied ideas on how best to capture it on the day and how to apply it after.

[Production VFX supervisor] Mike Fink was shooting gray balls and chrome balls at our request when we were not on set. But the push to shoot them in every reference came from us. I took a gray ball and chrome ball up to Toronto with us and started capturing everything we could with it in the hope that we’d have the rest of how to apply it to a lighting setup fully by the time we wrapped shooting.

It was a challenge at the time to get the time on most sets to experiment with how to capture them so some were multiple exposures and some were single exposures. A lot of X-Men work was not really ideal for what we were capturing, the Statue of Liberty exteriors at night etc. But Senator Kelly’s death seemed ideal. On the day we had so many elements to capture with Mike Fink to piece the shot back together, the water bag, sprays etc.

The balls were shot a few different ways: multi-exposure, different angles and such. In the end, Sean Cunningham found an image set that seemed to best suit the setup on the day and built his setup from that. That shot was the first time Digital Domain had lit a shot using lighting gathered from a chrome ball, or any real HDR image based lighting. I think there was a commercial at DD that was released while we were working on X-Men that used it, too. So it was the first shot in a feature that we did.

And Sean Cunningham also reminisced with befores & afters about this work (and more):

I had seen Paul’s Campanile film like so many others and marveled at the possibilities of using the technique in the future. When I started R+D on this shot, as luck would have it, for the Senator Kelly shot, the VFX Sup had a large chrome sphere that he shot in the spot where the actor would be laying. It was a motion control shot with several passes (clean, with actor, with light probe, with a practical water splash gag, etc.).

I was also testing a closed beta of VEX Mantra, the first version of SESI’s renderer with programmable, Renderman-like shading. It was REYES-style micropolygons with optional raytracing, which was very exciting. And all our workstations were upgraded to dual-Xeon systems. So we finally had machines fast enough to put raytracing into production. I think if all three of those events had not been true we wouldn’t have taken the gamble.

What’s funny though is my shader lead (Simon O’Connor) and I discussed a similar technique, in Renderman, using 6-pack environment maps, for my Cab Chase + Leeloo Escapes flying traffic system. Indexing pre-convolved environment maps based on normal direction rather than reflection to compute a soft diffuse pass based on photographs taken on the stage of the miniature set + painting.

We were just so under the gun trying to make all the tweaks and handles for Mark Stetson and Luc Besson to take control of everything down to the scratches on an individual car that we just didn’t think we had enough R+D time to prove it would work for the whole sequence. It didn’t have a cool name at the time, but as Simon was describing how it would work I knew I wanted to try that out and I was actually experimenting with doing just that in Renderman before we shifted to VEX Mantra, while between projects, based on remembering what Simon and I had talked about and having seen Paul’s film.

That year Doug Roble asked me to present my technique at the technical sketch he chaired at Siggraph and Paul used the same materials as part of his panel on IBL across the hall, and then I also demonstrated the setup in the SESI booth to show off the new VEX Mantra.

It was a busy week. That was the same week that Marcos Fajardo made his big splash with Arnold and the “Pepe” films, and he sold a fork to Station-X for Project:Messiah. As much as I was proud of what I’d done I was pretty amazed, like everyone else, at what was seen in those Pepe films. I had to fake my soft shadows using a dome array of 256 shadow-mapped lights colored by the light probe images where he was doing real indirect.

Cut to just a couple years ago, I start getting all these messages from friends congratulating *me*, and I’m like what’s going on. Turns out Paul gave me a special thanks at his acceptance speech when SMPTE honored him. I was super touched that he even remembered this shot from so long ago given all the amazing places his research has gone since.
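Cunningham’s description of a dome array of 256 lights colored by the light probe images can be illustrated with a small sketch. This is not his set-up: how he positioned the lights is not stated, so the Fibonacci-spiral layout here is purely an assumption, the shadow maps are left out entirely, and `sample_probe` is a hypothetical callback standing in for a lookup into the unwrapped probe image.

```python
import math


def fibonacci_dome(count):
    """Roughly even unit directions over the upper hemisphere (y >= 0)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    directions = []
    for i in range(count):
        y = (i + 0.5) / count              # even steps in height give even area
        radius = math.sqrt(1.0 - y * y)
        angle = i * golden_angle
        directions.append((radius * math.cos(angle), y, radius * math.sin(angle)))
    return directions


def build_light_rig(sample_probe, count=256):
    """One directional light per dome direction, coloured by the probe in that direction."""
    return [(d, sample_probe(d)) for d in fibonacci_dome(count)]


def diffuse_shade(normal, lights):
    """Lambertian response (albedo 1) of a surface with unit `normal` under the rig."""
    total = [0.0, 0.0, 0.0]
    for direction, colour in lights:
        cosine = max(0.0, sum(n * d for n, d in zip(normal, direction)))
        for c in range(3):
            total[c] += colour[c] * cosine
    # Each light stands in for an equal share of the hemisphere's 2*pi steradians.
    solid_angle = 2.0 * math.pi / len(lights)
    return tuple(channel * solid_angle / math.pi for channel in total)


# Sanity check with a uniform white sky: an upward-facing surface shades to about 1.0.
rig = build_light_rig(lambda direction: (1.0, 1.0, 1.0))
shade = diffuse_shade((0.0, 1.0, 0.0), rig)
```

Summing many colored lights like this only approximates the soft look of image-based lighting, which matches Cunningham’s own point that he was faking what Arnold computed as real indirect illumination.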

4. Before X-Men at DD

As David Prescott acknowledged, it turns out there was a project at Digital Domain carried out prior to the work on X-Men that utilized an HDR workflow inspired by Paul Debevec’s work. This was an Intel commercial called ‘Aliens’ overseen by director and visual effects supervisor Fred Raimondi. He shared the following with befores & afters, in relation to his use of an HDR workflow on the commercial:

I believe I was one of the first (if not the first) to use them in production. It was in a spot for Intel called “Aliens”. I don’t remember the dates, but once I saw Paul Debevec’s demo I couldn’t wait to try it. We didn’t even have a pipeline in place for doing HDRI.

We really didn’t have any tools for doing this and I believe that someone wrote a plug in for Lightwave (which was what we were using in commercials at the time) to create a lighting setup based on the HDR. I’m not sure if the software allowed for a piece of geometry to be used as an emission object for light, which is the way it’s mostly done now. I do remember that going forward, one of the frames from the commercial (I believe the one where the alien gets close to camera and you can see the whole lab reflected in his eye) was used as the cover photo for the DD internal website on how to do HDRI workflow. Good times. We’ve come lightyears since then.

5. More early uses of chrome/gray balls

Mike Fink, mentioned above in relation to X-Men, has also weighed in on his early use of chrome ball/gray balls:

The first time I used a chrome ball/gray ball on set was on Mars Attacks! in 1996. We used the tech in the desert Martian landing sequence to help with renders of the Martian saucer. But we didn’t shoot HDR images of the chrome ball, as I remember. Just individual stills.

We used chrome balls and grey balls consistently on the first X-Men. The Assistant Director, the wonderful Lee Cleary, would call out “Mr. Fink’s balls, please” at the end of every setup with VFX. We did shoot, when possible, HDR versions of chrome balls on set, but couldn’t make it a rule at that point.

For Kelly’s transformation to water, DD used the chrome ball images, and I think I remember that we derived HDR images from the single exposures of the chrome ball to create a global illumination environment that was then reflected in the water, and helped in lighting the transmogrification of Kelly into water. We were covering sets with still cameras to capture high resolution HDR images that could be turned into HDR environments for lighting, but also worked as backgrounds if needed.

All of this development was based on Paul Debevec’s work. He made it possible for us to integrate CG objects into photographed scenes with a much higher level of accuracy. Paul’s paper on “Rendering Synthetic Objects into Real Scenes” was presented at Siggraph in 1998, just a year before we were shooting X-Men. All of us were very excited about these developments.

So, yes, it was the first time that I remember planning and executing image based lighting tech. I can’t speak for other productions or supervisors, of course. There could have been something earlier than that, but it wasn’t until X2 that I used HDR images of sets and used chrome balls or fish-eye lenses and still cameras religiously. By 2006, I was using a six-camera cluster developed at R&H to capture HDR of every set as we shot. And I still covered us with chrome balls whenever we could.

6. After X-Men, and elsewhere

Paul Debevec mentioned to befores & afters that his research into HDR/IBL seemed to also be influential in the techniques that ILM developed for Pearl Harbor (2001):

They invited me in 1998 and again in 2000 to talk about HDR Image-Based Lighting in C Theater to their lighting staff, which helped lead to the ambient occlusion approximations they used to accelerate and precompute the lighting visibility computations for realistically simulating lighting on the CGI airplanes.

On this point, Ben Snow’s SIGGRAPH 2010 ‘Physically-Based Shading Models in Film and Game Production’ course notes, in which he explores ILM’s image-based lighting and physical shading over the years up until 2010, are a must-read.

The next big advance in using HDR IBL appears to have been for the Matrix sequels in 2003. Debevec, again:

They hired the second student to come out of my Berkeley group, H.P. Duiker, who had joined my team after the 1998 SIGGRAPH IBL paper to work as a technical director on Fiat Lux. As a result, they were able to spatialize the HDR lighting in the Burly Brawl courtyard in ways similar to how we spatialized the lighting inside St. Peter’s Basilica, so that the HDR lighting came from the right locations in the scene rather than from infinite environments as is done sometimes. (The original HDR IBL paper explained that the lighting should be mapped onto an approximate model of the scene, and I don’t think ever mapped the lighting onto an infinite sphere.)

7. Where to learn more about chrome balls

Why visual effects artists love this shiny HDRI ball, from Vox

CAVE Academy course

CAVE Academy wiki notes

Finally, did we get it wrong? Is there a different film you think was the first to use a chrome ball? Let us know in the comments below. Thanks for listening!

Feature image courtesy of Xuan Prada.
