Where it came from, how it was built, and why it was originally called ‘Smart Paint’.

When Foundry introduced Smart Vectors in Nuke in 2016, it was as if the company had brought a brand new kind of magic to its compositing software. Smart Vectors gave artists the ability to track images onto complex moving surfaces, doing so by generating motion vectors to push or warp an input image sequence across a range of frames.

You may have seen the results of Smart Vectors in VFX shots featuring blood spatter and wounds, or tattoo additions and removals. But where did the Smart Vector toolset – once called Smart Paint – hail from? How was it developed? And how does it form part of Nuke now? Take a look back with the Foundry team to find out.

The origins of Smart Vectors

The original idea for Smart Vectors came from Foundry’s former head of research, Jon Starck. As current head of research, Dan Ring, recounts, “Jon noticed that a lot of the ‘track & paint’ workflows for applying paint fixes were too rigid and didn’t capture local deformations like skin or clothes particularly well. From this he had been playing with the idea of using motion vectors to push paint fixes through a shot.”

Foundry’s customers had already been experimenting with similar approaches. However, notes Ring, “typically the quality of the vectors was so poor that nothing could be pushed more than a handful of frames – about 5 to 7 – before turning into a distorted ‘soup’.”

“It was when the work on improved disparity vectors for Ocula 4.0 was back-ported to NukeX’s motion vectors that we saw a significant enough jump in quality to push paint fixes much further through a shot,” continues Ring. “Well, about 20 frames at that time. This is where I joined, to figure out what was stopping us from pushing further and to see if we could make a tool out of it.”

One of the major challenges Ring identified in implementing Smart Vectors was keeping the quality of the warp as high as possible after multiple successive filters. “If you’re familiar with applying one spatial transform after another, you’ll know that if you don’t take care of how you apply the transforms, you’ll end up with a ‘softer’ image than you want. When applying vectors, each frame means a new warp, so if you’re warping over a sequence, either the warp or the image will get too soft very quickly.”

The solution to this problem came from using ST-maps, images whose red and green channels store the normalized source coordinates each output pixel should sample from. Foundry had noticed that some artists, such as Austin Meyers, were already using them to create “gnarly transform workflows in Nuke,” says Ring. “The benefit of using ST-maps is that you can transform them all day long, and it’s only when you want to apply the final transform of the ST-map that you pay the price of a single filter hit.”
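To make the filtering argument concrete, here is a toy one-dimensional sketch in Python. It is not Foundry’s implementation: the `sample`, `warp` and `compose` helpers are hypothetical, and plain linear interpolation stands in for whatever filter Nuke actually uses. A single bright pixel is shifted by half a pixel twice, once by resampling the image after each shift and once by concatenating the two coordinate maps and resampling the image only at the end.

```python
import math


def sample(values, pos):
    """Linearly interpolate a 1D list at a fractional position (clamped at the edges)."""
    pos = min(max(pos, 0.0), len(values) - 1.0)
    i = int(math.floor(pos))
    j = min(i + 1, len(values) - 1)
    t = pos - i
    return (1.0 - t) * values[i] + t * values[j]


def warp(image, st_map):
    """Resample an image through an ST-map: out[x] = image at st_map[x]. One filter hit."""
    return [sample(image, s) for s in st_map]


def compose(st_first, st_second):
    """Return one ST-map equivalent to warping by st_first and then by st_second."""
    return [sample(st_first, s) for s in st_second]


width = 10
image = [0.0] * width
image[4] = 1.0                                  # a single bright pixel
half_pixel = [x - 0.5 for x in range(width)]    # shifts content half a pixel to the right

# Approach 1: warp the image every frame (two filter hits on the image)
softened = warp(warp(image, half_pixel), half_pixel)

# Approach 2: concatenate the ST-maps, then warp the image once (one filter hit)
combined = compose(half_pixel, half_pixel)
sharp = warp(image, combined)

print(max(softened))  # 0.5
print(max(sharp))     # 1.0
```

Resampling the image twice smears the bright pixel across its neighbors and halves its peak, while the concatenated map lands it whole on its new position. The coordinate map is itself interpolated during concatenation, but because it varies smoothly, that step loses almost nothing.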

For Ring, then, ST-maps were the next eureka moment in the development of Smart Vectors. They stopped the warp from getting ‘soft’ over longer sequences and let artists push fixes roughly 40 to 50 frames through a shot. Filtering would also ultimately play a key role in pushing fixes further.

Of course, several people at Foundry besides Starck and Ring were responsible for the R&D and implementation of the Smart Vector toolset. Among them was Ben Kent, who had also been part of the team awarded an Academy Scientific and Technical Award for Foundry’s Furnace tools.

First, Smart Paint

As development of Smart Vectors continued, Foundry released its first alpha: a tool it called ‘Smart Paint’ at the time. “Initially,” relates Ring, “this was targeting fixes made in the RotoPaint node to help with sequence painting workflows, particularly for digital beauty work. It was really clunky; it had a spreadsheet-style interface for creating keyframes for each keyframe and paint stroke you wanted to pull from RotoPaint. But when you saw a paint stroke ‘stick’ to the face of someone in the image for the first time, it was really satisfying.”

In fact, the tool was originally called ‘PaintPush’ and was written entirely in Python around the end of 2014 and the start of 2015. It was later turned into a native Nuke plug-in and renamed ‘SmartPaint’ around April 2015. Says Ring: “When we’d realized that it could be useful for any fix or comp trick, we renamed it to ‘Smart Vector’ to remove the ‘paint’ focus and highlight that it was powered by motion vectors.”

“However,” adds Ring, “the ‘Smart’ part of the name was an inside joke and only meant to be a temporary name; the idea that if this node is ‘smart’ then by comparison every other node in Nuke is ‘dumb’. But by the time of release the name had stuck, and it remains the ‘smart’-est node on the menu! Thankfully we have more responsible people looking after node names now.”

Making Smart Vectors a production tool

With the early development done, work progressed on turning Smart Vectors into a robust tool. The toughest but most important part of that was making sure it ran at a reasonable speed for artists.

“That was the main reason for having to bake out all of the smart vectors in advance,” explains Ring. “The side effects of this were that it didn’t work on the render farm or frame server, so artists’ boxes were being tied up, baking out huge files to disk. After that, all of the warping had to be done on the CPU which also really chugged if you didn’t use it in exactly the right way. In addition to that the first versions of Smart Vector required some serious hacking of Nuke’s meta-data system to ensure we could pull the hundreds of baked-out vectors for all of the frames we needed. Thankfully this has since been replaced with a better system.”

Another tricky task in getting Smart Vectors production-ready was figuring out what to do with slow-moving shots, such as a slow zoom playing out over 200 frames. In early development, even though the movement was slow, the image and vectors would still start turning ‘soupy’ after a few frames, because every frame still meant another warp and another filter hit.

The fix for that issue, Ring explains, “was adding the ‘frame distance’ knob, which allowed vectors to be computed between distant frames, i.e. instead of computing between frames 1 & 2, then 2 & 3 etc., it would compute between frames 1 & 3, then 3 & 5 for a distance of ‘2’, or 1 & 9 then 9 & 17 for a distance of ‘8’. This meant that you could warp or ‘jump’ further with fewer filter hits, and less chance of ‘soup’.”
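The frame-distance idea in the quote can be sketched as a small Python helper. The function name `vector_pairs` is hypothetical, and it only lists which frame pairs would be linked; it makes no claim about how Nuke schedules the computation internally. Each pair corresponds to one warp, so counting pairs shows how a larger distance cuts the number of filter hits needed to cross the same span of frames.

```python
def vector_pairs(first, last, distance):
    """Frame pairs (a, b) that would get vectors for a given frame distance (hypothetical helper)."""
    frames = list(range(first, last + 1, distance))
    return list(zip(frames, frames[1:]))


print(vector_pairs(1, 5, 2))    # [(1, 3), (3, 5)]
print(vector_pairs(1, 17, 8))   # [(1, 9), (9, 17)]

# Each pair is one warp: frames 1 to 17 cost 16 filter hits at distance 1, only 2 at distance 8
print(len(vector_pairs(1, 17, 1)), len(vector_pairs(1, 17, 8)))  # 16 2
```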

“The problem then is if you’re ‘jumping’ from 1 to 9, then, 9 to 17, how do you get from frame 1 to say frame 12? Do you warp forward from frame 9 to 10 to 11 to 12? Or do you go from 9 to 17 and backwards to 12? Or do you do both and blend the result? Or worse, do you compute all of the paths from 1 to 12, then somehow blend them?”

To solve it, Ring began rethinking the problem as a graph, with frames as the ‘nodes’ and the vectors that jump between a pair of frames as the ‘edges’. “The idea,” he says, “was that there exists some optimal way of getting from one frame to another while minimizing the total number of warps, while also ensuring it was temporally consistent. I ended up spending months on this, filling several notebooks with thousands of test graphs, examples and counterexamples trying to figure this out.”

Ring adds, “I wanted to get a picture of some graphs of these but when I asked my wife where they were, she said she’d thrown out ‘that madness’. The answer turned out to be something elegant but also embarrassingly obvious.”
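The graph framing can be illustrated with a plain breadth-first search in Python. This is not the ‘elegant but obvious’ answer Ring mentions, which the article does not reveal; it only finds a path with the fewest warps and ignores his second requirement, temporal consistency. The graph itself is a hypothetical assumption: vectors between every pair of neighboring frames, plus long-jump vectors every eight frames, as in the 1-to-9-to-17 example. The helpers `build_graph` and `fewest_warps` are illustrative names.

```python
from collections import deque


def build_graph(first, last, distance):
    """Frames are nodes; an edge means vectors exist to warp between that pair of frames (either way)."""
    edges = {f: set() for f in range(first, last + 1)}

    def link(a, b):
        edges[a].add(b)
        edges[b].add(a)

    for f in range(first, last):                            # assumed frame-to-frame vectors
        link(f, f + 1)
    for f in range(first, last + 1 - distance, distance):   # assumed long-jump vectors
        link(f, f + distance)
    return edges


def fewest_warps(edges, start, target):
    """Breadth-first search: a path from start to target that crosses the fewest edges (warps)."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        frame = queue.popleft()
        if frame == target:
            break
        for nxt in sorted(edges[frame]):
            if nxt not in previous:
                previous[nxt] = frame
                queue.append(nxt)
    if target not in previous:
        return None
    path = [target]
    while previous[path[-1]] is not None:
        path.append(previous[path[-1]])
    return path[::-1]


graph = build_graph(1, 17, 8)
print(fewest_warps(graph, 1, 12))  # [1, 9, 10, 11, 12]
```

In this toy graph, reaching frame 12 takes four warps via the jump to frame 9, against eleven if you step frame by frame. Real paths would also have to agree from one output frame to the next, which is exactly the part this sketch leaves out.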

Where you’ve seen Smart Vectors in action

VFX studios have adopted Smart Vectors in many innovative ways, using the toolset to help with large-scale visual effects shots as well as plenty of seamless invisible effects.

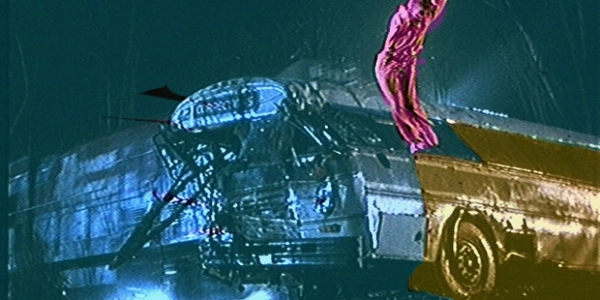

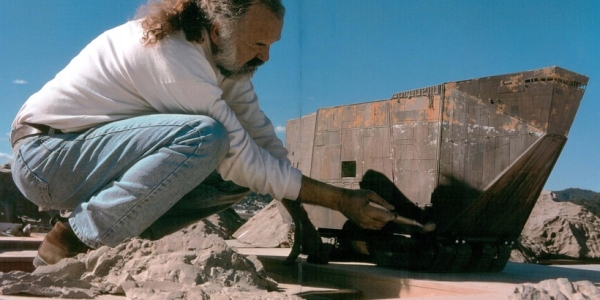

In recent years MPC has relied on a Smart Vector workflow for a number of ‘digital make-up’ projects. On The Mummy, for example, the toolset allowed artists to track CG tattoos and insert ‘mummy-like’ wounds on actress Sofia Boutella’s face and body. ILM employed Smart Vectors to add extra milk dribble to Luke Skywalker’s beard – a complicated surface – in The Last Jedi. The studio also used the toolset for the infected eye effects in Bird Box.

Union VFX had a number of blood and wound shots on Cold Pursuit where Smart Vectors came into play, including a scene in which a character is shot against a number of wedding dresses and slides down them. The resulting blood spatter on the intricate white fabric was tracked onto the dresses with the aid of the Smart Vector toolset.

Logan similarly had many wounds and blood hits, and there Image Engine’s matte painting team used Smart Vectors for the work, something that might normally have been purely the domain of compositors. Another film to take advantage of the Smart Vector toolset was Victor Perez’s short Echo.

Smart Vectors are regularly used in commercials, too, especially on tight turnarounds. For a ‘Unicef – End Violence’ TVC, BlindPig and Absolute Post created animated tattoos over David Beckham’s body with the toolset.

Continual development

Since Nuke 10, Foundry has continued to iterate on the Smart Vector toolset in response to user feedback. “From Nuke 11.2,” details Ring, “we’ve made the Smart Vector nodes GPU enabled so they run way quicker. Originally you needed to pre-render your Smart Vectors before using them, but now you can generate them on the fly in a script. The VectorDistort node also has an advanced internal caching mechanism that vastly speeds up processing if you play through the sequence linearly.”

Other improvements include better image filtering and the removal of the “hacky meta-data system it relied on,” comments Ring. “In Nuke 11 we added a control to ‘blur’ the ST-map. This allows some of the micro-distortions to be filtered, recovering a lot of the larger motion.”
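A minimal sketch of why blurring an ST-map can separate micro-distortions from larger motion, again in one dimension. The box filter, the alternating wobble and the helper name `box_blur` are all assumptions for illustration; the article does not say which filter Nuke’s blur control uses.

```python
def box_blur(values, radius):
    """Average each sample with its neighbors within `radius` (indices clamped at the edges)."""
    n = len(values)
    blurred = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


# Hypothetical 1D ST-map: every pixel samples 2 pixels to the left (the "larger motion"),
# plus an alternating +/-0.3 pixel wobble standing in for micro-distortions.
width = 10
st_map = [x - 2 + (0.3 if x % 2 == 0 else -0.3) for x in range(width)]
smoothed = box_blur(st_map, radius=1)

for x in range(1, width - 1):  # interior pixels only; the clamped edges are skipped
    print(x, round(st_map[x] - (x - 2), 3), round(smoothed[x] - (x - 2), 3))
```

Because a box filter leaves a straight ramp unchanged away from the edges, the 2-pixel shift survives the blur while the wobble drops from 0.3 to 0.1 pixels. A stronger blur would also flatten genuine local motion, so in practice the amount is a trade-off.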

Nuke 11 also gave users the ability to render on the farm, which meant Foundry had to adapt Smart Vectors to accommodate it. “Earlier Smart Vector generation relied on keeping internal knowledge of a ‘root’ frame, like a hardcoded reference point from which we could walk the graph,” explains Ring. “Unfortunately rendering on the farm meant you couldn’t share that root frame properly, and so the vectors would be created out of order. As part of cleaning up the meta-data transport we were able to remove all hard-coded values, and now infer them on the fly, which also means that if Smart Vectors were prepended or appended to an existing clip they would still work.”

Nuke 11.2 also added a Matte Input to Smart Vectors, which lets artists mark a region so that its motion is excluded from the Smart Vectors. “This is great when you want to place something on the background and you have a foreground object obscuring it, for example an arm moving across a body that you’ve placed a tattoo on,” says Ring. “Previously the arm would ‘drag’ the tattoo off the body, but now it all behaves more sensibly.”

Smart Vectors today

More recently, Foundry has expanded the set of tools that use Smart Vectors. The core tool is still VectorDistort, which uses the underlying motion described by the Smart Vectors to distort the pixels in an image. Essentially, it drives the pixels in one image with the movement in another.

“Even with the algorithmic improvements, under complex motion, Smart Vector warps using VectorDistort can still start to drift or turn ‘soupy’ after a while,” acknowledges Ring. “To solve that we added the VectorCornerPin node in Nuke 11.3. VectorCornerPin allows a user to constrain the output of the warp by adding ‘user’ keyframes on a corner pin. At those keyframes, the warped output will always obey the corner pin transform, but between them the Smart Vectors take over and control the warp. Put another way, it’s like a CornerPin node where the intermediate frames aren’t linearly interpolated, but controlled by the underlying image motion.”
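One way to picture a keyframe-constrained, vector-driven corner is sketched below in Python for a single corner moving in one dimension. This is a hypothetical scheme, not Foundry’s documented algorithm, and `pin_track` is an illustrative name: the corner is pushed forward from the first keyframe and pulled backward from the next keyframe through assumed per-frame motion values, and the two estimates are cross-faded so the track matches both keyframes exactly.

```python
def pin_track(p0, p1, motion):
    """Hypothetical 1D corner track between two user keyframes.

    p0, p1: user-set corner positions at the first and last keyframe.
    motion[i]: displacement the vectors report from frame i to frame i + 1.
    """
    if not motion:
        raise ValueError("need at least one frame of motion between keyframes")
    n = len(motion)
    forward = [p0]
    for m in motion:                  # push the first keyframe forward through the vectors
        forward.append(forward[-1] + m)
    backward = [p1]
    for m in reversed(motion):        # pull the last keyframe backward through the vectors
        backward.append(backward[-1] - m)
    backward.reverse()
    track = []
    for f in range(n + 1):
        w = f / n                     # 0 at the first keyframe, 1 at the last
        track.append((1 - w) * forward[f] + w * backward[f])
    return track


# The vectors report no motion, then a 4-pixel jump between frames 2 and 3
print(pin_track(10.0, 15.0, [0.0, 0.0, 4.0, 0.0]))  # [10.0, 10.25, 10.5, 14.75, 15.0]
```

Linear interpolation between the same keyframes would give 11.25, 12.5 and 13.75 for the in-between frames; the vector-driven track instead stays near 10 until the motion jumps, which is the behavior Ring describes.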

“We also added Grid Warp Tracker in Nuke 12,” says Ring. “Previously, driving a Grid Warp with the underlying image motion involved manually tracking the image and then attaching those tracks to each and every grid control point. This process was both time consuming and unreliable if the grid didn’t happen to lie on a trackable pixel. Grid Warp Tracker allows a much faster and more robust solution by allowing the user to drive the grid with Smart Vectors – all you have to do is plug in your Smart Vector input and hit track!”


Brought to you by Foundry:

This article is part of the befores & afters VFX Insight series.

I remember having that conversation with Dan. Foundry had been sending some alpha SmartPaint nodes, and Dan happened to be stateside and came through my office. We were talking vectors, and I was like “Hey, let me show you this ST-warp rig we’re using a ton. This is what I want, but with vectors,” and I saw the lightbulb go on over Dan’s head. A week or two later he sent me the first iteration of the vector distort.

Thanks for sharing this memory, Austin!