How a call to help deliver the biggest film in the world changed everything for Marcus Nordenstam.

It was about a decade ago that a new fluid simulation tool burst into the consciousness of 3D artists worldwide. This was Naiad, from Exotic Matter, and it quickly became a much sought-after solution for large-scale water sims, gaining significant attention through its use by Weta Digital in the 2009 blockbuster Avatar.

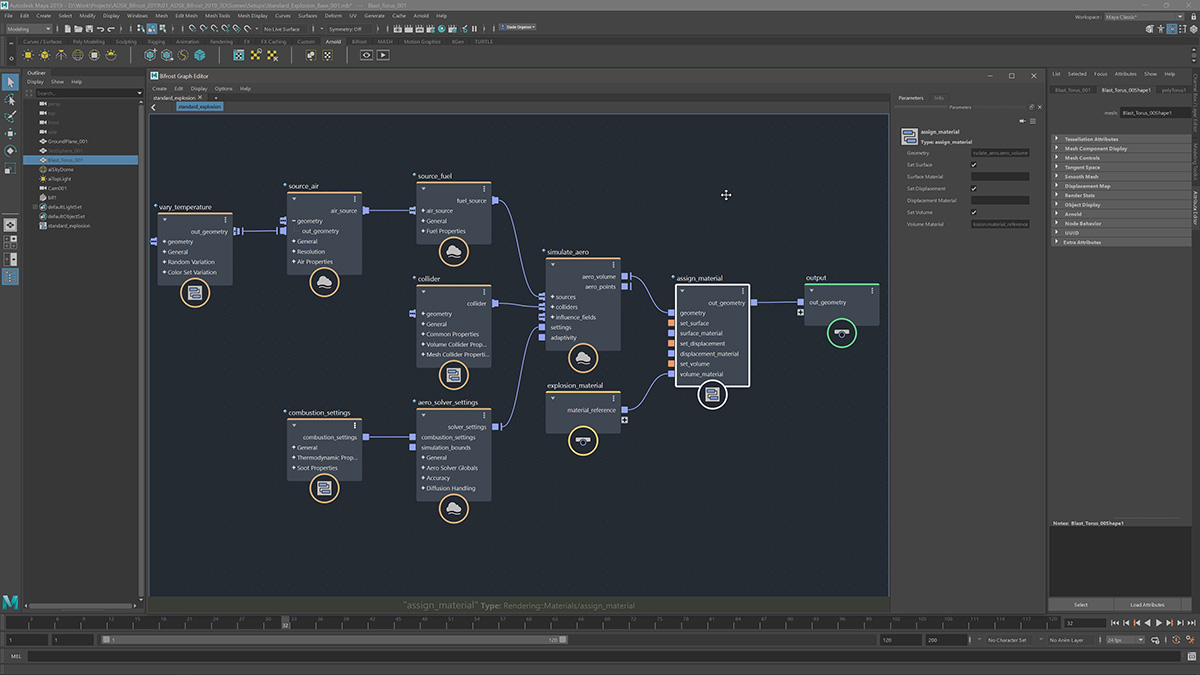

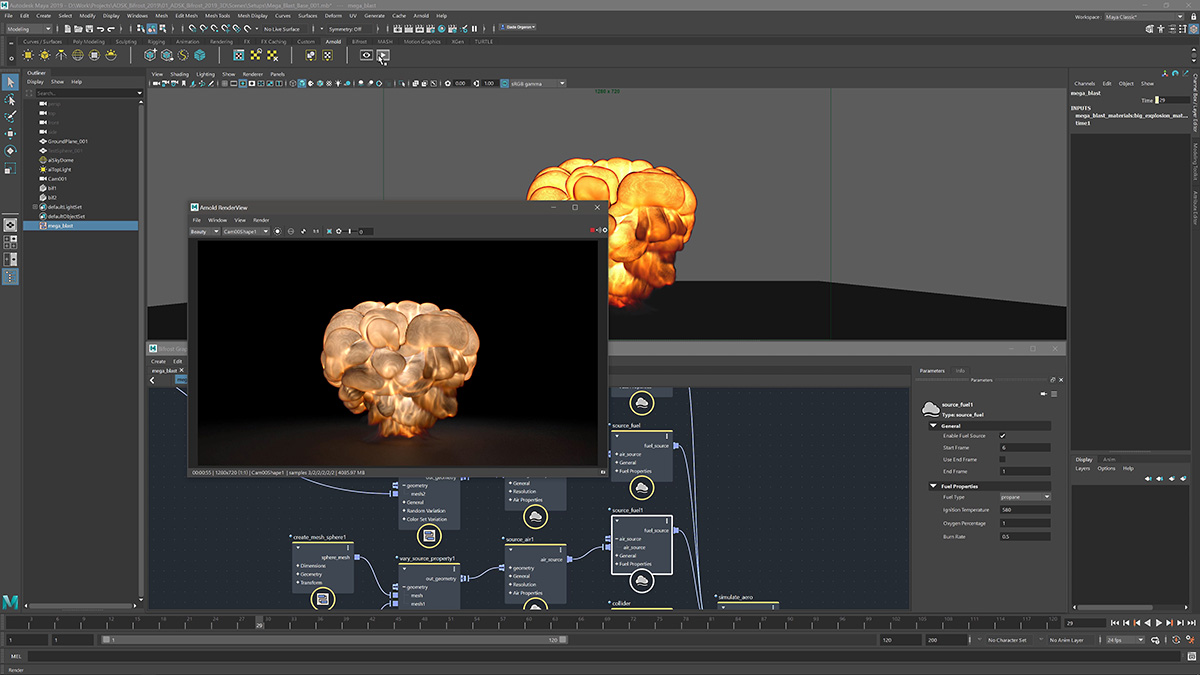

Then, in 2012, Exotic Matter was acquired by Autodesk and Naiad became Bifrost within Maya. While a version of the fluid simulator has been available since Maya 2015, the true potential of Bifrost as a visual programming language was only released at SIGGRAPH this year.

It’s been a long road for the toolset and the team, so I decided to ask Marcus Nordenstam, Exotic Matter co-founder and now a senior product manager at Autodesk, about his Bifrost journey, starting with Avatar. We also talked about why the new Bifrost has taken some time to be released, the influence of Softimage ICE, and what’s next in terms of future development and releases.

b&a: You ended up going down to Weta Digital to help with Avatar. How did that come about?

Marcus Nordenstam: I had just left DNEG where Robert Bridson and I had built that first FLIP (fluid-implicit particle) solver – at least, that we were aware of in a commercial setting as an in-house tool. We started the company Exotic Matter in a small town in southern Sweden. I think it was about three months into it, we had just gotten the hydrostatic pressure tests working, which is where, from a simulation and a numerical point of view, you put water in a glass and you make sure it doesn’t lose volume, grow, or expand due to the wrong fluid pressures being computed.
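The balance that a hydrostatic test checks can be sketched in a few lines. This is only an illustration of the physics, not Naiad's actual test: the function name, the cell count and the glass height are all hypothetical. It fills a one-dimensional column with the analytic hydrostatic pressure and confirms that the pressure gradient cancels gravity, so water at rest stays at rest.

```python
import numpy as np

def hydrostatic_residual(n_cells=64, height=0.1, rho=1000.0, g=9.81):
    """Largest net vertical acceleration in a resting column of water.

    All parameters are illustrative assumptions (SI units).
    """
    dz = height / n_cells
    # Cell centres, measured upward from the bottom of the glass.
    z = (np.arange(n_cells) + 0.5) * dz
    # Hydrostatic pressure: the weight of the water above each cell centre.
    p = rho * g * (height - z)
    # Upward acceleration from the pressure gradient on interior faces.
    accel_pressure = -(p[1:] - p[:-1]) / (rho * dz)
    # Gravity pulls down; for water at rest the two must cancel.
    net = accel_pressure - g
    return float(np.max(np.abs(net)))

residual = hydrostatic_residual()
print(f"max net acceleration: {residual:.2e} m/s^2")
```

A solver that computes the wrong pressures would leave a non-zero residual here, which is what shows up on screen as water that slowly loses or gains volume.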

We’d gotten to that point, so we figured our implementation of the Navier-Stokes equations was working. We were fairly confident that the solver was up and running in a very basic way, but at that time the UX was black and white. I hadn’t had time to put colors on the nodes or anything! Out of the blue, Joe Letteri’s team at Weta Digital called me up and explained that for the past couple of years, they’d been trying various commercial software and in-house projects and all kinds of mixes of things, but that they were essentially facing a bit of a water crisis on this Avatar movie.

They had hired Sebastian Marino, who was an R&D guy I used to work with at ILM. He was one of the two or three people that I’d told that I had gone to Sweden to start this new fluid solver project. Miraculously, Weta Digital hires the one guy who knows what I was doing when they needed a water solver.

The decision was made to go down there, even though Naiad was in a very alpha state. I figured if I was there in person, at least I could fix things if they broke. We felt confident enough that we’d get it to work and that it wasn’t going to blow up in our face and destroy our PR ability for an unproven product in the industry.

b&a: Avatar was released in December 2009 – when did you head down to Wellington?

Marcus Nordenstam: It was about a year before the movie came out. So, you can imagine the pressure on the production – it wasn’t very long really until release. I got there and one of the canonical tests that they just had not been able to get to work was the river scene where Jake Sully falls in. He’s being chased by that panther-like thing and jumps into the waterfall and then comes out of the river. And we have these close camera shots of him bobbing up and down in the water with the camera slicing the waterline. It’s the worst-case scenario because of the amount of resolution you need that close up with the water simulation. At the same time, you see the whole river, so you’ve got that scale working against you. No wonder they thought that was hard, right? It was a huge risk from a simulation point of view.

We got there, and we loaded the riverbed geometry into Naiad. If I remember correctly, we did that on day one. By day two or three, we had flooded their entire river bed. Jake was in there splashing around. It was just a WOW moment. For the people that had been tearing their hair out and wondering, ‘How is this going to possibly ever work?’, it was quite a day. I remember we went to the pub in Wellington to celebrate.

b&a: What do you think it was in Naiad back then that made that river shot work?

Marcus Nordenstam: It was the fact that Naiad used the FLIP numerical method. Using it on that shot showed what that method could do. The common method in use at that time – before FLIP – was SPH (smoothed-particle hydrodynamics). SPH has two big things going against it that prevent it from scaling. One is that it has to do all this neighbor-finding work so that it can resolve the pressures in between the particles, and that can be very expensive. There are ways of accelerating that, but it’s still going to turn into some kind of closest-point-search algorithm. And then there are the stability restrictions placed on the method. In other words, the faster objects move, the more little time steps you’ve got to slice the simulation into. It’s just the worst-case scenario from an algorithm point of view when doing big water simulations with high detail.
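Both costs can be made concrete with a small sketch. It is not taken from any SPH package. The uniform-grid hash is one common way to accelerate the neighbor search, and the substep count uses a generic CFL-style rule (nothing may travel more than a fraction of the particle spacing per substep), which is an assumption about the form of the stability restriction. The function names, the 24 fps frame time and the `cfl=0.4` factor are hypothetical.

```python
import math
from collections import defaultdict
from itertools import product

def build_grid(points, h):
    """Hash each 2D point into a square cell of side h."""
    grid = defaultdict(list)
    for i, (x, y) in enumerate(points):
        grid[(math.floor(x / h), math.floor(y / h))].append(i)
    return grid

def neighbors(points, grid, h, i):
    """Indices of points within distance h of point i, excluding i itself."""
    x, y = points[i]
    cx, cy = math.floor(x / h), math.floor(y / h)
    found = []
    # Only the 3x3 block of cells around the point can hold neighbors.
    for dx, dy in product((-1, 0, 1), repeat=2):
        for j in grid.get((cx + dx, cy + dy), ()):
            if j != i and math.dist(points[i], points[j]) <= h:
                found.append(j)
    return sorted(found)

def substeps_per_frame(max_speed, h, frame_dt=1.0 / 24.0, cfl=0.4):
    """Substeps needed so nothing moves more than cfl * h per substep."""
    return max(1, math.ceil(max_speed * frame_dt / (cfl * h)))

points = [(0.0, 0.0), (0.05, 0.0), (0.5, 0.5), (0.06, 0.06)]
grid = build_grid(points, h=0.1)
print(neighbors(points, grid, 0.1, 0))
print(substeps_per_frame(max_speed=2.0, h=0.01))
print(substeps_per_frame(max_speed=20.0, h=0.01))
```

Even with the grid, every particle still runs a distance check against its local candidates on every substep, and the substep count grows with speed and with resolution, so the two costs multiply.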

Weta Digital had tried that and it was a non-starter. Then there were the more advanced R&D-type methods, like PLS (particle level set methods). That would have worked, but there was no product that could do that at the time. It only really existed in PhysBAM, which is what ILM was using.

From the beginning, we had chosen to use FLIP because we felt like that was the next-gen solver tech. PLS is a lot more advanced in some ways, too. The way that works is you have to track all these particles at the surface of the water to make sure you don’t lose volume when the voxels evaporate based on these advection algorithms.

At the time, there were really only two methods that could scale to that type of simulation problem: one was PLS, and the other one was this new idea around FLIP, which we had validated worked in production at DNEG. The whole world caught on to FLIP after that, and I think that Avatar showcased what FLIP could do.

b&a: So what was the outcome of people seeing this great work in Avatar? People actually did seem to know about Naiad a little by the time it came out, but do you remember what the reaction was specifically after Avatar?

Marcus Nordenstam: Part of it was this new FLIP solver in Naiad, and part of it was the amazing artistry. I mean, if we had just taken that raw simulation data from Naiad and handed it off to any old artist at any old studio, the results would have varied a lot. There were a lot of other things that had to happen to dress up the shots and make them look great. FLIP basically made it possible for the good artists at Weta Digital to shine. Without the FLIP solver, there would have been no chance. There was no foundation for them to do the shot. The stuff you see in that movie, in the end, a lot of that is just very talented artists doing stuff on top of our cool data.

The reaction was: people were astounded, and I was flooded with emails. There’s a guy – Igor Zanic – he did a lot of the early Naiad tests that were released on the web. He was like, ‘Give me this thing.’ I didn’t know who he was, and we sent him a beta version and then two days later, he’d done sharks jumping or a flooded city. All with no documentation and just the black and white interface – really crude stuff.

I think there were people in the industry who were just chomping at the bit. They were fed up with never being able to do these kinds of cool water sims that they would see from ILM. Naiad really spoke to them. It really said, ‘Hey you, do you have aspirational visions for doing blockbuster effects? Guess what? This tool actually will let you do it!’ There was a lot of excitement and empowerment.

b&a: There was this excitement and people were doing shots with it, but what were the challenges for you as a small software company in getting Naiad out there and delivering it?

Marcus Nordenstam: The classic thing happened: we signed with most of the big studios, even studios that had in-house R&D, which meant that we were beginning to become profitable as a little company. As we grew, the support burden increased and a lot of things like that. I started looking at various options going forward to increase revenue streams, hire support people, and continue to build up the company, but I was also keenly aware of the fact that we were always destined to be this niche product.

Both Robert and I really believed that there was a lot more than fluids in store for Naiad. After a couple of years, when we started talking to various companies about licensing our tech, the resounding response was ‘no, we don’t want to license your tech, we’d rather just acquire you’. I was like, wow – I hadn’t really thought about that.

Eventually, when Autodesk came along and we talked to them, it was pretty clear that we could continue alone and always be a niche player, or we could really get the opportunity to try to impact the whole industry in a far more fundamental way than we probably could have done in the same amount of time had we just struggled on.

b&a: What were some of the big challenges you had, after the acquisition, in integrating the packages?

Marcus Nordenstam: For me personally, I had been very involved in the development – the architecture, the design, everything – of Naiad. I wrote probably 75% or 80% of all the code of Naiad, so it was a pretty intense engineering effort. And not to take anything away from Robert – he wrote all the clever bits.

Coming from that kind of entrepreneurial situation and being a heavy-duty code and design type of person, I think it was hard for me when we started Bifrost. The vision was clear from the beginning: we had to build a much more open system than Naiad was. It did have nodes, but you couldn’t go inside the nodes. We didn’t really even have scripting nodes and there was nothing like visual programming in it. Despite that, it was quite effective for what it did.

We knew from the beginning with Bifrost that we wanted to have an open graph with visual programming where you could go into the nodes and change them. We also knew we wanted to leverage things like LLVM for compilation. The theory was always that that type of technology could help make what amounts to scripting and node-based workflows actually run at the same speed as compiled code. I learned later (during SIGGRAPH 2014) that Fabric Engine had been using LLVM for similar purposes since 2010 – so credit goes to them being the true LLVM pioneers.

A lot of those visionary things, a lot of those goals were clear for us. The hard part for me was learning how to work with a big team of developers that I’d never worked with before. Understanding how they communicated – everything from schedules to approaches and meetings. Because of my entrepreneurial background, I was used to working out those technical details myself and I always found myself going into a situation where I would say, well, if I was going to do it, I would do it like ‘this.’ In retrospect, I realize those were actually some of the years where I grew the most in my future leadership capabilities.

b&a: What about the technical challenges, especially in terms of implementing the tech in Maya?

Marcus Nordenstam: One of the technologies that we wanted to embark on right away was LLVM and use that as the compiler architecture. The thought was that we want to have the ease of use of scripting and visual programming and nodes, but that shouldn’t mean that you have to compromise, as an artist or user, in the performance of the tools or systems you build. The belief was that LLVM could be that solution. We’ve stuck to that, although we’ve evolved with that technology. LLVM essentially takes this thing called IR, which is intermediate representation. It’s this low-level language that operates regardless of whatever the syntax of the actual programming language is. If you’re writing in C++, it would turn that into IR, or if you’re writing Python, you would turn that into IR. In our case, it was the visual programming graph that gets turned into IR.

The technology we had to start with was really just more or less a system that takes this IR representation, JIT-compiles it and creates executable code. (JIT-compilation is a way of executing code that involves compilation during the execution of a program – at run-time – rather than prior to its execution. In practice, it provides some of the ease-of-use of a scripting language, while not sacrificing the speed of execution by much, or at all).
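The shape of that pipeline (node graph in, generated code out, compiled at run-time) can be sketched with nothing but the Python standard library. This is a stand-in and not how Bifrost works: it emits Python source rather than LLVM IR, and Python's built-in `compile()` plays the role of the JIT step. The graph format and the names `graph_to_source`, `jit` and `kernel` are hypothetical.

```python
def graph_to_source(graph, output, inputs):
    """Emit Python source for a function that evaluates a node graph.

    graph maps a node name to (op, left, right); names listed in
    inputs are the function's arguments.
    """
    ops = {"add": "+", "sub": "-", "mul": "*"}
    lines = [f"def kernel({', '.join(inputs)}):"]
    emitted = set(inputs)

    def emit(name):
        # Depth-first walk so every node is emitted after its operands.
        if name in emitted:
            return
        op, left, right = graph[name]
        emit(left)
        emit(right)
        lines.append(f"    {name} = {left} {ops[op]} {right}")
        emitted.add(name)

    emit(output)
    lines.append(f"    return {output}")
    return "\n".join(lines)

def jit(graph, output, inputs):
    """Compile the generated source at run-time and return the function."""
    source = graph_to_source(graph, output, inputs)
    namespace = {}
    exec(compile(source, "<graph>", "exec"), namespace)
    return namespace["kernel"]

# A two-node graph: result = (x * gain) + offset
graph = {
    "scaled": ("mul", "x", "gain"),
    "result": ("add", "scaled", "offset"),
}
kernel = jit(graph, "result", ["x", "gain", "offset"])
print(graph_to_source(graph, "result", ["x", "gain", "offset"]))
print(kernel(2.0, 3.0, 1.0))
```

The graph is walked once, when it is compiled. After that, `kernel` is an ordinary function with no per-node dispatch, which is the property that lets a node-based workflow approach the speed of hand-written code.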

That’s great because that’s non-trivial stuff, but we still had to build the front-end to that; the thing that takes the visual programming graph and turns that into the consumable LLVM / IR. There was a lot of R&D there, which was one of the reasons why we didn’t release the open graph in Bifrost until July of this year. It needed to be more artist-friendly. What we did have was a good FLIP solver, which had been updated and improved on since Naiad; we released this as Bifrost Fluids with Maya 2015.

Then, we re-did the front-end and a lot of the technology that sits on top of the LLVM to produce what we have now – Bifrost for Maya. It runs very fast, as people I’m sure are finding out.

With Bifrost, we weren’t just building an artist tool – we were building a new programming language (that happened to be node-based), a compiler toolchain that compiles that language, and – at the same time! – production-ready, customer-facing features and workflows on top of all that. The scope of that, for anyone that’s ever tried it, is, well, enormous. That’s why it took a long time. One cannot compare the engineering and product design effort of that with, say, writing new C++ nodes that implement new features in an existing node-based environment.

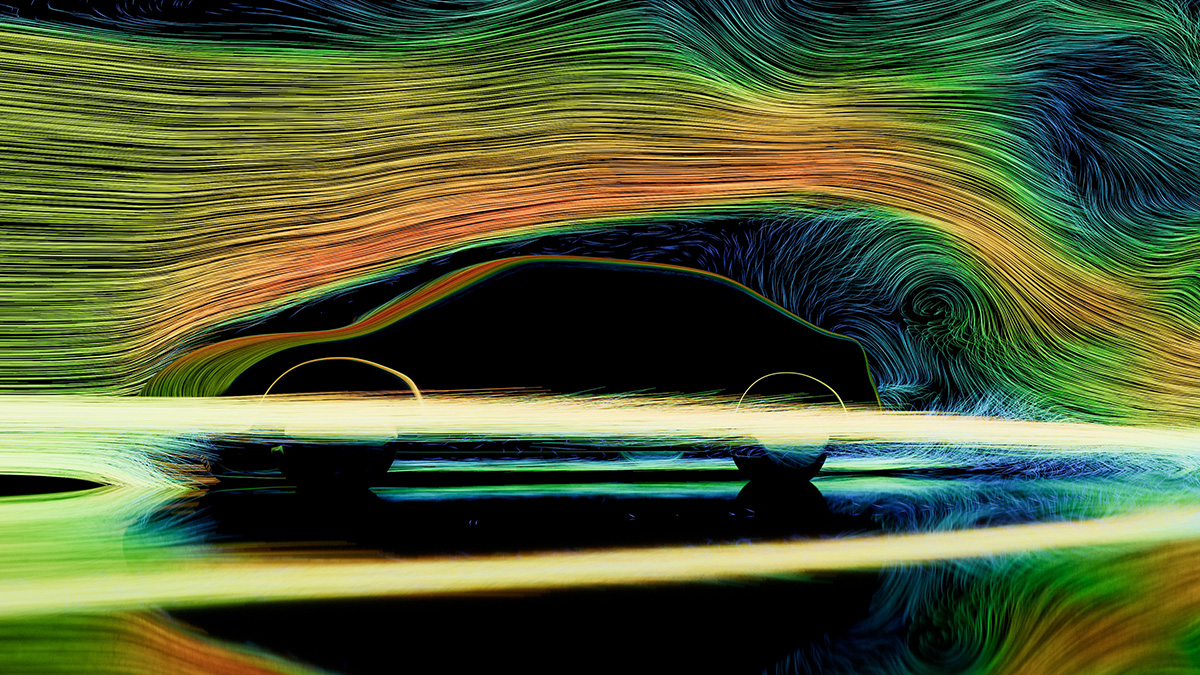

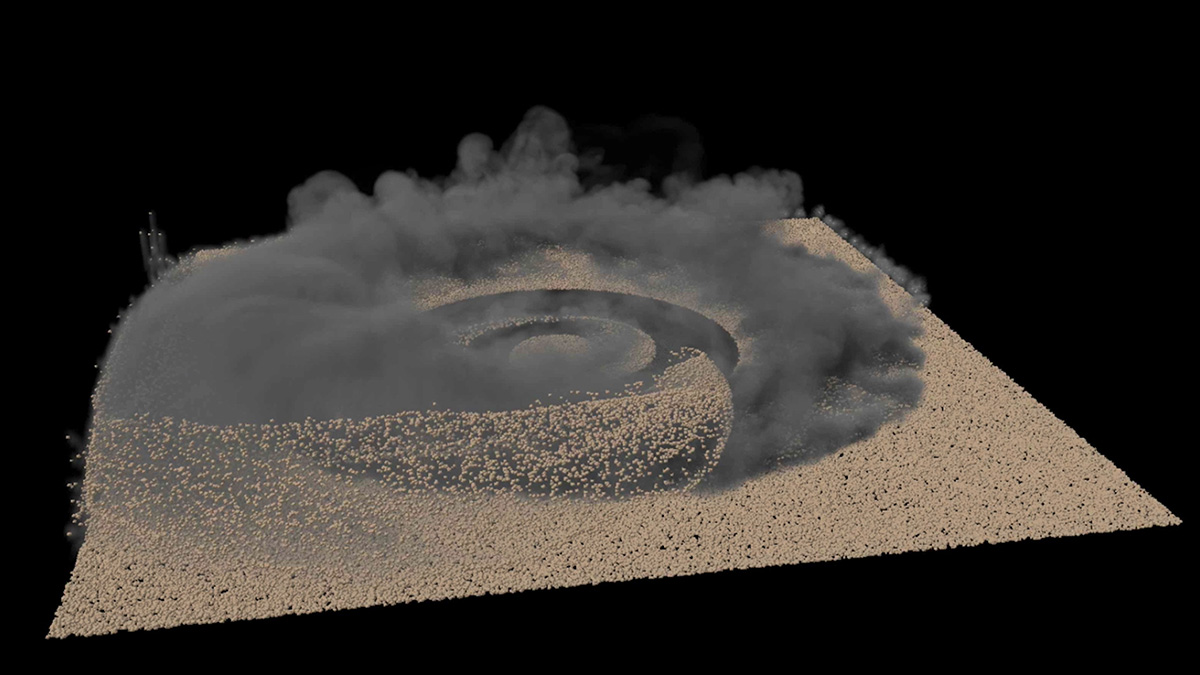

b&a: Naiad was obviously a fluid simulator. You’ve mentioned this a little already, but what is it about Bifrost that now allows it to do more than that?

Marcus Nordenstam: Part of the vision for Bifrost was that it would be a visual programming environment for 3D graphics. That’s what it was always intended to be. Although we only got the liquid solver going in the beginning, that didn’t change where we were going. We went a little bit dark for a period of time because we were working hard on realizing that vision.

As we worked towards that vision and it became real, we were really excited to deliver something that really is visual programming for 3D graphics, very much in the spirit of Softimage ICE.

To answer your question, what is it about Bifrost that now allows you to do all these other things apart from fluids? Well, the solvers are just nodes in the graph and the data model that all these solvers operate on is very open. For example, I’ve used visual programming in Bifrost to add vertices of a cube, and then add connectivity to connect up all the faces, and thereby have created a node in Bifrost that creates a cube.
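In plain code, the cube example comes down to two lists: vertex positions, and faces that index into them. The sketch below is not Bifrost's data model. `make_cube` and `edge_set` are hypothetical names, and the vertex ordering is just one convenient choice.

```python
from itertools import product

def make_cube(size=1.0):
    """Return (vertices, faces) for an axis-aligned cube centred at the origin."""
    half = size / 2.0
    # Vertex index = 4*ix + 2*iy + iz, each bit choosing -half or +half.
    vertices = [tuple(half if bit else -half for bit in bits)
                for bits in product((0, 1), repeat=3)]
    # Connectivity: each face is a loop of four vertex indices,
    # wound counter-clockwise when viewed from outside the cube.
    faces = [
        (0, 1, 3, 2),  # x = -half
        (4, 6, 7, 5),  # x = +half
        (0, 4, 5, 1),  # y = -half
        (2, 3, 7, 6),  # y = +half
        (0, 2, 6, 4),  # z = -half
        (1, 5, 7, 3),  # z = +half
    ]
    return vertices, faces

def edge_set(faces):
    """Collect the undirected edges implied by the face loops."""
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add(frozenset((a, b)))
    return edges

vertices, faces = make_cube()
edges = edge_set(faces)
# Euler's formula for a closed, sphere-like mesh: V - E + F = 2
print(len(vertices), len(edges), len(faces))
```

Checking Euler's formula is a cheap way to confirm that the connectivity closes up into a solid rather than leaving a hole or a duplicated face.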

Right now, I’m also working on one that creates lightning. You can have some spheres and it’ll use that to guide the path as lightning strikes. As people begin to get into Bifrost and take on visual programming, they will find that they can do almost anything that has to do with content creation for 3D graphics. It’s just a matter of how motivated you are to get into visual programming.

One of the developers that we hired recently, Jonah Friedman, who is now the product owner of the visual programming aspect of Bifrost, has a strong Softimage ICE background. One of the things that he’s talked about a lot – which I think is really true of Bifrost – is that Softimage ICE elevated artists.

It worked almost as a career developer. Artists who were not technical at all started realizing that, ‘Hey, I’m looking at these graphs that others are making, and I need to tweak them.’

And it would set them on this slow, gentle on-ramp to become a technical artist without ever really aspiring to be one. It would elevate these people, and they would become these superstars where they were like ninjas of CG. That’s why ICE had such a following: because it had that transformative effect on artists. It would deepen their respect for the art, for their discipline.

That effect that ICE had with artists is one of our key goals with Bifrost. What ICE did to Softimage and what it did to artists, we’re really hoping Bifrost will do to Maya and to Max, and perhaps to other places and artists.

b&a: Before you released the graph and visual programming side of Bifrost this year, what kind of campaign or program did you have to get it out there in terms of beta testing?

Marcus Nordenstam: In the last year, we opened up the beta for Bifrost. We had 30 or 40 artists on that beta and that grew to 100, just as we launched this past SIGGRAPH. Some of the most prolific beta testers include people like Maxime Jeanmougin – he’s an ex-Fabric Engine user who did a lot of rigging and deformer tests, created eyelid deformers and that type of thing with Bifrost.

Another artist is Bruce Lee, whose real name is obviously not Bruce Lee, but that’s what he goes by in the internet world. He’s done some really amazing MPM (material point method) simulations, like a snow leopard walking through snow.

Then there’s Duncan Rudd, who has created a really cool Bézier deformer for tendrils that could be used for octopus tentacles. There’s a lot of excitement around the potential to use Bifrost to build what I call ‘hybrid’ rigs – a rig in Maya where some of the nodes that define the rig are regular DG (Dependency Graph) nodes like you would use today, but also mixed in there are Bifrost graphs, which show up as regular DG nodes from Maya’s point of view. So, you can start building rigs that are partly Bifrost and partly Maya.

b&a: Online, people are wondering if there’s going to be a 3ds Max version of Bifrost. Are you able to say what is coming next?

Marcus Nordenstam: We have publicly announced the presence of a Bifrost beta for 3ds Max. That does exist. The project is real and the 3ds Max team is working on it every day.

While I can’t comment specifically on what’s coming down the line, a big focus for my team is performance. We’re looking at eliminating blockers one by one. Once there are no obvious blockers for almost anything you could imagine doing, you may see some more complete higher-level workflows appear that touch more than just FX.

Find out more about the release of Bifrost in this Maya blog post, and look out for a whole bunch of Bifrost-related postings at befores & afters in this special series.

Sponsored by Autodesk:

This is a sponsored article and part of the befores & afters VFX Insight series. If you’d like to promote your VFX/animation/CG tech or service, you can find out more about the VFX Insight series right here.
