It changed HUDs forever – members of the original Iron Man HUD design group look back on the work

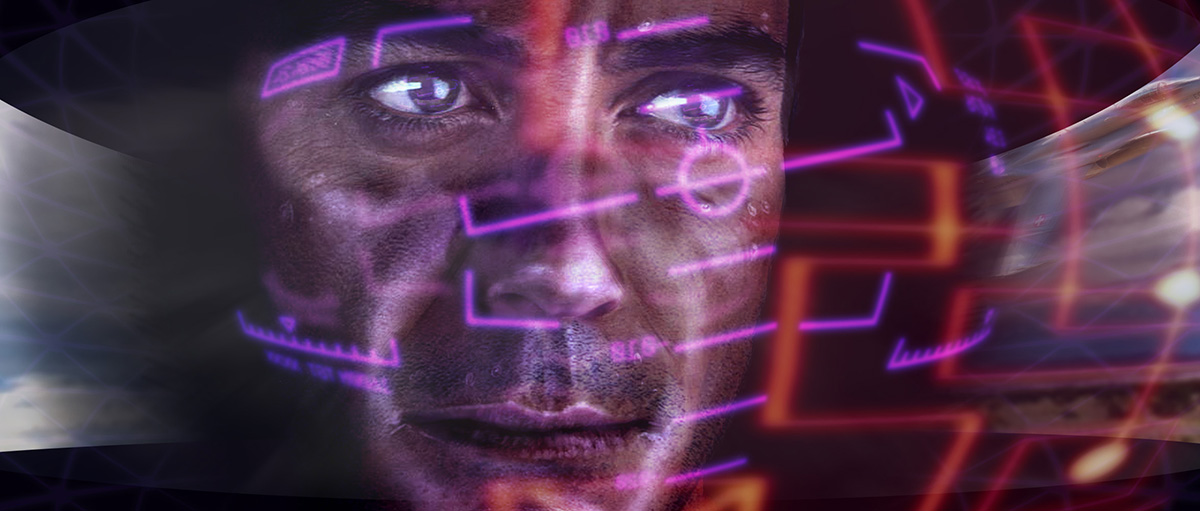

It’s ten years since the world got its first taste of Robert Downey Jr. as Iron Man, and one thing that certainly still sticks in the minds of many audience members is the hero’s heads-up display, or HUD. The HUD is seen inside the helmets of the different ‘Marks’ of the Iron Man suit and of the Iron Monger suit worn by Jeff Bridges’ villain Obadiah Stane, and of course in subsequent Iron Man and Avengers outings. Some might say, too, that the original designs from the 2008 Jon Favreau film influenced the HUDs in many other movies.

While the creation of the original HUD in Iron Man certainly paved the way for future designs, the task proved incredibly challenging for the film’s visual effects team. What should it look like? How would it work? How would it be created? Promising early work by visual effects studio The Orphanage hit a stumbling block part-way through post-production, and a decision to adopt a new compositing system also required a steep learning curve. But the final results speak for themselves.

On the tenth anniversary of Iron Man and with the release of Avengers: Infinity War, I asked several members of that original HUD creation group how they made a visual effect for the ages.

Will a HUD work? Will Iron Man work?

John Nelson (visual effects supervisor, Iron Man): The key question was, how do you get inside the helmet but still have this virtual environment that feels organic? We were trying to get an organic conversation going between his A.I. assistant J.A.R.V.I.S. [Paul Bettany] and Tony Stark [Downey Jr.]. But no one really knew what that meant.

Wesley Sewell (additional visual effects supervisor, Iron Man): A lot of things about Iron Man were a very big unknown back then. People weren’t sure the movie itself was going to work, and who really was going to care about this sub-Marvel character named ‘Iron Man’? But obviously it’s now legend. One of the big concerns that the studio was having was, what’s that going to look like when you go inside the mask? What’s it going to look like when you get into the HUD, and can you actually believe it?

John Nelson: We had some ideas. Maybe Robert Downey Jr. would look left, we’d bring something in left, he’d go right, we’d bring a graphic in right. The camera would swing around, we’d bring another graphic that would swing around like that while he’s talking about it as if he’s directing it verbally. But these were early ideas.

Kent Seki (visualisation/HUD effects supervisor, Pixel Liberation Front): The HUD shots of Robert Downey Jr. were a risky proposition at that time, when you take them at face value, no pun intended. Their success in the film required the audience to take a leap of faith that, as the action unfolded, you could cut to these ‘high-concept’ shots of Tony’s face inside the suit. When you stop and think about it, the actual camera that shoots Tony exists inside the mask, yet it allows these graphics, which really only appear in the eye-piece of the helmet, to be projected in 3D space around his face. It was, essentially, an impossible shot.

Wesley Sewell: There was a real concern that it wouldn’t work. Seriously, people were very worried. John Nelson and I had many conversations, like, is this going to play? And we didn’t know. So you put on a mask, and the mask is right up against your face, and then you’re going to cut inside of it and show you the face of Tony Stark inside of it with these three dimensional objects floating in front of it. We didn’t know if it would work in terms of, would the audience believe it. We were very unsure.

Kent Seki: In fact, we considered the shots of Tony’s face to be a composite ‘stitch’ of several small camera views inside the mask that create a virtual representation of Tony, while yet another representation of the graphics is composited on top of that view. Of course, in the end, the audience just bought the concept.

So, what should the HUD look like?

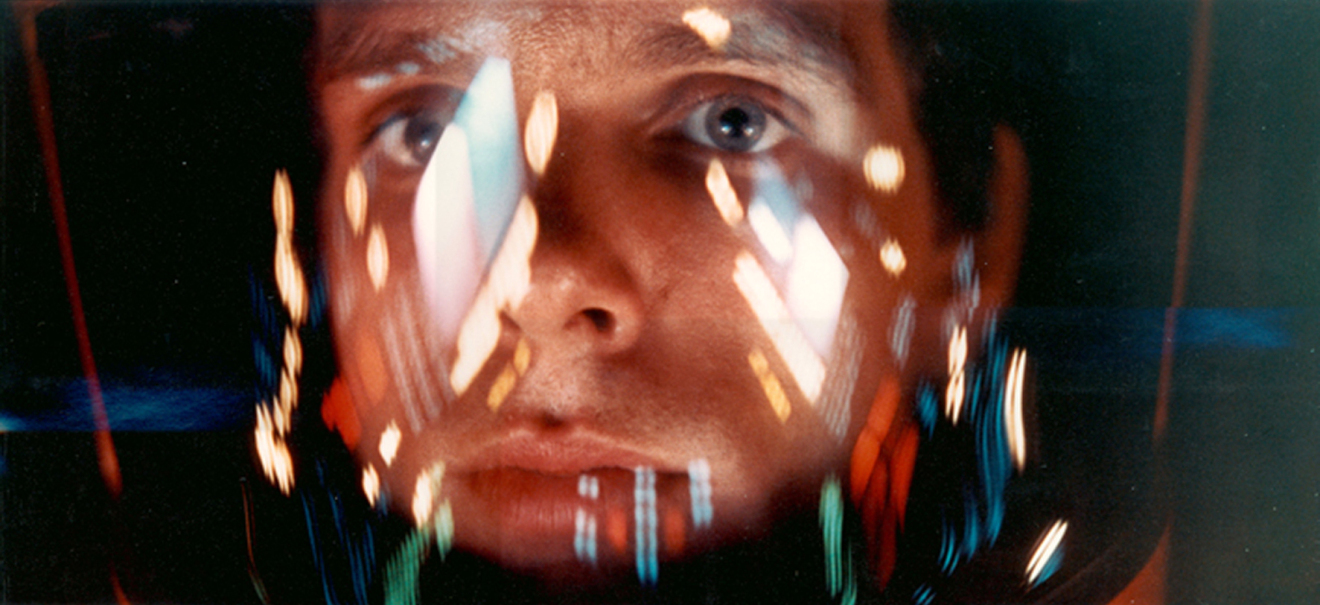

Kent Seki: On one of my first days on the show, I walked into John Nelson’s office. He had a screen grab from 2001: A Space Odyssey pinned to the wall. It was the iconic close-up shot of Dave in the slit-scan sequence. Dave had his helmet on, reflecting the graphics that he was seeing. When I asked him about it, John got very excited and began to talk about these shots of Tony’s face with his UI graphics surrounding him that were going to be used when Tony was in the suit to allow the audience to connect with him. I thought to myself, ‘Those are going to be some of the coolest shots in the film!’

Jonathan Rothbart (visual effects supervisor, The Orphanage): John Nelson had also shown me an image from 2001, where you just saw the reflection of numbers on Dave’s face from when he was going through the computer bank. He said, ‘The problem we have is that Robert Downey Jr. needs to be covered with a helmet the whole time but we don’t want our star to be covered with a helmet through the whole movie.’ That was about as much as we had to go off of at the time, in terms of how to handle it.

Kent Seki: John Nelson always saw the suit and its accompanying technology as an extension of Tony. In this way, Tony’s technological evolution reflected his own evolution as a scientist, inventor, and ultimately as a character in the larger story. With this in mind, John said there were three things the HUDs needed to do:

1. They needed to be driven by Robert’s performance. You had to remember that it was all about Robert. It’s his face. It’s about what he was doing. He had to drive it. You could obscure the performance with the graphics sometimes, but at the same time you had to let the performance drive where the graphics go.

2. You needed to let the Z-axis space really work, because that’s what made the HUD shots successful. That is, there was this notion that there was much more beyond the camera, but because we were so intimate, you couldn’t see those things. The implied Z-space depth behind the camera was just as important as what the audience could see.

3. You should have an alpha event happen in each of the shots. What he meant by that was there needs to be a story point that you hit home with a graphic. For example, in one shot, Robert would say (regarding his power level), ‘Leave it up on the screen.’ Then, instead of appearing and disappearing, the power meter stayed up on the screen. Most alpha events weren’t noticeable or even obvious; however, they reinforced the subtext of the moment or the important plot point.

Testing times

Jonathan Rothbart: Back then, everybody wanted to be on Iron Man. And so, every VFX facility that wanted to get Iron Man had to do a test to get on the show. We did a test for the HUD and for shots of Iron Man in the suit, and the moment in the film where he blows up the tank actually came from our test.

Dav Rauch (HUD design supervisor, The Orphanage): Every now and then we’d get a request from a client to do a test. Essentially, I was something like a sequence creative art director, and since I came from a design background, I tended to get put on projects where there was this kind of look development aspect that wasn’t totally visual effects-oriented. They had some greenscreen footage that they gave us, and they said basically, ‘We want to see what you would do with it.’ It wasn’t Robert Downey Jr., it was a stand-in. It was just his head on a screen and he was looking around. I put together a few really simple tests in After Effects, just pretty fast.

Kent Seki: Pixel Liberation Front (PLF) provided two early tests during the bidding process for final HUD shots. I created the test of the Close Up (HUD) shots and PLF Artist Andy Jones created the test for the Point of View (POV) shots. For the HUD shot, I tracked footage of the stand-in actor for Robert Downey Jr. using PFTrack, created vector-based design elements in Adobe Illustrator and dimensionalised them in Softimage XSI. I then rendered out the 3D elements from XSI using Mental Ray. I took these elements and composited them with the footage in Adobe After Effects. For the POV test, Andy shot some live-action footage at the Venice traffic circle, then created the graphics and composited everything in The Foundry’s NUKE.

Jonathan Rothbart: For our tests, Dav Rauch was like, ‘Well, it’d be really cool if, because he’s inside the helmet, it just follows his eyes and figures out what he wants based on where he’s looking.’ So we did that to show that the HUD pretty much follows his voice commands and his eyes. We had some really rudimentary shapes, and then we did these tests of how it’d look if things flew in and flew out as if they were coming in and out from behind camera.

Dav Rauch: The hypothesis was that we’d need an ‘eye cursor’ to show that he was looking at things and controlling the HUD with his eyes. But then when watching it, Favreau realised that it was obvious what was going on and the audience didn’t need an eye cursor to explain that he was controlling it. That’s another one of those ‘duh’ moments, where it made a lot more intuitive sense and needed a lot less explanation (if any at all). These tests show how low fidelity we were at the very beginning. The feedback we got from John Nelson was that he liked the energy of it. I liked that.

Kent Seki: John Nelson and VFX producer Victoria Alonso ultimately awarded all the HUD shots to The Orphanage, and the truth is, they deserved it. The Orphanage did a great early test, and I later heard that Jonathan Rothbart had personally helped conceive of the idea of doing them in the first place during early pre-production meetings. And they did a fantastic job. I can’t say enough good things about their commitment, contribution, creativity, and collaboration. Ultimately, The Orphanage hit it out of the park, and they were a real pleasure to work with for me. Jonathan Rothbart assembled a great team of artists and production personnel to work on the HUD.

Shooting Robert Downey Jr.

John Nelson: The live-action photography for the HUD was the last thing we shot with Robert Downey Jr. The director of photography, Matthew Libatique, and I talked about whether shooting it on a prime lens in 70mm would give us more of an advantage than shooting it on Super 35.

Wesley Sewell: Shooting it on a 70mm negative meant we would have a super big resolution to work towards and work with because we didn’t know if we would have to push in close to make it work.

John Nelson: It was also about trying to get a depth of field close to Robert that wasn’t too distorted. We didn’t want it to be too ‘pin cushion-y’, or too wide-angle. Yet, we needed to get close to him.

Wesley Sewell: We found that there was a sweet spot where it feels like you’re not too far from him, but you’re not too close to him – we tried everything. And sometimes it was right up against his eyes and his nose, and you’re like, ‘But I can’t see his mouth!’ But when you pulled back too far, it was, ‘Oh, now I’m not really feeling like I’m inside the helmet.’

Jonathan Rothbart: On set there were these different light banks and we would call out directions and have Robert look at those areas. The idea was that not only would it give him a place to look, but it would also give a little bit of light reflection on his face and eyes, which is what we were trying to get out of it. So we had put these light banks to his left, to his right and out in front of him. And then as they went off, he would look in those directions. And then of course, he was being directed by Jon Favreau for his lines and everything else. But we had kind of established this light system for him to look at.

John Nelson: Robert was looking at previs of the whole scenes. And if you get something in front of Robert to react to, he’s got ‘world-class’ reaction. He takes it all, looks at it, and puts it through the ‘Robertator’, whatever it is, and it just all comes out great. It’s like he’s in the moment. We used a beam splitter on the front of the camera so Robert could look at it and react, and see what the sequence was.

“Things just weren’t working”

Wesley Sewell: We had done a bunch of post-production and we were getting versions in from The Orphanage that were – I guess you could say they were very rudimentary versions of the HUD. And I think we all thought, John and I, and the director and producers, were thinking, ‘Well, these are just placeholders. These are just temporary and eventually we’re gonna get some really cool looking HUDs.’ And then a couple of months go by and we’re still getting the same thing. And so, that’s when we had a conversation with The Orphanage and they were like, ‘No, this is what we thought we were going with.’ And we were like, ‘Oh, no.’ And so, there was a miscommunication there.

Dav Rauch: We had started working on certain shots and I think things just weren’t working, it just wasn’t going well. We were getting kind of conflicting feedback and stuff like that and it was kind of confusing. We didn’t have a script and we didn’t have a cut. We really had no idea what we were designing for and when we started getting shots we would get little cuts but the security around the film was so tight. Then one day I came into work and there was a plane ticket on my desk. We were going down to meet Jon Favreau in LA [The Orphanage was based in San Francisco]. That was one of the big breakthroughs on the project.

Kent Seki: There were many rules and driving philosophies we established along the way that led us to the final product. I remember an early discussion in post-production with Jon Favreau. He pulled out his iPhone, which was a new thing at the time. He said, ‘I don’t want to tell you a specific graphic to make for the HUD, but I want it to feel intuitive like my iPhone.’

Dav Rauch: The iPhone had just come out like literally a week or two before the meeting with Jon – and I got an iPhone and Favreau had gotten an iPhone. When I was down there we kind of geeked out on our iPhones, and we were talking about what we liked about the iPhone because he was really inspired by it. He was like, ‘What I love about this thing is it just kind of does what it should do, and it kind of does what I want it to do and it’s very intuitive and it’s very simple.’ We opened it up and I was looking at the transitions in an iPhone. I’m like, ‘These transitions are so simple and they’re just like zooming transitions, or wipe transitions. There’s nothing fancy about this phone, but what’s fancy about this phone is that it works and it works really well.’

In that meeting with Jon, he also said, ‘You’re not designing a prop, this is not a prop, it’s not a visual effects thing. You are designing a character. We’ve got this amazing voice talent [Paul Bettany] and we have this character that nobody sees. The only visual that we’ll get of this character is what you create. You are creating this character. And I can’t tell you how important that is.’

Kent Seki: As a director, Jon was great. He knew the feeling that the HUD needed, but also allowed for the artists to bring their own creativity to making it. He used this philosophy consistently throughout the process.

Dav Rauch: So when I came back from LA – and this is when Kent really came onto the film and helped us – we looked at the HUD and we said, ‘It doesn’t work’. We’d tried to do all this extra crap to make it futuristic and fancy, and actually all we really needed to do is make it work and if it works it was going to be totally believable. So when I went back to San Francisco I told the team, ‘We are throwing away everything.’ Well, not throwing it away, but we were going to question everything.

Wesley Sewell: Kent also took it on his own as the previs supervisor to go in on a weekend and he actually started saying, ‘Look, this is what it really should be.’ And he and I actually had some really great sort of discussions on that. And he literally went home for the weekend, came back on a Monday and actually had something that was really close to what you’re seeing in the design of the final look, so we were all very excited about that.

Kent Seki: After the shoot and as post-production was rolling, it became increasingly clear that the level of detail in the HUDs was going to be substantial. John Nelson and Victoria Alonso knew that I had an affinity for these shots and gradually got me involved. They then very generously offered me a role overseeing those details under John’s guidance. I slowly began offering feedback to The Orphanage and developing a working relationship with them.

Dav Rauch: We said, ‘If it doesn’t do something that would be real for somebody flying around in this suit then we are taking it out.’ Because we figured that Tony is an engineer, and he’s going to put stuff in there that works. That’s what engineers do, they make shit that works. And so everything has to have a purpose and everything has to work and if it doesn’t work then it’s out. That’s what we did and that’s when things really turned around.

Kent Seki: We knew that the HUD was supplying information to Tony. There were three different ways he could get this information. First, J.A.R.V.I.S. could present it to him both visually and verbally. Second, he could verbally ask for it. And third, he could use his eye movement to bring forward or activate aspects of the HUD. This was a technology called ‘artificial foveation’. We used these different methods to drive how widgets and information were presented to Tony. The single most important thing about each element in the HUD was that it had a specific purpose. The audience would never know all of this backstory, but they would ‘feel’ it as long as we stayed true to form following function.

Dav Rauch: I had actually asked Jon about what Tony is looking at and is he responding to it, or is he asking, or is he looking over there and J.A.R.V.I.S. is responding to him? He thought about it for a while and then he looked at me and said, ‘They’re having a conversation and they’re in the dialogue, and sometimes RDJ is asking a question to J.A.R.V.I.S. and other times J.A.R.V.I.S. is asking a question of RDJ. Or one is saying something to the other, or sometimes the other is saying something to the other.’ And here’s the thing, Jon said, ‘Look into his eyes. If you look into his eyes you will know. Is he being asked a question or is he asking the question?’ I was like, ‘Oh my God, that’s fucking brilliant.’ I went back and I looked at RDJ’s eyes and I was like, ‘Is he being asked? Is he asking a question or is he being asked a question?’ I looked in his eyes and I was like, ‘I think he’s being asked a question in this one.’ I would do it and it fucking worked. I feel like that’s what a great director is.

The untested art of Iron Man HUD design

Dav Rauch: We knew that there were a few different suits, and that we’d need to make at least two or three HUDs. There was the Mark II and the Mark III, and the Mark III was just an upgrade of the Mark II. Then there was the Iron Monger, worn by Jeff Bridges’ character.

Jonathan Rothbart: We basically had to write a graphic script for the whole movie in order to get it done. We had this huge thick binder that Dav would carry everywhere he went, that had every shot and the progress of the suits. It would show how one graphic would then eventually turn into another graphic, which would then eventually turn into another graphic, with the timeline of all that shift and design.

Dav Rauch: I remember thinking, I really need to squirrel away all these good ideas for the HUDs because I imagined that once I developed the Mark II HUD that then when it was time to do the next version, I would have already put my best ideas in the Mark II!

Jonathan Rothbart: Graphics are sometimes, if not always, harder than other visual effects because they take so much work! The funny thing about graphics is you can’t put it in words; it has to be symbols, because it goes by so fast. And you’re trying to figure out the right symbol to portray what you’re trying to get across at that time, and have it come by in a flash so people will understand it and it tells a whole story.

Kent Seki: For the Mark II, the UI is accessed through different widgets that line the bottom of the interface, or dock. It was like a dashboard on the Macintosh or on a PC. It had five icons along the bottom and each one stood for a specific function, like diagnostics, flight, power, radar or navigation. Tony would look down and activate that function. When activated, each widget changed the mode in which he was operating. For example, in the garage he was in what we called ‘Analysis Mode’ and then he started to power down. But he changed his mind and asked J.A.R.V.I.S. to do a weather check and ATC check. At that point he changed into ‘Flight Mode’: the flight bubble came up, and the UI shifted around and converted to a flight-based HUD. This HUD was primarily cyan in colour to reflect the current trend (at the time).

Dav Rauch: When he first starts flying, we knew Tony Stark was kind of a dorky inventor, he was kind of geeky. He’s kind of cool and hip and funny and trendy and all that, but at heart he’s a tinkerer. I was thinking about what I know about engineers and tinkerers: they want it all. They’re not going to make a really specific, minimal design because they’re too into having all the options available to themselves. So we actually intentionally made that first one, the Mark II, really kind of super busy, with lots of detail, lots of numbers, lots of information. Our idea was that he’ll evolve, he’ll get more sophisticated, he’ll realise once he’s flying around that he doesn’t want all that shit and he kind of can’t deal with all that shit, and that he’s going to want something much more simple, pared down, elegant and minimal as a second version.

Jonathan Rothbart: We had looked at a lot of reference. I mean, fighter jets have HUDs, but they’re pretty much static, with all the information out there in front of you at all times, in a fixed place. We wanted it to be moving, but we also didn’t want to make it cluttered. Which is why we came up with this concept of things flying in and flying out, so that we didn’t overload all this data in front of his face while he was talking and acting. It was also about adding a Tony Stark design flair to it, but making it kind of obvious and intuitive.

Kent Seki: For the Mark III, we had to take the Mark II HUD and elevate it. It took a while, but we eventually came up with the next evolution. We decided that for the next incarnation, Tony would get rid of the different mode switching that was in the Mark II HUD. Instead, the HUD would have one mode that would allow him to access each mode as an expert user. In order to facilitate this, we came up with a different UI strategy. The concept here was that in the next iteration, Tony would have consolidated the widgets that used to line the bottom of the HUD (power, health, targeting, diagnostics, etc.) into one larger widget so that his eye movement would have to cover less ground to activate the various sub-widgets. It was just more efficient. When we discussed this concept, we tasked Dav and his team to develop an exploded keyhole design concept.

Dav Rauch: We figured, well, there’s probably like two to four things that he would want all the time. He’s going to want to know about the flight, he’s going to want to know about his health, his health was a big plot point with that energy thing that he’s got in his chest. So we came up with this Omega widget that would have a little bit of each of those things.

Kent Seki: The idea was that the widget was constructed of a 3D interface that locks together like a keyhole. When each specific widget was deployed, the keyhole turned, unlocked, fanned out, and released the sub-widget. When the task completed, the Omega widget collapsed back into itself. It was a mechanical visual in a digital form. We liked the idea of unlocking its various functions. It was Wesley Sewell who had the brilliant idea to change the colour palette of the HUD from cyan to predominantly white, with colours to accent information, when Tony upgraded to the Mark III.

Jonathan Rothbart: When we got to the Mark III, we just started talking and we were like, ‘You know, let’s just say cyan is passé,’ and we ended up going with white because nobody had really been dealing with white graphics. So we wanted to do something more unique and different in that way when we got to the Mark III suit.

Kent Seki: And with the Iron Monger HUD, there was a third iteration, of sorts. Obadiah Stane’s version would represent the most basic HUD, a military-grade one without any of the UI bells and whistles that Tony would have designed. This was reflected in its basic, one-dimensional space and in the intentionally unimaginative choice of OCR-A as its primary typeface. The monochromatic red colour is evocative of its intended military usage.

We thought of it this way, Obadiah probably stole Tony’s underlying tech for the HUD, maybe the operating system, but not the UI that ran it. It, once again, reflected the different characters of Tony Stark and Obadiah Stane. Tony really was the master inventor who would, of course, create an elegant UI to drive the operating system of the HUD. Obadiah would not have the time, patience, or skill to create that UI and would settle for the most basic version.

The right tools for the job

Jonathan Rothbart: The final HUD graphics were all composited in NUKE but the individual elements that had animation to them – the graphics themselves – were done in After Effects. The graphics flying in and flying out and moving to different parts of the screen were done in NUKE, which means they could go all the way around his head and fade on and off. So we needed all that 3D space and NUKE was the only way we could really have it in 3D space and composite at the same time.

It was either going to be NUKE or Maya, but we really didn’t want to have to go into Maya to do it. So we ended up trying it all in NUKE, but it was a beast; it’s not really what NUKE was designed to do then. Luckily, we had an amazing compositor in Kyle McCulloch. Kyle has such a great mind for compositing and such a skill with NUKE and scripting and everything else, and he really just has an amazing eye.

Kyle McCulloch (compositing supervisor, The Orphanage): Oh God, it was hard. In hindsight, we learned a lot. I don’t think we understood at the time, and certainly now after another decade of working with NUKE, I’m much more familiar with it. But at the time, I don’t think I, or anyone at The Orphanage had a real good knowledge of how NUKE manages memory, and we were using the 3D system, which is very memory intensive. We kind of got backed into this corner of rendering, where we were doing a lot of really expensive things. And the comp and the thing that we had built to drive the HUD was so dense that you were sort of 10 layers deep of pre-comps of building eye reflections and putting in different things and depths and then blurring them and having motion blur and all these things.

That was right around the time when The Orphanage was starting to move fully to NUKE. The Orphanage was famous for being an After Effects shop, so it was, in some ways, heretical that we were moving to NUKE. I had been using After Effects for 10 years; that was where I did all my motion graphics work, and I was very comfortable with it. But I was also a seasoned NUKE user at that point. We were doing those early tests of the different informational units of the HUD, where they move and how it all works, and I kept finding myself reaching for something that would allow me to drive a really three-dimensional-looking performance out of the elements. After Effects certainly has, and at the time had, a 3D system of sorts, but it was always a bit of a cheat. After Effects didn’t have an actual representation of 3D space in which to work in real depth, whereas NUKE did.

Jonathan Rothbart: We were definitely pushing the envelope of what NUKE had, it was still very early for 3D in NUKE. I mean now you can put all sorts of geo in NUKE and projection map on it, but at the time, it hadn’t really done that level of 3D. Still, it was tough to do. What really killed it was the animation. We had to have the motion blur just turned up to maximum otherwise it stepped. There were many, many long nights where I finally just said, ‘Screw it, I’m going home, it’s 3am,’ and Kyle was still there working on it. The motion blur was just a beast because we had all of these layered elements that were blurring through frame and coming in and coming out, which means, when they’re moving that fast, you have to have so many steps to keep it smooth. And it just was breaking NUKE’s brain.

Kyle McCulloch: When we were doing those early movement tests and early layout tests, I was running a setup in both pieces of software. After Effects definitely had an upper limit in what it could manage in terms of memory and rendering. NUKE was more powerful. The real deciding factor was that NUKE had a real 3D system of sorts, and we were also able to drive the reflections on Tony’s eyeballs live with reflections and projections inside NUKE, as opposed to having to do something separate in CG and then comp it in After Effects.

Kent Seki: I think the success of the depth goes back to the second of John Nelson’s three rules: to use the Z-space, especially that of the implied graphics and elements that go beyond the shot’s field of view. For us, the depth also included the movement of the HUD. That is, the way the HUD graphics moved and drew attention or activated was itself used to create depth. Also, the graphics locked to his head movement, but The Orphanage built in a way to both offset and dampen this movement to give the graphics a little play as Tony moved his head. In addition, The Orphanage put a reflection of the graphics in his eyes. As the graphics were designed, attention was paid to how to dimensionalise them and place them on differing planes. That way, when the camera or head moved, you would get more parallax. Finally, the shallow depth of field created a sense of depth as well. It was quite tricky to realise, and it required a great deal of trial and error.
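Seki’s point about placing graphics on differing planes comes down to basic perspective. Under a simple pinhole-camera model, a sideways camera (or head) move of T shifts a point at depth Z across the frame by f × T / Z, so nearer planes slide further than distant ones. The sketch below only illustrates that relationship; the focal length, move and plane depths are made-up values, and nothing here reflects how The Orphanage’s NUKE setup actually computed it.

```python
def screen_shift(focal_px: float, camera_shift: float, depth: float) -> float:
    """Horizontal image-space shift (in pixels) of a point at `depth` when a
    pinhole camera translates sideways by `camera_shift` (same units as depth)."""
    if depth <= 0:
        raise ValueError("depth must be positive (point in front of the camera)")
    return focal_px * camera_shift / depth


# Hypothetical HUD planes at increasing distance from the camera (arbitrary units).
planes = {"near widget": 0.5, "mid widget": 1.0, "far widget": 2.0}

# Assumed 1000 px focal length and a small 0.05-unit sideways move.
shifts = {name: screen_shift(1000.0, 0.05, z) for name, z in planes.items()}
```

Because the shift is inversely proportional to depth, the near plane here moves four times as far across the frame as the far plane for the same move, which is the parallax that makes layered graphics read as occupying real space.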

Dav Rauch: The first thing we had to do was a matchmove on the plates to understand where RDJ’s head was. Then we needed to matchimate his head, because we had to attach the HUD to his head. Then we also needed to matchimate his eyes, since we needed to know where he was looking and when he was looking at things. Then we built the HUD and attached it to his head. We didn’t absolutely attach it to his head; we kind of matched it to his head. When there were times when the suit was exploding and under stress, we actually made it lag a little bit so it wasn’t quite keeping up with his head. We added this control so that we could have it match his head movement really tightly, or loosen it up a little bit and start to have bugs in it.
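Rauch doesn’t say how the ‘tight versus loose’ control was built. One common way to get that behaviour is per-frame exponential smoothing, where the HUD moves a fixed fraction of the remaining distance toward the tracked head on every frame. The minimal sketch below assumes that approach, with a hypothetical `stiffness` parameter standing in for the control; it works on a single channel (say, head yaw in degrees) and would be applied to each channel separately.

```python
from typing import List, Sequence


def follow_head(head_track: Sequence[float], stiffness: float) -> List[float]:
    """Return a HUD track that chases `head_track` frame by frame.

    stiffness = 1.0 locks the HUD rigidly to the head; smaller values
    (0 < stiffness < 1) make it lag behind the head's motion.
    """
    if not 0.0 < stiffness <= 1.0:
        raise ValueError("stiffness must be in (0, 1]")
    if not head_track:
        return []
    hud = [head_track[0]]  # start aligned with the head on the first frame
    for target in head_track[1:]:
        prev = hud[-1]
        # Close a fixed fraction of the gap between HUD and head each frame.
        hud.append(prev + stiffness * (target - prev))
    return hud


head = [0.0, 10.0, 10.0, 10.0]    # hypothetical head yaw per frame, in degrees
locked = follow_head(head, 1.0)   # 0.0, 10.0, 10.0, 10.0 (tracks exactly)
loose = follow_head(head, 0.5)    # 0.0, 5.0, 7.5, 8.75 (trails, then catches up)
```

With `stiffness=1.0` the graphics sit exactly on the track; lowering it makes them trail the head and settle a few frames later, which is the kind of lag described for shots where the suit is under stress.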

Then we put two cameras where his eyes would be – those cameras were looking out and recording the heads-up display. So we had a front camera, we had the heads-up display, then we had the eye cameras. We animated the heads-up display, recorded it with the two cameras in the eyes, and then used those two cameras to re-project what they were seeing onto eyeball geometry, so that we could create both the heads-up display floating around his head and the eye reflections at the same time within the same rig. It was kind of insane. We had two totally different rigs: an After Effects rig for the POV stuff and a NUKE rig for the RDJ stuff.

When I was designing, I would design in After Effects and Illustrator, and we would animate the elements in After Effects for the POV shots. For something like the altitude or the yaw, we would create a full 360 degrees of animation and render out the frames. Then we'd bring those assets into NUKE. As the yaw or the altitude changed, we would just write an expression that referenced the frames we had rendered out of After Effects (or out of After Effects with the Illustrator assets), and that would animate in NUKE.
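The trick Rauch describes amounts to a lookup: render one frame per slice of a full turn, then let the tracked angle choose which frame to display. The sketch below shows only that mapping, assuming one rendered frame per degree and a sequence starting at frame 1; the function name and defaults are hypothetical, and it does not reproduce any actual NUKE expression syntax.

```python
def yaw_to_frame(yaw_degrees: float, frames_per_turn: int = 360, first_frame: int = 1) -> int:
    """Pick which pre-rendered frame of a full-turn dial animation to display.

    Assumes frames first_frame .. first_frame + frames_per_turn - 1 cover
    0..360 degrees evenly (hypothetical layout).
    """
    if frames_per_turn <= 0:
        raise ValueError("frames_per_turn must be positive")
    wrapped = yaw_degrees % 360.0  # Python's % maps negative angles into [0, 360)
    index = round(wrapped / 360.0 * frames_per_turn) % frames_per_turn  # 360 degrees wraps back to 0
    return first_frame + index


# A heading of -90 degrees is the same as 270 degrees, so it shows frame 271.
frame = yaw_to_frame(-90.0)
```

Pre-rendering the dial once and indexing into it is cheap compared with re-animating the graphic for every shot, which is presumably why the approach was attractive when render time was scarce.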

Kyle McCulloch: I remember we had some frames, like a single frame, that would take 48 hours to render out of NUKE, which is insane. That's really not how you're supposed to work. There are 100 ways to optimise that, or to break the NUKE scripts apart and make them easier to use. But at the time, a lot of the time we were just brute-forcing it and letting things render for two days straight, because we didn't know any better and we didn't have any more time to figure it out.

The opening mask shot, and what could have been

Kent Seki: I have to say that ‘First Flight’, in which Tony dons his silver Mark II suit, is one of my favourite parts. In the beginning of the sequence, you see components of the armour being applied, followed by a POV of the mask coming up to his face, then the very first HUD shot of Tony as the graphics turn on. This is the moment where the HUDs could succeed or fail. Luckily for us, we got things more right than wrong. The audience was with us.

John Nelson: For that mask shot, we couldn’t figure out how to get inside this virtual space. So we said, ‘OK, we’re in a real space,’ and Wes Sewell had this mask in his hand and I was shooting camera, and we said, ‘OK, just bring it up to the camera, and that brings us into the virtual space.’ And then on that shot we put in some graphics over the eyes so you knew it was sealing off. Then come around to the other side of that, and you see Robert and he’s going, ‘Okay,’ and then the whole thing wakes up.

Kyle McCulloch: I did hundreds and hundreds, if not thousands, of versions of that shot over the course of almost a year and a half. In a way it's no different from any of the other HUD shots, but every time I see it I remember that it was the one where, after months and months of building, throwing things away, trying different stuff and reworking it, we finally got it. Jon Favreau and Victoria Alonso said, 'That's the HUD. You've done it, that's it.'

Dav Rauch: But actually there was a whole sequence, from when he first puts the helmet on, that was storyboarded and shot, and we kept working on it over and over. It's a whole scene that nobody has ever seen in the film, and the whole point of it was to explain what this HUD thing was. If you think about it, the HUD is something no one has ever experienced, with these impossible camera angles on Tony's head. So they storyboarded a scene that tried to explain it: there were little sensors in the mask scanning his face and watching his mouth, so that he could have something like a video conference while he's flying. The people on the other end would want to see what he's doing and see his face, just as you would in a video conference, with the suit tracking his face.

But when they started testing the film, they showed it to test audiences and people were like, 'Oh, duh, it's a heads-up display.' They'd ask the test audience, 'Well, what does that do?', and they'd say, 'Duh, it's the view of him operating it.' So trying to explain it only made it more confusing. Even though the heads-up display was something nobody had ever seen and that totally didn't exist, something about it seemed so 'right' based on what we want that people saw it and thought, 'I know what that is. That's exactly what I want. It's the thing that tells me everything I want to know about the world around me. How awesome would that be. I understand it and I want it.'

HUD-ing into the future

Kyle McCulloch: We were down into the final minutes of this thing trying to get it out and still taking on design changes and notes and updates. We had the tiniest of render farms and a handful of artists sitting in these poorly air conditioned rooms. I still joke about this now, that it’s been years since I worked all the way through the night and all the way through the next day, but that was a pretty common thing on Iron Man, where it was just around the clock trying to solve the problems that we had never tried to tackle before and get things out in time for the film.

Kent Seki: I would like to say that working on Iron Man was a true honour for me. I can't overstate how much I learned from and appreciated the entire VFX team, especially John Nelson, Victoria Alonso and Wesley Sewell. Jonathan Rothbart's team at The Orphanage, led by Dav Rauch, also deserves recognition for their amazing work. Robert Downey Jr. gave us all the gift of his performance to drive our shots. All of this was under the leadership and guidance of Jon Favreau. No one person had all the answers as to how to pull off these shots; it was the successful collaboration of all the players that created the final product. The opportunity to work on the HUD shots was a dream come true. It was an experience I won't ever forget.

Dav Rauch: I remember being super inspired by Minority Report, and I still am. I always thought there was something about Minority Report that seeped into the zeitgeist, into people's imaginations, in a deep way that left a mark. I remember thinking, 'I could die a satisfied man if I worked on something that had that same effect.' I totally didn't think Iron Man was going to be that. I hope that it has been; I really hope so. I think for certain people maybe it has. A few people have told me something to that effect. If it has in fact done that, that is the greatest gift, the greatest thing.

Wesley Sewell: I got to work as the stereographic supervisor on some of the subsequent Marvel films, which is where we did Iron Man’s HUDs in stereo. It was like the perfect place for both looking at the characters and also the POVs as well. We could really have some fun with that. By then, Cantina Creative was involved in making the HUDs and they did such a great job.

Kyle McCulloch: It’s been interesting to reflect on how Marvel operated then on that first project with Iron Man, and how that has sort of become the Marvel way of doing things over the last decade. You see characteristics of how that show came together and how that’s now becomes their workflow. I mean, they were very nascent as a studio. They didn’t have the big art departments in-house working for things. But they were only just very beginning to start this development, what the Marvel visual language was going to be. It was a unique project that’s really burned into my mind. I’m not going to forget it any time soon.

John Nelson: What the HUD really helped with is bringing everyone back to showing that there was a human in there. Because you could cut inside, and then Robert would be inside, and you’d go, ‘Oh, it’s Robert, I get it.’ It’s not just a faceless Iron Man.

Wesley Sewell: The good news is: it worked. Again, we were so concerned, and everybody was so concerned. It wasn't until we actually cut it into the film and started watching the sequences that we knew. The fact is, it worked, and we were so pleased.

Jonathan Rothbart: I’d be lying if I didn’t say I took a little pride in our work and definitely see all these other films and people are referencing it since, and have it in their mind as their trying to create the stuff. It’s cool to create stuff but when people want to re-create and, I wouldn’t say, ‘rip off’, but it’s obviously a neat compliment.

Kent Seki: As far as Easter eggs go, I would encourage any viewer to go back and rewatch the HUD shots specifically. While doing so, they should ask themselves two questions: What is the story of THIS shot? What is the Alpha moment of this HUD? Answering those two questions will reveal the Easter egg in the HUD, whether it's the flight paths and patterns for LAX, the altitude record set by the SR-71 Blackbird, Rhodey's contact information when he calls Tony during the dogfight, or how Tony's power level is holding up during the third act.

Previously published on vfxblog.com.

Illustration by Maddi Becke.