A journey into making a digital human for a VR experience with just the right tools.

When television and interactive production company Cream Productions was looking to adapt TV celebrity Les Stroud’s Survivorman series into an interactive VR game, it needed a way of ‘digitizing’ and animating a realistic digital double of Stroud. On top of that, this virtual Stroud had to run efficiently on the Oculus Quest.

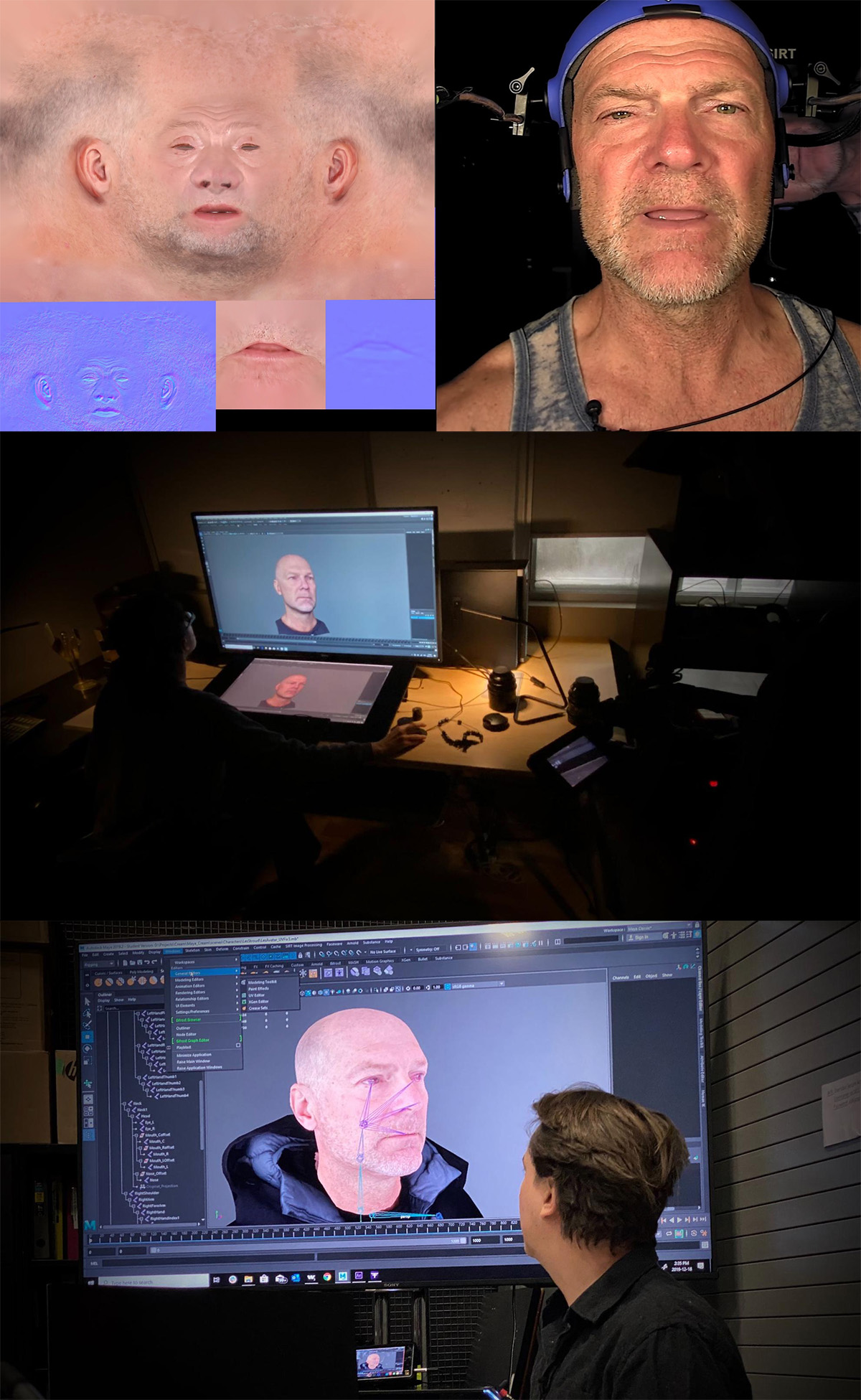

Partnering with Sheridan College’s Screen Industries Research and Training Centre (SIRT), Cream initially oversaw a complex photogrammetry scan and rigging of Stroud, but soon realized it needed a more efficient, but equally convincing, approach to the CG human.

That’s when the team turned to Reallusion’s Character Creator and iClone, using those tools to craft Stroud as a virtual reality character. The resulting game from Cream, Survivorman VR – The Descent, is a single-player VR game for Quest and PCVR in which players must survive a realistic simulation set in natural, and dangerous, locations around the world, under the guidance of an avatar of Les Stroud. It is due for release in early 2023.

befores & afters caught up with Cream executive producer Andrew MacDonald and Sheridan College creative research lead Stephan Kozak to discover more about the journey to create the Survivorman VR experience.

b&a: It seems like, for Survivorman VR, you were on a bit of a journey to find the right ‘way’ to make this. What is the process you took to get there?

Andrew MacDonald: Well, firstly, Cream is a television production company that’s been around for almost 20 years. We produce television in the docudrama-reality area. The owner of the company, David Brady, is a very forward-thinking kind of guy. He’s always into the latest, newest technology. In 2015, someone had come along and put a 360-degree video of, I think it was, a Paul McCartney concert on his face. He was like, ‘This is crazy. I want to be able to do this.’ That’s where I came into the picture, because I was a freelance camera shooter-director guy who shot some of the television series they produced. I said, ‘Why don’t we carve out a little experimental incubator budget, rig up some GoPros and shoot a 360 short?’ And we did.

We started with this homage to Hitchcock where we recreated the shower scene from Psycho, in which Janet Leigh gets stabbed. Back then in 2016, you had to build your own VR cameras, so we built our own and shot this film, and we were able to stick a camera in a shower and still stitch the footage together without stitch lines.

From there we got invited to Cannes with a 360 horror film. Then it was off to the races for the next year, because everyone was like, ‘Oh, neat, 360 video.’ But, as we all discovered very quickly, there was no real revenue model in 360 video. There was no real platform. These films didn’t go out on traditional television, and everyone could see them for free on YouTube, so it just didn’t seem to have any legs, really. But at that time, the first Oculus DKs were coming out, and I was looking at that as, ‘This is where this is going.’

b&a: So, you pivoted to VR?

Andrew MacDonald: In Canada, we have this rather robust content-creation funding system. We were successful at securing some of these funds to create content in game engines for VR. We started with host-driven content, which we knew we were good at. We had a show called Wild Things, with Dominic Monaghan, one of the actors from Lord of the Rings, who would run around the world checking out snakes and spiders. So we went down that path of, ‘Let’s make some volumetric content, take some of these celebrities we work with and put them in as digital characters.’ That resulted in the VR sci-fi piece “Dark Threads” on Steam.

We quickly realized that when you’re making digital doubles of real people and selling them as ‘a Hobbit from Lord of the Rings’, it’s really tough to get them accurate and playing well. Then, on top of that, trying to render it all in stereo on a Meta Quest headset, with its mobile processor, and get it running smoothly is a monumental task.

That’s where Stephan comes in, because we had been introduced to Sheridan College in Toronto, which is world-renowned for digital content. They have an innovation programme there called SIRT, which is their Screen Industries Research and Training Centre. We accessed a small government fund to do an introductory programme there, where we pitched the idea of projecting video onto a blank slate of a face and seeing if we could create a character from that. And we got a decent result. Stephan was smart enough to go, ‘You know what, there’s some potential here. Why don’t we try this with an animated mesh and see if we can take a video of the face and sync it with the animated face.’

b&a: Stephan, do you want to talk a bit more about that side of things?

Stephan Kozak: Sheridan College’s SIRT is at Pinewood Toronto Studios. We’re on a 10,000-square-foot stage on the lot. When I started at SIRT, I led the virtual human development, which includes animation, CG and visual effects. We were exploring creating digital humans as people. It was all about their performances and our interactions with them. We were also exploring how story can create an emotional awareness between the content we’re creating and the audience.

At SIRT, we work with companies to help them explore technology and innovate their production workflows and experiences. That’s where Cream comes in. They asked, ‘Can we make a digital double for less?’ We said, ‘There’s got to be a better way of doing this.’ We started with the facial projection to see how we could capture a person’s performance and reproject it.

b&a: How did it grow from there?

Stephan Kozak: Well, since I came from the production world, I know about the production pipeline. You need to implement one if you’re going to create something that’s repeatable, something that is commercially viable for someone else to use.

So, that’s what we did. The whole question was, how do you make this as automated as possible, and as friendly as possible, so that anyone can do it? That was the beginning of the journey. We relied on Faceware for facial capture, for its robust animation and retargeting tools, and on Reallusion to automate the character pipeline in Character Creator, creating and re-using blend shapes, textures and skeletal systems.

b&a: Ultimately, then, for the Survivorman VR project, you needed to create a believable digital Les Stroud using this research you’d been doing. How did you bring that character to life for VR?

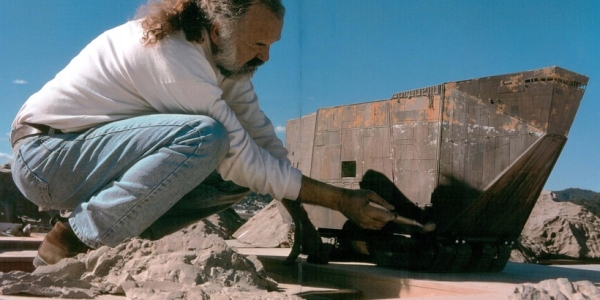

Andrew MacDonald: Survivorman was perfect timing because we had really honed the whole pipeline. The inclusion of Reallusion happened just prior to making the Les Stroud character, and it solved a whole bunch of things for us. For our previous character, Dominic Monaghan, we used photogrammetry to scan his body. I think we spent about $40,000 sending it off to a company to get it all rigged and set up with pose shape deformers and everything, just to get this whole body working. We ultimately threw that in the garbage and started using Character Creator features instead.

Our process is strictly about the face: the eyes, the mouth and the nose. Now that we can plug everything into Reallusion, everything else, like clothing, grooming and body shape, is taken care of. With a pipeline that creates really lightweight, efficient digital doubles that work on the Oculus Quest, and with the celebrities attached to the projects, it’s a nice, tight, tidy circle that lets us put together a show like Survivorman VR.

b&a: Stephan, what was the technical process of turning Les Stroud into a CG character, from capture through to Character Creator and the other tools?

Stephan Kozak: We actually did both the traditional workflow and the new workflow with Les. We started with the full photogrammetry cycle, capturing 52 different facial positions to get all the required blend shapes. Then we started using Reallusion and realized the difference was minimal, so we said, ‘You know what, let’s just use the Reallusion blend shapes and make this as efficient as possible.’ In the end, we found we only needed a single head scan, a neutral expression to get the proportions and shape of the head, and we did that with an inexpensive structured-light scanner.

Andrew MacDonald: We can even use an iPhone 13; with a bit of cleanup, we basically get the same results.

Stephan Kozak: We brought that scan into Character Creator, used the ZBrush exchange tools to make some small fixes to the positioning and add extra micro detail, and then brought it back into Character Creator. I think Reallusion’s ability to connect with other software and tools without leaving Character Creator is what made it a no-brainer for our pipeline. Especially when you’re matching the way characters speak, there are a lot of nuances to facial positions. We were able to do that relatively quickly with just one neutral face shape, referencing it against a range-of-motion (ROM) performance.
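For readers unfamiliar with blend shapes, the idea behind needing only one neutral scan can be illustrated with generic linear (delta) blend-shape evaluation: each expression is stored as an offset from the neutral mesh, and the rig adds weighted offsets back onto it. The Python below is a minimal sketch under that assumption. It assumes every target shares the neutral mesh’s vertex order, and the function and shape names (`evaluate_blendshapes`, `jaw_open`, `smile`) are hypothetical illustrations, not Reallusion’s or Character Creator’s actual implementation.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def evaluate_blendshapes(
    neutral: Sequence[Vec3],
    targets: Sequence[Sequence[Vec3]],
    weights: Sequence[float],
) -> List[Vec3]:
    """Generic linear delta blending (an illustrative sketch only).

    result[i] = neutral[i] + sum_k weights[k] * (targets[k][i] - neutral[i])
    Assumes each target has the same vertex count and order as the neutral.
    """
    if len(targets) != len(weights):
        raise ValueError("one weight per target shape is required")
    result: List[Vec3] = []
    for i, (nx, ny, nz) in enumerate(neutral):
        x, y, z = nx, ny, nz
        # Add each target's offset from the neutral, scaled by its weight.
        for target, w in zip(targets, weights):
            tx, ty, tz = target[i]
            x += w * (tx - nx)
            y += w * (ty - ny)
            z += w * (tz - nz)
        result.append((x, y, z))
    return result


if __name__ == "__main__":
    # Hypothetical two-vertex "face" with two sculpted expression targets.
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    jaw_open = [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)]
    smile = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0)]
    # Half-open jaw combined with a full smile.
    print(evaluate_blendshapes(neutral, [jaw_open, smile], [0.5, 1.0]))
    # prints [(0.0, -0.5, 0.0), (1.5, 0.0, 0.0)]
```

Because expressions are stored as offsets rather than as separate full meshes, animating a face comes down to animating a small set of weights over time. Production rigs typically layer corrective shapes and weight clamping on top of this simple linear model.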

b&a: What tools in Character Creator and iClone and other Reallusion products were you particularly taking advantage of?

Stephan Kozak: Well, we use all the tools. We start with the character building and skinning tools. We also used ActorCore because it has a really good collection of animations, and we used that in conjunction with Les Stroud’s motion performances. The neutral and other idle or generic animations are from ActorCore.

We made our clothes in Marvelous Designer and ZBrush, then transferred the skin weighting seamlessly into iClone. Not having to worry about re-skinning or bad weights is one of the advantages of working with Reallusion, for sure.

Another thing we’ve been testing is adjusting the meshes on the bones, as well as automated speech in iClone. Our next step is using machine learning to create novel performances from previously animated performances.

The hair tools are also great. We had hair on Les Stroud as well: we used the fur grooming in Character Creator for his beard and facial hair.

b&a: I guess what you’re talking about overall is finding the right level of fidelity with a digital character, but one that doesn’t take up so much time that it’s unachievable or unaffordable. I mean, I assume you really don’t need to do a Spider-Man/Tom Holland-level CG human?

Stephan Kozak: That’s right. And you can’t yet get that level of detail and quality running on the Quest. So the approach we came up with, using Reallusion, was just perfect.