Custom 3D models will be trained on Shutterstock content using NVIDIA’s Picasso generative AI cloud service.

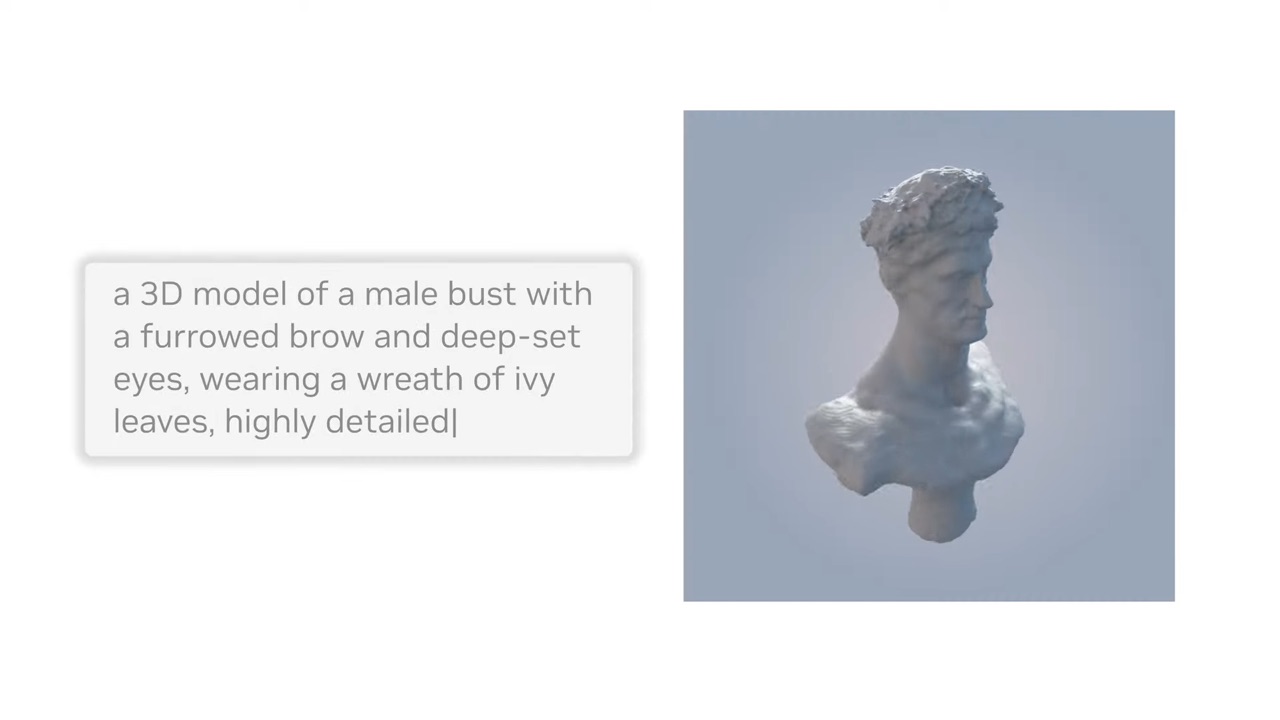

Things move fast in AI right now. Shutterstock and NVIDIA have announced they are teaming up to train custom 3D models using Shutterstock assets to create generative 3D assets from text prompts.

NVIDIA’s Picasso generative AI cloud service will be used to convert text into high-fidelity 3D content. The idea is that software makers, service providers and enterprises can use Picasso to train NVIDIA Edify foundation models on their proprietary data, then build applications that use natural-language text prompts to create and customize visual content.

In Shutterstock’s case, the models will be available on Shutterstock’s website. The text-to-3D features will also be offered on Shutterstock’s TurboSquid.com, and are planned for NVIDIA’s Omniverse platform.

Generating ‘useful’ 3D models from text prompts has been something artists have been talking about ever since generative AI art made it into the mainstream. befores & afters asked Shutterstock’s VP of 3D Innovation, Dade Orgeron, more about the NVIDIA partnership, including how it works, and where artists and attribution fit into the process.

b&a: From the moment people were jumping onto different AI and ML tools from last year or even before that, that idea of having a 3D model, but also having the mesh of it and having it rigged and being able to manipulate it, was something all my VFX and animation and 3D friends and myself wanted to see. But everyone also realized that that’s hard.

Dade Orgeron: It is.

b&a: What are the technical hurdles to get over to enable those things to happen?

Dade Orgeron: Well, I think the first iteration is going to be quite simple. The first iteration will be detailed models. That’s probably one mesh, not broken up into semantic parts. It’s probably going to have simple textures, probably not materials. So it has a ways to go for sure. But you have to start somewhere. And we want to be there at the beginning to figure out how this is going to work for a variety of different artists.

One of the things that’s really important is that TurboSquid is now part of Shutterstock. I came along with that acquisition. I was with TurboSquid for nine years before that, and we worked very closely with our contributors who work in a variety of different ways to build 3D content, lots and lots of 3D content.

The problem has always been the same. It’s always been this sort of walled garden. It’s really, really hard to learn 3D. It’s really hard to manipulate 3D. Even if you become a master, you’re still probably a master of one or two facets of 3D and you can’t do other things.

And so really this isn’t an opportunity, per se, to completely take 3D out of the hands of the creatives. I think this is an opportunity to make it easier for 3D artists to be able to create content more quickly. If you can have a mesh generated for you and then you can worry about breaking it into semantic parts, you can worry about how you want to texture it and so forth and so on, maybe even using AI tools for materials and texturing later in your pipeline, we think those are all opportunities that are really amazing to enable 3D artists.

It’s really for us as we look at this quiver of artists that are around the world who are part of our contributing network, we look at all the different ways that they work and we want to simplify that and make it easier for them to create content and make money.

b&a: What are the images being trained on? Tell me about the Shutterstock/TurboSquid database and where you’re training this from.

Dade Orgeron: So essentially that’s what this deal is all about. It’s basically taking all of our data, 3D, image, video, and using that in order to train these models. One of the really interesting things is that Shutterstock, along with TurboSquid, along with Pond5, we have not just a massive library of content, but we have a massive library of data that goes along with that content. That data is very valuable for machine learning. And so it adds a tremendous amount of value to each asset as it’s able to be used for training.

The thing is that what we wanted to make sure, with over 20 years of experience with licensing and copyright and understanding the value of a contributor, what we wanted to make sure was that we’re at the forefront of that. That we were then able to make sure that we gave everyone the opportunity to opt out, that we would then take data and make sure that people weren’t able to actually reuse that to create even more royalty free content. We really wanted to be in control or mindful of the way that we were able to reward contributors for being part of this journey. So we’re really sort of at the forefront of figuring out what are some of the rules that go around AI-generated imagery.

b&a: Is that something you’re still figuring out? I think my artist readers will immediately ask about attribution and compensation. Where is that at the moment?

Dade Orgeron: We have a creator fund that we’ve started that will actually pay those contributors back. I think right now we haven’t determined whether it’s every quarter or every six months or twice a year. But regardless, there’s a contributor fund that actually goes back to anyone who’s contributed for those data deals and whose work then goes into additional content. So it’s a very fair, very ethically balanced way to say, hey, you’re contributing to this, you should be getting paid for this.

b&a: Obviously it’s a deal with NVIDIA. Tell me more about what NVIDIA brings and why that’s important to be able to use their Picasso cloud initiative to do it.

Dade Orgeron: So clearly, NVIDIA is well ahead in the AI field. And they should be. I mean, they’re not just offering tools and knowledge around AI, but they’re actually offering infrastructure for that. That was a really important decision or really important factor to help us make a decision on who’s best to partner with on these kinds of things, especially for our 3D.

NVIDIA understands 3D very, very well. Our visions are very much in line with 3D specifically. And they’re moving at the pace that we feel like we want to move at as well. We move very quickly for image generation. We’re now going to move very quickly as well for 3D.

The relationship with NVIDIA goes way back. We’ve been working with NVIDIA for many, many years. We’ve worked very closely with the Omniverse teams. We work closely with their SimReady team now to make sure that there’s content ready for simulation. We know very well they do the amount of content that’s needed for things that may not be what we were normally considering our customer base.

There’s an entire field out there of researchers who need 3D content for simulation, for machine learning, and for even more AI tasks. We are looking at, how do we fulfill that? We know that there’s just simply not, at the tools and the rate that artists are working now, there’s just not enough to go around. So we really need to enhance and make those tools better. NVIDIA wants to solve the same problem.