All about Manex’s approach to image-based rendering on Mission: Impossible II, from a member of the team at the time.

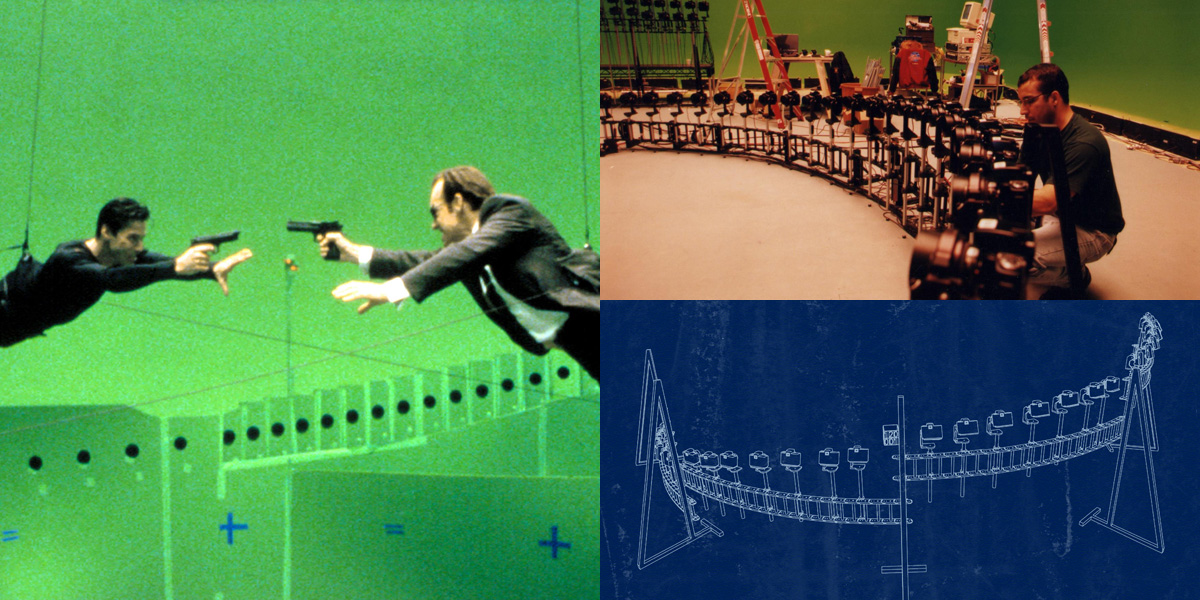

Over the course of several projects, Manex Visual Effects (aka MVFX) became renowned for innovations in image-based rendering. These techniques helped craft the virtual backgrounds in The Matrix’s bullet-time sequences, and were seen again in Michael Jordan To The Max and Mission: Impossible II (the John Woo film is celebrating its 20th anniversary this week).

The principals behind these virtual background developments were Dan Piponi, Kim Libreri and George Borshukov (who had earlier been involved in image-based rendering research with Paul Debevec).

In 2001, Borshukov, Piponi and Libreri were recognized with an Academy Scientific and Technical Achievement Award for “the development of a system for image-based rendering allowing choreographed camera movements through computer graphic reconstructed sets.” (They later also received one for their Universal Capture developments on the Matrix sequels, done at Esc Entertainment.)

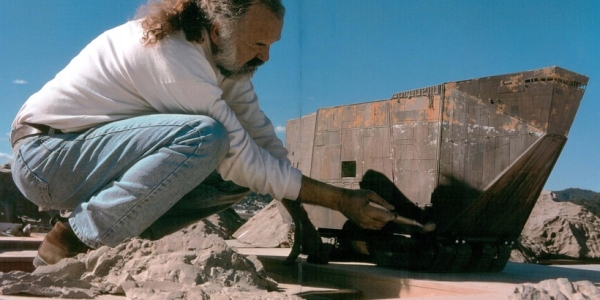

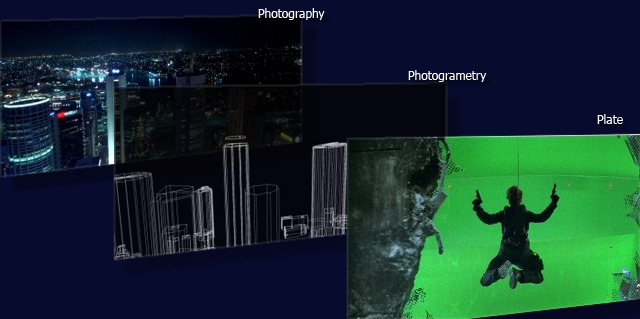

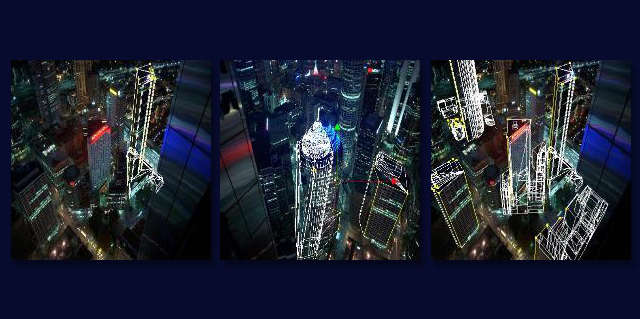

Essentially, these virtual background techniques allowed artists to take a large number of photographs of a specific location (for example, nighttime Sydney on Mission: Impossible II), reproduce that location as a completely photorealistic render, thanks in large part to the high dynamic range imagery used, and, importantly, place actors or action (in this case a leaping Tom Cruise) within that virtual environment.

Of course, many people might recognize this as the way photogrammetry and texture mapping are now used for virtual set builds. The way Manex, and later Esc, approached the work – which also included previsualizing the action – was indeed a precursor to the virtual production pipelines now common in VFX filmmaking.

Coming on board a Mission film

In 2000, Andres Martinez, who had worked in his home country of Colombia before studying at the Academy of Art University in San Francisco, joined Manex to work on Mission: Impossible II, beginning his involvement with the Manex/Esc virtual background innovations. He was introduced to the company by Greg Juby, his thesis advisor and an FX lead at MVFX. Martinez would later become the virtual backgrounds supervisor on the Matrix sequels and Catwoman, projects that took the art and technology even further.

On Mission: Impossible II, Martinez’s role was to guide the virtual backgrounds team and to bring together the image-based rendering techniques for virtual environments that Libreri, Borshukov and Piponi had already spearheaded on The Matrix.

“Kim Libreri had brought me on, and what I was able to do was I re-arrange some of the steps for the virtual backgrounds,” Martinez told befores & afters. “We got it to a place where it was just hours from taking the pictures to creating an environment. Nowadays there’s a button that does everything, but back then it was just the beginnings.”

Virtual background tech

The process on Mission: Impossible II worked like this: photographs of the Sydney location, shot on film, were scanned and brought into Manex’s pipeline. These photographs informed the 3D geometry build, which combined generic and bespoke modeling. Manex’s system then mapped the ‘real’ photography onto the models.
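The article doesn’t describe the internals of Manex’s system, but the general idea behind mapping photographs onto reconstructed geometry is projective texture mapping: each vertex is projected through the camera that took a photo, and the pixel it lands on becomes its texture coordinate. The sketch below illustrates that idea only. It assumes a simple pinhole camera with known position, rotation and focal length and no lens distortion; `PinholeCamera` and `texture_coords` are hypothetical names, not part of any Manex tool.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PinholeCamera:
    """Hypothetical camera record for one scanned photograph (pinhole model, no lens distortion)."""
    focal_px: float                      # focal length, in pixels
    cx: float                            # principal point x, in pixels
    cy: float                            # principal point y, in pixels
    width: int                           # photo width, in pixels
    height: int                          # photo height, in pixels
    rotation: Tuple[Vec3, Vec3, Vec3]    # world-to-camera rotation matrix, row by row
    position: Vec3                       # camera centre in world space

    def project(self, p: Vec3) -> Optional[Tuple[float, float]]:
        """Map world point p to (u, v) texture coordinates in [0, 1), or None if the photo cannot see it."""
        # Move into camera space: subtract the camera centre, then rotate.
        d = [p[i] - self.position[i] for i in range(3)]
        x, y, z = (sum(row[i] * d[i] for i in range(3)) for row in self.rotation)
        if z <= 0.0:
            return None  # point is behind the camera
        # Perspective divide, then shift to the principal point.
        px = self.focal_px * x / z + self.cx
        py = self.focal_px * y / z + self.cy
        if not (0.0 <= px < self.width and 0.0 <= py < self.height):
            return None  # point falls outside the frame
        # Normalise the pixel position to texture space.
        return px / self.width, py / self.height


def texture_coords(camera: PinholeCamera, vertices: Sequence[Vec3]) -> List[Optional[Tuple[float, float]]]:
    """Assign each mesh vertex a UV by projecting it into the photograph."""
    return [camera.project(v) for v in vertices]


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    cam = PinholeCamera(1000.0, 960.0, 540.0, 1920, 1080, identity, (0.0, 0.0, 0.0))
    print(texture_coords(cam, [(0.0, 0.0, 10.0), (0.0, 0.0, -5.0)]))  # prints [(0.5, 0.5), None]
```

A point straight down the lens axis lands at the centre of the frame, while a point behind the camera gets no texture from this photo. A production system would also need to choose between, or blend, several photos that see the same surface, which this sketch leaves out.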

“I ended up being responsible for acquiring the photography, which was the scanned film rolls. Once I had the scans, I created the HDRIs, utilized Shake scripts, created the directory structure, did the selects and then selected the artist who would be responsible for creating the sequences,” outlines Martinez, who singles out CG artists Enrique Vila and Carina Ohlund as the key creators of those virtual Sydney backgrounds.
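Martinez mentions creating the HDRIs but not how, beyond using Shake scripts. As a general illustration only, the core of merging bracketed exposures into a high dynamic range image is a weighted average of each exposure’s estimate (pixel value divided by exposure time), with clipped or near-black samples trusted least. This is a simplified take on the approach from Debevec and Malik’s HDR work: it assumes the scanned values have already been linearised, so the film response-curve recovery step is skipped, and `hat_weight` and `merge_exposures` are hypothetical names.

```python
from typing import List, Sequence


def hat_weight(z: float) -> float:
    """Triangle weight on a pixel value in [0, 1]: 1 at mid-grey, 0 at pure black or clipped white."""
    return max(0.0, 1.0 - abs(2.0 * z - 1.0))


def merge_exposures(images: Sequence[Sequence[float]], exposure_times: Sequence[float]) -> List[float]:
    """Merge bracketed exposures into relative radiance, one value per pixel.

    images[k][i] is the already-linearised value (0..1) of pixel i in exposure k;
    exposure_times[k] is that exposure's shutter time in seconds.
    """
    if not images or len(images) != len(exposure_times):
        raise ValueError("need at least one image and one exposure time per image")
    radiance = []
    for i in range(len(images[0])):
        num = den = 0.0
        for img, t in zip(images, exposure_times):
            w = hat_weight(img[i])
            num += w * img[i] / t  # this exposure's radiance estimate, weighted
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # Every sample is pure black or clipped: fall back to a plain average (a crude choice).
            radiance.append(sum(img[i] / t for img, t in zip(images, exposure_times)) / len(images))
    return radiance


if __name__ == "__main__":
    # One pixel shot at 0.5 s, 1 s and 2 s; the longest exposure has clipped to 1.0.
    print(merge_exposures([[0.25], [0.5], [1.0]], [0.5, 1.0, 2.0]))  # prints [0.5]
```

In the example, the two unclipped exposures both estimate a relative radiance of 0.5, and the clipped sample gets zero weight, so it cannot drag the result down.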

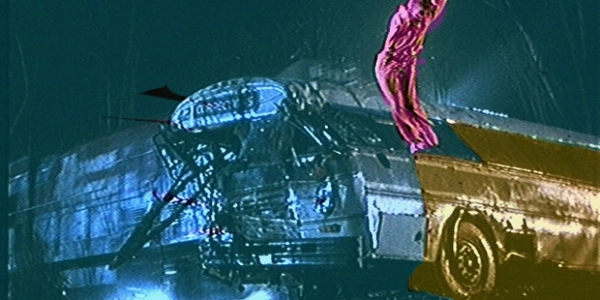

A virtual camera could then move through the resulting environment in 3D space, producing background plates for greenscreen photography of Tom Cruise, as Ethan Hunt, making various aerial jumps, including one key scene where he leaps out of a hole in a building and parachutes to the ground.

The Manex toolset to do this at the time was known as the real-time virtual system, or RTVS, effectively a plugin for Maya. Later, for the Matrix sequels, Dan Piponi rewrote the virtual backgrounds system at Esc as an image analysis and photogrammetry tool called ‘Labrador’.

Making a movie

Ultimately, the virtual backgrounds featured in only a few Mission: Impossible II scenes, but Martinez says they were highly memorable, both for the audience and for himself. “Everything that we did on Mission: Impossible II was a stepping stone for larger projects. And certainly a ground zero to where I am now on virtual production projects.”

It was also an early lesson for Martinez, who has since gone on to work at companies including Sony Pictures Imageworks, Digital Domain, Method, Pixomondo and his own studio LosFX, in the realities of feature film production, as a late addition to the shot count showed.

“The film was shipped but they came back for a reverse angle of Tom Cruise in the air where the camera tilted up as his chute opened,” details Martinez. “In that particular take, we see the boom, we see a couple of cranes. I was creating the environment for this shot, but the underside of the balconies on one of the buildings had never been built or for that particular scene the geometry did not exist – the alpha had a hole.”

“Next to me was a really good compositor, Dan Glass. I sent the shot to Dan and we were rushing to get it done, and he looks at me and says, ‘Andres, where’s the pixels for this?’ I’m like, ‘Oh no! There’s no time to re-render.’ I really felt like I screwed up. But Dan said, ‘Don’t worry, I can patch the alpha.’”

“Now I always tell people, every film has the last version, not the best version,” adds Martinez. “That’s what it takes sometimes to make a movie.”